May 29 12:27:37 proxmox06 pvedaemon[844439]: <root@pam> adding node proxmox04 to cluster

May 29 12:27:37 proxmox06 pmxcfs[3283024]: [dcdb] notice: wrote new corosync config '/etc/corosync/corosync.conf' (version = 14)

May 29 12:27:38 proxmox06 corosync[3280084]: [CFG ] Config reload requested by node 3

May 29 12:27:38 proxmox06 corosync[3280084]: [TOTEM ] Configuring link 0

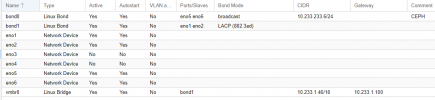

May 29 12:27:38 proxmox06 corosync[3280084]: [TOTEM ] Configured link number 0: local addr: 10.233.1.46, port=5405

May 29 12:27:38 proxmox06 corosync[3280084]: [KNET ] host: host: 1 (passive) best link: 0 (pri: 0)

May 29 12:27:38 proxmox06 corosync[3280084]: [KNET ] host: host: 1 has no active links

May 29 12:27:38 proxmox06 corosync[3280084]: [KNET ] host: host: 1 (passive) best link: 0 (pri: 1)

May 29 12:27:38 proxmox06 corosync[3280084]: [KNET ] host: host: 1 has no active links

May 29 12:27:38 proxmox06 corosync[3280084]: [KNET ] host: host: 1 (passive) best link: 0 (pri: 1)

May 29 12:27:38 proxmox06 corosync[3280084]: [KNET ] host: host: 1 has no active links

May 29 12:27:38 proxmox06 pmxcfs[3283024]: [status] notice: update cluster info (cluster name Proxmoxcluster2, version = 14)

May 29 12:27:46 proxmox06 corosync[3280084]: [KNET ] rx: host: 1 link: 0 is up

May 29 12:27:46 proxmox06 corosync[3280084]: [KNET ] host: host: 1 (passive) best link: 0 (pri: 1)

May 29 12:27:46 proxmox06 corosync[3280084]: [KNET ] pmtud: PMTUD link change for host: 1 link: 0 from 469 to 1397

May 29 12:27:47 proxmox06 corosync[3280084]: [QUORUM] Sync members[3]: 1 2 3

May 29 12:27:47 proxmox06 corosync[3280084]: [QUORUM] Sync joined[1]: 1

May 29 12:27:47 proxmox06 corosync[3280084]: [TOTEM ] A new membership (1.2184) was formed. Members joined: 1

May 29 12:27:47 proxmox06 pmxcfs[3283024]: [dcdb] notice: members: 1/12974, 2/204349, 3/3283024

May 29 12:27:47 proxmox06 pmxcfs[3283024]: [dcdb] notice: starting data syncronisation

May 29 12:27:47 proxmox06 pmxcfs[3283024]: [status] notice: members: 1/12974, 2/204349, 3/3283024

May 29 12:27:47 proxmox06 pmxcfs[3283024]: [status] notice: starting data syncronisation

May 29 12:27:47 proxmox06 corosync[3280084]: [QUORUM] Members[3]: 1 2 3

May 29 12:27:47 proxmox06 corosync[3280084]: [MAIN ] Completed service synchronization, ready to provide service.

May 29 12:27:47 proxmox06 pmxcfs[3283024]: [dcdb] notice: received sync request (epoch 1/12974/00000002)

May 29 12:27:47 proxmox06 pmxcfs[3283024]: [status] notice: received sync request (epoch 1/12974/00000002)

May 29 12:27:55 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 11 12 13

May 29 12:27:58 proxmox06 pvedaemon[1712750]: starting termproxy UPID:proxmox06:001A226E:9C9DCDD1:64747E2E:vncshell::root@pam:

May 29 12:27:58 proxmox06 pvedaemon[832679]: <root@pam> starting task UPID:proxmox06:001A226E:9C9DCDD1:64747E2E:vncshell::root@pam:

May 29 12:27:58 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 1a 1b 11 12 13

May 29 12:27:58 proxmox06 pvedaemon[831865]: <root@pam> successful auth for user 'root@pam'

May 29 12:27:58 proxmox06 systemd[1]: Created slice User Slice of UID 0.

May 29 12:27:58 proxmox06 systemd[1]: Starting User Runtime Directory /run/user/0...

May 29 12:27:58 proxmox06 systemd[1]: Finished User Runtime Directory /run/user/0.

May 29 12:27:58 proxmox06 systemd[1]: Starting User Manager for UID 0...

May 29 12:27:58 proxmox06 systemd[1712760]: Queued start job for default target Main User Target.

May 29 12:27:58 proxmox06 systemd[1712760]: Created slice User Application Slice.

May 29 12:27:58 proxmox06 systemd[1712760]: Reached target Paths.

May 29 12:27:58 proxmox06 systemd[1712760]: Reached target Timers.

May 29 12:27:58 proxmox06 systemd[1712760]: Listening on GnuPG network certificate management daemon.

May 29 12:27:58 proxmox06 systemd[1712760]: Listening on GnuPG cryptographic agent and passphrase cache (access for web browsers).

May 29 12:27:58 proxmox06 systemd[1712760]: Listening on GnuPG cryptographic agent and passphrase cache (restricted).

May 29 12:27:58 proxmox06 systemd[1712760]: Listening on GnuPG cryptographic agent (ssh-agent emulation).

May 29 12:27:58 proxmox06 systemd[1712760]: Listening on GnuPG cryptographic agent and passphrase cache.

May 29 12:27:58 proxmox06 systemd[1712760]: Reached target Sockets.

May 29 12:27:58 proxmox06 systemd[1712760]: Reached target Basic System.

May 29 12:27:58 proxmox06 systemd[1712760]: Reached target Main User Target.

May 29 12:27:58 proxmox06 systemd[1712760]: Startup finished in 130ms.

May 29 12:27:58 proxmox06 systemd[1]: Started User Manager for UID 0.

May 29 12:27:58 proxmox06 systemd[1]: Started Session 8264 of user root.

May 29 12:28:00 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 1a 11 12 13

May 29 12:28:05 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 1a 11 12 13

May 29 12:28:10 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 1a 11 12

May 29 12:28:13 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 11 12 1a

May 29 12:28:18 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 11 12 1a 20

May 29 12:28:23 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 22 11 12 1a 20

May 29 12:28:25 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 22 11 12 1a 20

May 29 12:28:31 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 22 11 12 1a 20

May 29 12:28:33 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 22 11 12 1a 20

May 29 12:28:36 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 22 26 11 12 1a

May 29 12:28:41 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 22 26 11 12 1a

May 29 12:28:46 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 22 26 28 11 12 1a

May 29 12:28:46 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 22 26 28 11 12 1a

May 29 12:28:48 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 28 12 1a

May 29 12:28:51 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 28 2a 12 1a

May 29 12:28:53 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 28 2a 1a

May 29 12:28:58 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 2a 2c 1a
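
To see which entries keep reappearing in the `Retransmit List` lines above, the log can be tallied with a short script. This is only a minimal sketch, assuming the journal output has been saved as plain text lines in the format shown here. The function name `retransmit_counts` and the regular expression are my own, not part of corosync or Proxmox, and the entries are treated as opaque tokens (they appear to be hexadecimal sequence numbers).

```python
import re
from collections import Counter

# Matches the corosync TOTEM retransmit lines as they appear in the log above.
# The pattern is an assumption based on this log's format only.
RETRANSMIT_RE = re.compile(r"\[TOTEM\s*\] Retransmit List: (.+)$")


def retransmit_counts(lines):
    """Count how often each entry shows up across all Retransmit List lines."""
    counts = Counter()
    for line in lines:
        match = RETRANSMIT_RE.search(line)
        if match:
            # Entries are whitespace-separated tokens such as "18" or "1a".
            counts.update(match.group(1).split())
    return counts


# Three lines copied from the log above; only the first two are retransmit lines.
sample = [
    "May 29 12:27:55 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 11 12 13",
    "May 29 12:27:58 proxmox06 corosync[3280084]: [TOTEM ] Retransmit List: 18 19 1a 1b 11 12 13",
    "May 29 12:27:47 proxmox06 corosync[3280084]: [QUORUM] Members[3]: 1 2 3",
]

counts = retransmit_counts(sample)
for entry, seen in counts.most_common():
    print(entry, seen)
```

Entries that stay on the list across many consecutive lines (here, for example, `18`, `19` and `1a`) are the ones that are never successfully delivered, which may help narrow down whether the problem is tied to the newly joined node's link.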