Hi all,

I think the whole mess came up because I tried to setup a cluster between two different pve versions, but I am not sure about that. I managed to upgrade both machines to 7.4-16, but still the system seems to be messed up.

The cluster join still fails.

This is what I get:

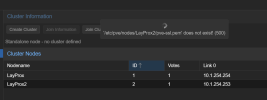

Cluster "host":

The new node shows up but does not turn green.

Error message pve-ssl.pem does not exist!

Cluster "client":

Node is stuck in the Join task

Latest output: "Request addition of this node"

In the end this failed cluster join leads to a fatal situation:

After a hard reset the host is not able to start the VMs any more because of a broken cluster configuration?!

one can force the start by executing

The only way out is to remove the cluster and the node directories with:

<Reboot>

On the other node it is similar. I tried to cleanup all the cluster and corosync files.

Now I am a little bit stuck.

I do have both pves on the same version.

Time is synced up and also same time zone (if that makes a difference.

I tried to cleanup all filles I thought involved.

Always the same. When I think I am clean and well prepared. I create a new cluster (fine). Then I add the node... stuck.

Hope you can help.

I think the whole mess started because I tried to set up a cluster between two different PVE versions, but I am not sure about that. I managed to upgrade both machines to 7.4-16, but the system still seems to be messed up.

The cluster join still fails.

This is what I get:

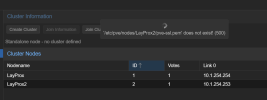

Cluster "host":

The new node shows up but does not turn green.

Error message: "pve-ssl.pem does not exist!"

Cluster "client":

The node is stuck in the Join task.

Latest output: "Request addition of this node"

In the end this failed cluster join leads to a fatal situation:

After a hard reset the host is not able to start the VMs any more because of a broken cluster configuration?!

One can force the start by executing:

Code:

pvecm expected 1

The only way out is to remove the cluster and the node directories with:

Code:

rm -f /etc/pve/cluster.conf /etc/pve/corosync.conf
rm -f /etc/cluster/cluster.conf /etc/corosync/corosync.conf
rm /var/lib/pve-cluster/.pmxcfs.lockfile

Code:

rm -rf /etc/pve/nodes/<nodename>

<Reboot>

On the other node it is similar. I tried to clean up all the cluster and corosync files.
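To avoid typos when repeating the removal steps above on each node, they could be wrapped in a small dry-run helper. This is only a sketch: the names `run_or_print` and `cleanup_cluster_files` and the `DRY_RUN` variable are made up for illustration, and the file list is simply the one from this post, not a verified procedure.

```shell
#!/bin/sh
# Hypothetical helper, not part of Proxmox. It only wraps the rm commands
# quoted in this post. DRY_RUN defaults to 1, so nothing is deleted unless
# DRY_RUN=0 is set explicitly.

run_or_print() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

cleanup_cluster_files() {
    nodename="$1"
    # Refuse to continue without a node name, otherwise the last rm
    # would target the whole /etc/pve/nodes/ directory.
    if [ -z "$nodename" ]; then
        echo "usage: cleanup_cluster_files <nodename>" >&2
        return 1
    fi

    run_or_print rm -f /etc/pve/cluster.conf /etc/pve/corosync.conf
    run_or_print rm -f /etc/cluster/cluster.conf /etc/corosync/corosync.conf
    run_or_print rm /var/lib/pve-cluster/.pmxcfs.lockfile
    run_or_print rm -rf "/etc/pve/nodes/$nodename"
}
```

Calling `cleanup_cluster_files <nodename>` with the default settings only prints the four commands, so the list can be checked before anything is removed.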

Now I am a little bit stuck.

I do have both PVE hosts on the same version.

Time is synced and both are in the same time zone (if that makes a difference).

I tried to clean up all files I thought were involved.
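The version check mentioned above could be scripted roughly like this. It is a sketch under assumptions: the helper names `pve_version_field` and `same_pve_version` are hypothetical, and it assumes the line printed by `pveversion` has the form `pve-manager/<version>/<hash> (...)`, so that the version is the second `/`-separated field.

```shell
#!/bin/sh
# Hypothetical helpers for comparing two `pveversion` output lines.
# Assumed input format: "pve-manager/<version>/<hash> (running kernel: ...)"

# Print the second "/"-separated field, e.g. "7.4-16".
pve_version_field() {
    printf '%s\n' "$1" | cut -d/ -f2
}

# Succeed only if both lines carry the same version field.
same_pve_version() {
    [ "$(pve_version_field "$1")" = "$(pve_version_field "$2")" ]
}
```

With the output of `pveversion` from each node copied into two variables, `same_pve_version "$a" "$b" && echo "versions match"` confirms that both report the same release.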

It is always the same: when I think everything is clean and well prepared, I create a new cluster (fine). Then I add the node... stuck.

Hope you can help.
