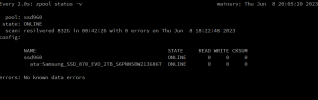

Could you run zpool status?

    root@mainsrv:/dev# zpool status

      pool: ssd960
     state: ONLINE
      scan: scrub repaired 0B in 00:41:56 with 0 errors on Sun May 14 01:05:57 2023
    config:

            NAME                                          STATE     READ WRITE CKSUM
            ssd960                                        ONLINE       0     0     0
              ata-KINGSTON_SA400S37960G_50026B778439F033  ONLINE       0     0     0

    errors: No known data errors