I'm still waiting for some kind of intelligent ballooning. Let's say I have this scenario:

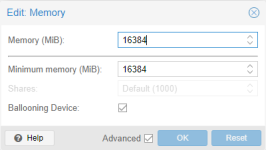

Host: 32GB RAM (4GB used by the host itself)

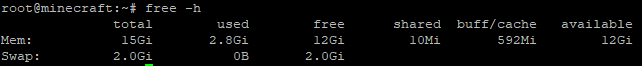

Guest1: 8GB RAM (max), 4GB RAM (min) -> 20% used, 80% cache, 0% free

Guest2: 8GB RAM (max), 4GB RAM (min) -> 80% used, 20% cache, 0% free

Guest3: 8GB RAM (max), 4GB RAM (min) -> 50% used, 0% cache, 50% free

In the example above, the RAM in use is 4GB (host) + 8GB (Guest1) + 8GB (Guest2) + 4GB (Guest3, only its used half) = 24GB, or 75% of the host's RAM.
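To make the arithmetic explicit, here is a small sketch of that calculation. It assumes the same accounting as above: a guest's used and cached memory is backed by host RAM, while its free memory is not (which is why Guest3 only counts with 4GB). The names are just for illustration.

```python
# Scenario from the post: host RAM and per-guest memory split (percent of the 8GB max).
HOST_TOTAL_GB = 32
HOST_OWN_GB = 4

GUESTS = {
    # name: (max_gb, used_pct, cache_pct, free_pct)
    "guest1": (8, 20, 80, 0),
    "guest2": (8, 80, 20, 0),
    "guest3": (8, 50, 0, 50),
}

def resident_gb(max_gb, used_pct, cache_pct):
    """Host RAM a guest occupies: used + cache (assumption: free guest pages are not backed)."""
    return max_gb * (used_pct + cache_pct) / 100

def host_usage_gb(host_own_gb, guests):
    """Host's own usage plus everything the guests keep resident."""
    return host_own_gb + sum(resident_gb(m, u, c) for m, u, c, _ in guests.values())

usage = host_usage_gb(HOST_OWN_GB, GUESTS)
print(f"{usage:g} GB used = {usage / HOST_TOTAL_GB:.0%} of host RAM")
# prints: 24 GB used = 75% of host RAM
```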

As long as the host's RAM usage doesn't exceed 80%, ballooning won't kick in, and each VM may use its full 8GB RAM.

Now let's say the host increases its own RAM usage by 2GB, from 4GB to 6GB. The host's RAM usage would now be 26GB, or 81.25%, and ballooning kicks in.
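The trigger condition can be sketched like this, assuming ballooning starts as soon as host usage goes above the 80% threshold mentioned above (strictly greater than, since it kicks in when usage "exceeds" 80%). The guests' 20GB of resident memory is taken from the scenario.

```python
HOST_TOTAL_GB = 32
BALLOON_THRESHOLD = 0.80  # host usage above which ballooning starts (80%, per the scenario)

def ballooning_active(host_used_gb, host_total_gb=HOST_TOTAL_GB, threshold=BALLOON_THRESHOLD):
    """True once the host's RAM usage exceeds the ballooning threshold."""
    return host_used_gb / host_total_gb > threshold

guests_resident_gb = 8 + 8 + 4  # guest1 + guest2 + guest3, as in the scenario
for host_own_gb in (4, 6):
    used = host_own_gb + guests_resident_gb
    print(f"host uses {host_own_gb}GB -> {used}GB total ({used / HOST_TOTAL_GB:.2%}), "
          f"ballooning active: {ballooning_active(used)}")
```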

If all VMs have the same ballooning ratio, ballooning will limit the RAM of guest1, guest2 and guest3 to 4GB each.

For guest3 that is no problem, because half of its RAM is free.

For guest1 that is also no problem, because 80% of its RAM is used for caching, and the VM drops caches in no time until it only has 20% used, 30% cache and 50% free (relative to the original 8GB).

But guest2 is a big problem. Here ballooning limits the RAM from 8GB to 4GB, but 6.4GB are used by processes, so many processes must be killed by the OOM killer until everything fits in 4GB again.
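A rough model of what a fixed 4GB limit does to each guest: cache can simply be dropped, so only process memory above the limit causes trouble. This is a simplified sketch of the scenario, not of how any hypervisor actually implements ballooning.

```python
# Fixed-ratio ballooning as described above: every guest is pushed to the same 4GB limit.
GUESTS = {
    # name: (max_gb, used_pct, cache_pct), percentages of the 8GB max
    "guest1": (8, 20, 80),
    "guest2": (8, 80, 20),
    "guest3": (8, 50, 0),
}
BALLOON_LIMIT_GB = 4

def fixed_ratio_outcome(guests, limit_gb):
    """Per guest, how many GB of process memory (not cache) no longer fit under the limit."""
    result = {}
    for name, (max_gb, used_pct, _cache_pct) in guests.items():
        used_gb = max_gb * used_pct / 100
        # Cache is dropped freely; only process memory above the limit forces swapping or OOM kills.
        result[name] = max(0.0, used_gb - limit_gb)
    return result

for name, excess in fixed_ratio_outcome(GUESTS, BALLOON_LIMIT_GB).items():
    print(f"{name}: {excess:.1f} GB of process memory over the limit")
```

Only guest2 ends up over the limit (6.4GB used vs. 4GB allowed, so 2.4GB of processes have to go), even though guest1 still holds plenty of droppable cache.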

It is very stupid that processes in guest2 get killed by ballooning while guest1 is wasting memory on caching at the same time.

As long as ballooning isn't intelligent enough to reduce the RAM dynamically based on usage (for example only -10% for guest2 but -70% for guest1 and -40% for guest3) instead of using fixed ratios, overcommitting RAM just doesn't work, and you shouldn't assign more RAM to VMs than your host has available.
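Here is a minimal sketch of what such usage-aware ballooning could look like. It is purely hypothetical (no hypervisor I know of is implied to work this way): the same 12GB that the fixed ratio takes back (3 guests x 4GB) is split so that free memory is reclaimed first, then page cache, and process memory is never touched. Like the -70% figure for guest1 above, it ignores the configured 4GB minimum.

```python
# Hypothetical usage-aware balloon: reclaim free memory first, then page cache,
# and report whatever could only be covered by taking process memory.
GUESTS = {
    # name: (max_gb, used_pct, cache_pct, free_pct)
    "guest1": (8, 20, 80, 0),
    "guest2": (8, 80, 20, 0),
    "guest3": (8, 50, 0, 50),
}
FREE, CACHE = 3, 2  # tuple indices into each guest spec

def usage_aware_reclaim(guests, target_gb):
    """Split target_gb of balloon inflation across guests, cheapest memory first.

    Returns (GB reclaimed per guest, GB still missing without touching process memory).
    """
    reclaimed = {name: 0.0 for name in guests}
    remaining = target_gb
    for kind in (FREE, CACHE):  # free pages cost nothing, cache costs a little performance
        for name, spec in guests.items():
            take = min(spec[0] * spec[kind] / 100, remaining)
            reclaimed[name] += take
            remaining -= take
    return reclaimed, remaining

# Same total as the fixed-ratio case: every guest going from 8GB to 4GB is 3 x 4GB = 12GB.
cuts, shortfall = usage_aware_reclaim(GUESTS, 12)
for name, gb in cuts.items():
    print(f"{name}: -{gb:.1f} GB ({gb / GUESTS[name][0]:.0%})")
print(f"process memory that would still have to go: {shortfall:.1f} GB")
```

With this ordering guest1 gives up 80%, guest2 only 20% and guest3 50%, and no process in guest2 has to be killed. The exact percentages differ from the example above; the point is only that the cut follows what each guest can actually spare.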

The only option I see is to buy more RAM or to limit the RAM of each VM to the point where it is just enough to work most of the time. But then there is no headroom, and every peak hits the limit and causes a lot of swapping.

I really miss an option in Linux to limit the cache size, like I can with ZFS's ARC via the "zfs_arc_max" option. Completely dropping the cache is bad, because caching increases performance. But an unlimited cache wastes a lot of RAM that other VMs could make better use of. It would be so great if I could just limit the page cache to 1 or 2GB.
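To at least see how much of a guest's RAM is page cache, and how far it is above the 1 to 2GB I'd like as a cap, the "Cached" field of /proc/meminfo can be read. This sketch only measures; it does not limit anything. CACHE_LIMIT_KB is a made-up value for illustration, not a kernel setting.

```python
# Measure page cache from /proc/meminfo and compare it with a hypothetical cap.
from pathlib import Path

CACHE_LIMIT_KB = 2 * 1024 * 1024  # hypothetical 2GB cap (illustration only)

def parse_meminfo(text):
    """Return {field: value} for /proc/meminfo-style lines ("Key:   value kB")."""
    fields = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[key.strip()] = int(parts[0])  # values are in kB (or plain counts)
    return fields

def cache_excess_kb(fields, limit_kb=CACHE_LIMIT_KB):
    """Page cache above the cap in kB; 0 if the cache is within the cap."""
    return max(0, fields.get("Cached", 0) - limit_kb)

if __name__ == "__main__" and Path("/proc/meminfo").exists():
    info = parse_meminfo(Path("/proc/meminfo").read_text())
    print(f"Cached: {info.get('Cached', 0) / 1024**2:.2f} GB, "
          f"over the cap by {cache_excess_kb(info) / 1024**2:.2f} GB")
```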