Hello. I have a problem with a two-node Proxmox cluster (version 7.4.17). It runs over 10 VMs plus a MikroTik CHR that gives the VMs internet access. Replication of the VMs works fine, but replication of the MikroTik CHR fails. When I open the job in the Replication menu, it shows the following error (which is also written to the journalctl log):

Code:

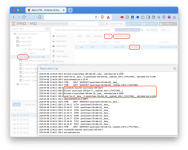

2025-04-07 13:08:29 110-0: start replication job

2025-04-07 13:08:29 110-0: guest => VM 110, running => 2341709

2025-04-07 13:08:29 110-0: volumes => ssd:base-106-disk-0/vm-110-disk-0

2025-04-07 13:08:30 110-0: create snapshot '__replicate_110-0_1744024109__' on ssd:base-106-disk-0/vm-110-disk-0

2025-04-07 13:08:30 110-0: using secure transmission, rate limit: none

2025-04-07 13:08:30 110-0: full sync 'ssd:base-106-disk-0/vm-110-disk-0' (__replicate_110-0_1744024109__)

2025-04-07 13:08:31 110-0: full send of ssd/vm-110-disk-0@__replicate_110-0_1744024109__ estimated size is 135M

2025-04-07 13:08:31 110-0: total estimated size is 135M

2025-04-07 13:08:32 110-0: cannot receive: local origin for clone ssd/vm-110-disk-0@__replicate_110-0_1744024109__ does not exist

2025-04-07 13:08:32 110-0: cannot open 'ssd/vm-110-disk-0': dataset does not exist

2025-04-07 13:08:32 110-0: command 'zfs recv -F -- ssd/vm-110-disk-0' failed: exit code 1

2025-04-07 13:08:32 110-0: warning: cannot send 'ssd/vm-110-disk-0@__replicate_110-0_1744024109__': signal received

2025-04-07 13:08:32 110-0: cannot send 'ssd/vm-110-disk-0': I/O error

2025-04-07 13:08:32 110-0: command 'zfs send -Rpv -- ssd/vm-110-disk-0@__replicate_110-0_1744024109__' failed: exit code 1

2025-04-07 13:08:32 110-0: delete previous replication snapshot '__replicate_110-0_1744024109__' on ssd:base-106-disk-0/vm-110-disk-0

2025-04-07 13:08:32 110-0: end replication job with error: command 'set -o pipefail && pvesm export ssd:base-106-disk-0/vm-110-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_110-0_1744024109__ | /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=cluster01' root@172.16.1.1 -- pvesm import ssd:base-106-disk-0/vm-110-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_110-0_1744024109__ -allow-rename 0' failed: exit code 1

VM's conf file (/etc/pve/qemu-server/110.conf):

Code:

balloon: 0

boot: order=scsi0;ide2;net0

cores: 4

ide2: none,media=cdrom

memory: 2048

meta: creation-qemu=7.2.0,ctime=1698644063

name: 172.16.1.220-CHR

net0: virtio=9E:55:A7:3B:54:32,bridge=vmbr1,firewall=1

net1: virtio=06:AE:AD:55:DA:56,bridge=vmbr0,firewall=1

numa: 0

ostype: l26

scsi0: ssd:base-106-disk-0/vm-110-disk-0,cache=writethrough,iothread=1,size=2G

scsihw: virtio-scsi-single

smbios1: uuid=d1daddb1-4062-4854-86a5-6589975e9d2a

sockets: 1

vmgenid: f9c01868-093b-48e1-9225-9f9f1f720e4f

Data under the Options menu:

What could be the problem?
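
One detail that may be relevant: the volume ID in both the log and the config, ssd:base-106-disk-0/vm-110-disk-0, has the form Proxmox uses for linked clones, which would make vm-110-disk-0 depend on the template disk base-106-disk-0. The "local origin for clone ... does not exist" message would be consistent with that base disk being absent on the target node, but that is an assumption and not something confirmed here. Below is a minimal Python sketch that splits such a volume ID into its parts; the names parse_volid and VolumeId are hypothetical helpers for illustration, not part of any Proxmox API.

```python
import re
from typing import NamedTuple, Optional


class VolumeId(NamedTuple):
    """Parts of a Proxmox-style volume ID (hypothetical helper type)."""
    storage: str           # storage name, e.g. "ssd"
    base: Optional[str]    # template disk a linked clone depends on, if any
    volume: str            # the guest's own disk, e.g. "vm-110-disk-0"


# Assumed pattern: "<storage>:[base-<vmid>-disk-<n>/]<vm|base>-<vmid>-disk-<n>"
_VOLID_RE = re.compile(
    r"^(?P<storage>[^:]+):"
    r"(?:(?P<base>base-\d+-disk-\d+)/)?"
    r"(?P<volume>(?:vm|base)-\d+-disk-\d+)$"
)


def parse_volid(volid: str) -> VolumeId:
    """Split a volume ID into storage, optional base disk, and volume name."""
    match = _VOLID_RE.match(volid)
    if match is None:
        raise ValueError(f"unrecognized volume ID: {volid!r}")
    # An unmatched optional group yields None, meaning "not a linked clone".
    return VolumeId(match["storage"], match["base"], match["volume"])


vol = parse_volid("ssd:base-106-disk-0/vm-110-disk-0")
if vol.base is not None:
    print(f"{vol.volume} looks like a linked clone of {vol.base} on storage {vol.storage}")
else:
    print(f"{vol.volume} has no base disk in its volume ID")
```

If the disk really is a linked clone, running zfs get origin ssd/vm-110-disk-0 on the source node should show the base snapshot it was cloned from, and zfs list -t all on the target node would show whether that base dataset exists there.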