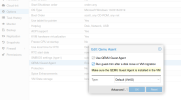

We shut down a Windows VM to switch the bus/interface of its RBD disk from SCSI to SATA.

Everything worked well. The next day, after our upgrade maintenance, we started switching the disk back from SATA to SCSI and noticed something:

The drive was showing 5 TB!

We first thought this was just a display bug, but no: the disk now really shows up as a 5 TB drive, both in the backups and in the disk configuration.

The node was running 7.4.3, and I don't see any task log related to this apart from the one for updating the disk interface.

This is a serious one.