We are just finishing up our in-place migration from vSphere to Proxmox. The usual story: Broadcom's licensing was crazy, so they got the finger. Doing it in-place was a really harebrained scheme, but thanks to lots of planning it went pretty smoothly. The only real hiccup was that one of the caddies holding the SSDs we moved from the storage array to the front of the servers got broken.

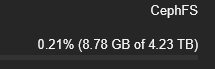

Anyway, one of the last jobs is to sort out ISO storage. The boot drives (think the Lenovo equivalent of the Dell BOSS) are only 240 GB, so there is not really enough room on them for ISOs. We have 140 GB of ISOs that were in a folder on a VMFS file system. I created a CephFS on the Ceph storage and copied the ISOs in. I then noticed the CephFS is 1.4 TB in size, which is ridiculous for ISO storage and looks like it is gobbling up precious SSD storage meant for the VMs. However, the usage on the Ceph dashboard only went up by the 140 GB of ISOs I copied in.

Is there a way to control the size of a CephFS created on top of the Ceph RBD storage, or is the spare space in the CephFS still available for VMs? I am not really a fan of over-committing storage, because it can go horribly wrong if you use it all up, so I would prefer to limit the CephFS to something more suitable and have that storage committed, if that is possible.
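For reference, here is a minimal sketch of one option, assuming CephFS directory quotas are the right tool here. CephFS exposes a per-directory limit through the `ceph.quota.max_bytes` virtual extended attribute (the same thing `setfattr -n ceph.quota.max_bytes -v <bytes> <dir>` sets). The script name and helper functions are hypothetical and only for illustration. As far as I understand it, a quota only caps how much the directory can hold. It does not reserve space in the pool, so the remaining free space would still be shared with the VMs.

```python
import os
import sys

# CephFS exposes directory quotas as a virtual extended attribute; the value
# is the limit in bytes written as a decimal string (0 removes the quota).
QUOTA_XATTR = "ceph.quota.max_bytes"


def quota_value(size_gib: int) -> bytes:
    """Encode a size in GiB as the decimal byte string CephFS expects."""
    if size_gib < 0:
        raise ValueError("quota size must be >= 0 (0 removes the quota)")
    return str(size_gib * 1024**3).encode("ascii")


def set_quota(path: str, size_gib: int) -> None:
    """Set a byte quota on a CephFS directory (Linux only; the Ceph client
    must be allowed to set quotas)."""
    os.setxattr(path, QUOTA_XATTR, quota_value(size_gib))


def get_quota(path: str) -> int:
    """Read the quota back from the directory, in bytes."""
    return int(os.getxattr(path, QUOTA_XATTR))


if __name__ == "__main__":
    # Hypothetical usage: cephfs_quota.py <cephfs-dir> <size-GiB>
    if len(sys.argv) != 3:
        print(f"usage: {sys.argv[0]} <cephfs-dir> <size-GiB>")
    else:
        target, gib = sys.argv[1], int(sys.argv[2])
        set_quota(target, gib)
        print(f"{target}: quota = {get_quota(target)} bytes")
```

Usage, assuming the storage is mounted at the Proxmox default of `/mnt/pve/<storage-id>` with a storage ID of `cephfs` (adjust to suit): `python3 cephfs_quota.py /mnt/pve/cephfs 200` would cap it at 200 GiB, leaving headroom above the 140 GB of ISOs.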