Hi,

While copying data to a CephFS-mounted folder, I get tons of kernel messages like this:

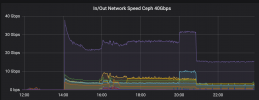

Code:

[52122.119364] libceph: osd34 (1)10.0.40.95:6881 bad crc/signature

[52122.119599] libceph: osd9 (1)10.0.40.98:6801 bad crc/signature

[52122.119722] libceph: read_partial_message 00000000a1a2de9f signature check failed

[52122.119993] libceph: osd83 (1)10.0.40.38:6826 bad crc/signature

[52122.121751] libceph: read_partial_message 00000000e45aca08 signature check failed

[52122.122034] libceph: osd60 (1)10.0.40.37:6814 bad crc/signature

[52122.127937] libceph: read_partial_message 00000000e11eff1b signature check failed

[52122.127939] libceph: read_partial_message 00000000bba65e30 signature check failed

[52122.127941] libceph: read_partial_message 000000000445f988 signature check failed

[52122.127941] libceph: read_partial_message 00000000e873cd06 signature check failed

[52122.127948] libceph: osd8 (1)10.0.40.98:6819 bad crc/signature

[52122.127949] libceph: osd78 (1)10.0.40.38:6865 bad crc/signature

[52122.128044] libceph: read_partial_message 00000000dd903166 signature check failed

[52122.128054] libceph: osd38 (1)10.0.40.93:6816 bad crc/signature

[52122.128258] libceph: osd13 (1)10.0.40.97:6809 bad crc/signature

[52122.128521] libceph: osd33 (1)10.0.40.95:6873 bad crc/signature

[52122.128529] libceph: read_partial_message 00000000d5312939 signature check failed

[52122.128795] libceph: read_partial_message 0000000056365bbb signature check failed

[52122.129008] libceph: osd66 (1)10.0.40.37:6804 bad crc/signature

[52122.129251] libceph: osd17 (1)10.0.40.97:6841 bad crc/signature

Networking: the copy runs at only 30-40 Mbps, although the backend network supports up to 40 Gbps.
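In case it helps narrow things down, here is a rough sketch for tallying the `bad crc/signature` lines per OSD and per host IP, to see whether the errors cluster on particular nodes or are spread across the whole cluster. It assumes kernel log lines in exactly the format shown above; the function name `tally_bad_crc` is just illustrative.

```python
import re
from collections import Counter

# Matches kernel log lines such as:
# [52122.119364] libceph: osd34 (1)10.0.40.95:6881 bad crc/signature
BAD_CRC = re.compile(
    r"libceph: (osd\d+) \(\d+\)(\d+\.\d+\.\d+\.\d+):\d+ bad crc/signature"
)

def tally_bad_crc(lines):
    """Count 'bad crc/signature' messages per OSD and per host IP."""
    per_osd = Counter()
    per_host = Counter()
    for line in lines:
        m = BAD_CRC.search(line)
        if m:
            per_osd[m.group(1)] += 1   # e.g. "osd34"
            per_host[m.group(2)] += 1  # e.g. "10.0.40.95"
    return per_osd, per_host

# Small demonstration using a few lines from the log above.
sample = [
    "[52122.119364] libceph: osd34 (1)10.0.40.95:6881 bad crc/signature",
    "[52122.119599] libceph: osd9 (1)10.0.40.98:6801 bad crc/signature",
    "[52122.119722] libceph: read_partial_message 00000000a1a2de9f signature check failed",
    "[52122.127948] libceph: osd8 (1)10.0.40.98:6819 bad crc/signature",
]
per_osd, per_host = tally_bad_crc(sample)
for host, count in per_host.most_common():
    print(host, count)
```

The same function could be fed the lines of a saved `dmesg` dump instead of the sample list. In the excerpt above the messages come from many different OSDs and hosts, which a tally like this would make easier to confirm over a longer log.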

The issue affects only CephFS: if I copy to an S3 bucket, I have no problems.

Stopping the transfers does not stop the network traffic; only a full server reboot "solves" the issue.

Any ideas?