Hi everyone.

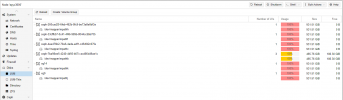

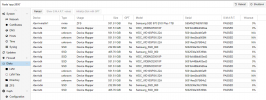

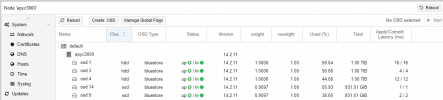

I'm struggling to use Ceph on multipath devices with the tools PVE provides.

My setup is a 3-node cluster, and each node has its own dedicated FC storage.

Each server has 2 HBAs for hardware redundancy.

Multipath is configured and running, and the device-mapper block devices are present.

Code:

    mpathe (36001b4d0e941057a0000000000000000) dm-4 SEAGATE,ST2400MM0129
    size=2.2T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
    `-+- policy='round-robin 0' prio=50 status=active
      |- 0:0:0:2 sdh 8:112 active ready running
      `- 2:0:0:2 sdv 65:80 active ready running
    mpathd (36001b4d0e93620000000000000000000) dm-3 SEAGATE,ST2400MM0129
    size=2.2T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
    `-+- policy='round-robin 0' prio=50 status=active
      |- 0:0:0:11 sdq 65:0 active ready running
      `- 2:0:0:11 sdae 65:224 active ready running
    mpathc (36001b4d0e94100700000000000000000) dm-2 SEAGATE,ST2400MM0129
    size=2.2T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
    `-+- policy='round-robin 0' prio=50 status=active
      |- 0:0:0:10 sdp 8:240 active ready running
      `- 2:0:0:10 sdad 65:208 active ready running
    mpathb (36001b4d0e940ac000000000000000000) dm-1 SEAGATE,ST2400MM0129
    size=2.2T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
    `-+- policy='round-robin 0' prio=50 status=active
      |- 0:0:0:1 sdg 8:96 active ready running
      `- 2:0:0:1 sdu 65:64 active ready running
    mpathn (36001b4d0e94107a30000000000000000) dm-13 SEAGATE,ST2400MM0129
    size=2.2T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
    `-+- policy='round-robin 0' prio=50 status=active
      |- 0:0:0:9 sdo 8:224 active ready running
      `- 2:0:0:9 sdac 65:192 active ready running
    mpatha (36001b4d0e94104000000000000000000) dm-0 SEAGATE,ST2400MM0129
    size=2.2T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
    `-+- policy='round-robin 0' prio=50 status=active
      |- 0:0:0:0 sdf 8:80 active ready running
      `- 2:0:0:0 sdt 65:48 active ready running
    ...

So far so good.
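With this many maps it is easy to lose track of which `sdX` disks belong to which `dm-N` node. The following is a rough sketch, not a PVE tool, that condenses `multipath -ll`-style output into one line per map. The function name `summarise_multipath` is made up for illustration, and it assumes maps are listed with an alias first (as in the output above), which may not hold if `user_friendly_names` is off.

```shell
# Condense `multipath -ll`-style text (read from stdin) into lines of the form
#   <alias> <dm node> <comma-separated path devices>
# Assumption: each map header looks like "alias (wwid) dm-N VENDOR,PRODUCT".
summarise_multipath() {
  awk '
    # Map header, e.g. "mpatha (3600...) dm-0 SEAGATE,ST2400MM0129"
    $2 ~ /^[(].*[)]$/ && $3 ~ /^dm-[0-9]+$/ {
      if (name != "") print name, dm, paths
      name = $1; dm = $3; paths = ""
      next
    }
    # Path line, e.g. "|- 0:0:0:0 sdf 8:80 active ready running":
    # the field after the H:C:T:L address is the path device.
    {
      for (i = 1; i < NF; i++) {
        if ($i ~ /^[0-9]+:[0-9]+:[0-9]+:[0-9]+$/) {
          paths = (paths == "" ? $(i + 1) : paths "," $(i + 1))
          break
        }
      }
    }
    END { if (name != "") print name, dm, paths }
  '
}

# Usage on a live system (requires multipath-tools):
#   multipath -ll | summarise_multipath
```

Fed the `mpatha` stanza above, this would print a single line naming `mpatha`, `dm-0` and its two paths, which makes it easier to tell which entries in the web UI disk list are really the same LUN.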

The first thing I noticed is that the web UI doesn't show the device-mapper block devices; it only shows the individual disks, which is quite annoying.

I would expect to see the dm devices, with the disks that are part of a multipath map filtered out.

Anyway, I set up the Ceph cluster and now I'm trying to add the dm devices.

The web UI doesn't let me select any of the dm devices, so no OSD creation is possible.

So I tried `pveceph osd create /dev/dm-0`, but it fails.

Bash:

    -> pveceph osd create /dev/dm-0
    unable to get device info for '/dev/dm-0'

I read somewhere that this may be due to blacklisting of device paths. Why are they blacklisted, and why isn't this configurable?
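To see what the kernel thinks `/dev/dm-0` is, the device-mapper UUID in sysfs is a quick check: multipath maps carry a UUID starting with `mpath-`, LVM volumes one starting with `LVM-`. The sketch below is only a diagnostic. `dm_kind` and its optional second argument (an alternative sysfs root, handy for dry runs) are made-up names, and it says nothing about why `pveceph` rejects the device.

```shell
# Classify a device-mapper node by its DM UUID prefix.
# $1: device name or path, e.g. "dm-0" or "/dev/dm-0"
# $2: sysfs root (hypothetical override for testing), defaults to /sys
dm_kind() {
  local dev="${1##*/}"
  local root="${2:-/sys}"
  local uuid_file="$root/block/$dev/dm/uuid"

  # No dm/uuid attribute means this is not a device-mapper node at all.
  if [ ! -r "$uuid_file" ]; then
    echo "not-dm"
    return 0
  fi

  case "$(cat "$uuid_file")" in
    mpath-*) echo "multipath" ;;
    LVM-*)   echo "lvm" ;;
    *)       echo "other-dm" ;;
  esac
}

# Usage on a live system:
#   dm_kind /dev/dm-0
```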

Next I tried the native Ceph tools, but that fails too.

Bash:

    -> ceph-volume lvm create --data /dev/dm-0
    Running command: /usr/bin/ceph-authtool --gen-print-key
    Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new a3b9ecd5-20bb-4503-ba0b-f751e12e3e88
    stderr: [errno 2] error connecting to the cluster
    --> RuntimeError: Unable to create a new OSD id

Not really helpful. I looked at the paths and, voilà, the keyring doesn't exist.

So I exported it.

Bash:

    -> ceph auth get client.bootstrap-osd -o /var/lib/ceph/bootstrap-osd/ceph.keyring

Retry:

Bash:

    -> ceph-volume lvm create --data /dev/dm-0
    Running command: /usr/bin/ceph-authtool --gen-print-key
    Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 062eadbd-fa4d-4945-be1c-5c49c85b3208
    --> Was unable to complete a new OSD, will rollback changes
    Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring osd purge-new osd.0 --yes-i-really-mean-it
    stderr: 2020-06-04 13:05:27.729 7f6dbc620700 -1 auth: unable to find a keyring on /etc/pve/priv/ceph.client.bootstrap-osd.keyring: (2) No such file or directory
    2020-06-04 13:05:27.729 7f6dbc620700 -1 AuthRegistry(0x7f6db40817b8) no keyring found at /etc/pve/priv/ceph.client.bootstrap-osd.keyring, disabling cephx
    stderr: purged osd.0
    --> RuntimeError: Cannot use device (/dev/dm-0). A vg/lv path or an existing device is needed

I exported the keyring again, this time to '/etc/pve/priv/ceph.client.bootstrap-osd.keyring', but the problem persists.
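The two logs so far mention two different keyring locations for `client.bootstrap-osd`. As a small convenience, the sketch below checks both and prints the export command (the same `ceph auth get ... -o` call used earlier) for whichever is missing. It only prints, it does not run anything. `missing_bootstrap_keyrings` and its optional path prefix are illustrative names, and having both keyrings in place does not by itself make `ceph-volume` accept a raw multipath device.

```shell
# Print the export command for each bootstrap-osd keyring that is missing or empty.
# $1: optional path prefix (hypothetical, only useful for trying this in a scratch dir)
missing_bootstrap_keyrings() {
  local prefix="${1:-}"
  local path
  # Both locations are taken from the ceph-volume output in this thread.
  for path in \
    /var/lib/ceph/bootstrap-osd/ceph.keyring \
    /etc/pve/priv/ceph.client.bootstrap-osd.keyring
  do
    if [ ! -s "$prefix$path" ]; then
      echo "ceph auth get client.bootstrap-osd -o $path"
    fi
  done
}

# Usage on a node: review the printed commands, then run them by hand.
#   missing_bootstrap_keyrings
```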

Bash:

    -> ceph-volume lvm create --data /dev/dm-0
    Running command: /usr/bin/ceph-authtool --gen-print-key
    Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 82fe501a-22de-45df-bbcc-39c0e1704ba1
    --> Was unable to complete a new OSD, will rollback changes
    Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring osd purge-new osd.0 --yes-i-really-mean-it
    stderr: purged osd.0
    --> RuntimeError: Cannot use device (/dev/dm-0). A vg/lv path or an existing device is needed

Ok, so I read about Ceph and multipath: https://docs.ceph.com/docs/mimic/ceph-volume/lvm/prepare/#multipath-support

"Devices that come from multipath are not supported as-is. The tool will refuse to consume a raw multipath device and will report a message like ...

If a multipath device is already a logical volume it should work, given that the LVM configuration is done correctly to avoid issues."

Ok, so I need to create the VG manually.

/etc/lvm/lvm.conf has multipath_component_detection enabled, so in theory LVM should be aware of the situation.
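For anyone wanting to double-check that setting on their own nodes, here is a minimal sketch that reads the last uncommented `multipath_component_detection` assignment from an lvm.conf-style file. The function name `mp_component_detection` is made up, and the parser is deliberately naive: it ignores which section the line sits in and reports `unset` when no explicit line exists rather than guessing the built-in default.

```shell
# Print the value of multipath_component_detection from an lvm.conf-style file.
# $1: config file, defaults to /etc/lvm/lvm.conf
mp_component_detection() {
  local conf="${1:-/etc/lvm/lvm.conf}"
  awk -F= '
    # Skip comment lines so a commented-out example does not count.
    /^[ \t]*#/ { next }
    # Match "multipath_component_detection = N" and keep the last value seen.
    $1 ~ /^[ \t]*multipath_component_detection[ \t]*$/ {
      gsub(/[ \t]/, "", $2)
      val = $2
    }
    END { print (val == "" ? "unset" : val) }
  ' "$conf"
}

# Usage on a node:
#   mp_component_detection            # reads /etc/lvm/lvm.conf
```

A value of `1` means LVM is told to skip disks that are components of a multipath map, so the PV should be found on the dm device rather than on `sdf` or `sdt`.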

Still, the web UI doesn't offer the dm devices in the LVM section.

I created a PV/VG/LV on dm-0.

Code:

    pvcreate --metadatasize 250k -y -ff /dev/dm-0
    vgcreate vg0 /dev/dm-0
    lvcreate -n lv0 -l 100%FREE vg0

Finally I can create an OSD.

Code:

    -> ceph-volume lvm prepare --data vg0/lv0
    Running command: /usr/bin/ceph-authtool --gen-print-key
    Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new e1c9a9c5-e9b6-447f-ba6e-3b60a6f8e5ff
    Running command: /usr/bin/ceph-authtool --gen-print-key
    Running command: /usr/bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0
    --> Executable selinuxenabled not in PATH: /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
    Running command: /usr/bin/chown -h ceph:ceph /dev/vg0/lv0
    Running command: /usr/bin/chown -R ceph:ceph /dev/dm-14
    Running command: /usr/bin/ln -s /dev/vg0/lv0 /var/lib/ceph/osd/ceph-0/block
    Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap
    stderr: got monmap epoch 2
    Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQCn29heqI3xFxAA1OH32hEOZeNpzPGDdIdH0A==
    stdout: creating /var/lib/ceph/osd/ceph-0/keyring
    stdout: added entity osd.0 auth(key=AQCn29heqI3xFxAA1OH32hEOZeNpzPGDdIdH0A==)
    Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring
    Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/
    Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid e1c9a9c5-e9b6-447f-ba6e-3b60a6f8e5ff --setuser ceph --setgroup ceph
    --> ceph-volume lvm prepare successful for: vg0/lv0
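With six maps per node, the PV/VG/LV steps followed by the OSD creation get repetitive. A possible way to script them is sketched below as a dry run that only prints the commands for one map. Two things differ from what was typed above and are assumptions on my part: it addresses the map as `/dev/mapper/<alias>` rather than `/dev/dm-N` (the `dm-N` numbers are not guaranteed to stay the same across reboots), and it leaves out the `--metadatasize` and `-y -ff` options so nothing is forced. `osd_lvm_plan` is a made-up name. `ceph-volume lvm create` is used as the final step because it runs prepare and activate in one go.

```shell
# Print (do not execute) the commands to put one multipath map under LVM
# and hand the resulting LV to ceph-volume.
# $1: multipath alias (e.g. mpatha)  $2: VG name  $3: LV name
osd_lvm_plan() {
  local map="$1"
  local vg="$2"
  local lv="$3"
  local dev="/dev/mapper/$map"

  printf 'pvcreate %s\n' "$dev"
  printf 'vgcreate %s %s\n' "$vg" "$dev"
  # %% is a literal percent sign in the printf format.
  printf 'lvcreate -n %s -l 100%%FREE %s\n' "$lv" "$vg"
  printf 'ceph-volume lvm create --data %s/%s\n' "$vg" "$lv"
}

# Usage: review the plan first, then run the lines by hand or pipe them to sh.
#   osd_lvm_plan mpathb vg1 lv1
```

Printing instead of executing is a deliberate choice here: `pvcreate` on the wrong device is destructive, so it seems safer to eyeball the plan for each map before running it.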

Bash:

    ceph-volume lvm list
    ====== osd.0 =======
      [block]       /dev/vg0/lv0
          block device              /dev/vg0/lv0
          block uuid                45OjiN-ZBFl-Gnci-B7qS-lfDi-fWoZ-c1HdiS
          cephx lockbox secret
          cluster fsid              ed5ba9ab-6eb4-4ad2-aac9-8265e0cf5a02
          cluster name              ceph
          crush device class        None
          encrypted                 0
          osd fsid                  e1c9a9c5-e9b6-447f-ba6e-3b60a6f8e5ff
          osd id                    0
          type                      block
          vdo                       0
          devices                   /dev/mapper/mpatha

At this point no OSD is visible in the web UI.

I manually activated the OSD.

Bash:

    -> ceph-volume lvm activate osd.0
    Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
    Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/vg0/lv0 --path /var/lib/ceph/osd/ceph-0 --no-mon-config
    Running command: /usr/bin/ln -snf /dev/vg0/lv0 /var/lib/ceph/osd/ceph-0/block
    Running command: /usr/bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block
    Running command: /usr/bin/chown -R ceph:ceph /dev/dm-14
    Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
    Running command: /usr/bin/systemctl enable ceph-volume@lvm-0-e1c9a9c5-e9b6-447f-ba6e-3b60a6f8e5ff
    stderr: Created symlink /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-0-e1c9a9c5-e9b6-447f-ba6e-3b60a6f8e5ff.service → /lib/systemd/system/ceph-volume@.service.
    Running command: /usr/bin/systemctl enable --runtime ceph-osd@0
    stderr: Created symlink /run/systemd/system/ceph-osd.target.wants/ceph-osd@0.service → /lib/systemd/system/ceph-osd@.service.
    Running command: /usr/bin/systemctl start ceph-osd@0
    --> ceph-volume lvm activate successful for osd ID: 0

And finally the OSD is visible in the web UI.
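When several OSDs have been prepared this way, the activation step can be derived from the `ceph-volume lvm list` output instead of being typed per OSD. The sketch below pulls the `osd id` and `osd fsid` pairs out of that text and prints one `ceph-volume lvm activate <id> <fsid>` line each. `activate_commands` is an invented name, and it only parses the plain-text layout shown in this thread, so treat it as a starting point rather than something robust.

```shell
# Read `ceph-volume lvm list` text on stdin and print one activate command per OSD.
activate_commands() {
  awk '
    $1 == "osd" && $2 == "fsid" { fsid = $3 }
    $1 == "osd" && $2 == "id"   { id = $3 }
    # Once both values of a section are known, emit the command once per OSD id
    # (an OSD with separate block/db sections would otherwise be listed twice).
    id != "" && fsid != "" {
      if (!seen[id]++) print "ceph-volume lvm activate " id " " fsid
      id = ""; fsid = ""
    }
  '
}

# Usage on a node: review the output, then run it.
#   ceph-volume lvm list | activate_commands
```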

Honestly guys, this is really really really a pain in the ...

Multipath is something absolutely common in an enterprise infrastructure and Proxmox should be able to handle this type of setup.

My 2 cents.