We have 4 nodes, each with a single PCIe card containing 8 x 4TB NVMe drives, so 32TB x 4 nodes = 128TB of raw storage to allocate to Ceph.
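As a quick sanity check on the raw figure, here is a minimal sketch of the unit conversion, assuming the drives are sized in decimal terabytes (as drives are usually marketed) while `ceph df` reports binary tebibytes. The constant names are just for illustration.

```python
# Raw capacity as described in the post: 4 nodes x 8 drives x 4 TB each.
NODES = 4
DRIVES_PER_NODE = 8
DRIVE_TB = 4   # assumption: decimal terabytes per drive

TB = 10**12    # bytes in a decimal terabyte
TIB = 2**40    # bytes in a binary tebibyte

raw_tb = NODES * DRIVES_PER_NODE * DRIVE_TB
raw_tib = raw_tb * TB / TIB

print(f"raw capacity: {raw_tb} TB = {raw_tib:.1f} TiB")
```

Under that assumption the 128TB of drives comes out at roughly 116 TiB, which lines up with the SIZE column in the RAW STORAGE section of the `ceph df` output in this post.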

Our RBD pool (datastore01) is used for our VM backend storage, and I thought I'd allocated all available space to this pool when I set it up.

The pool is configured (or should be) in an N+2 configuration, so we could lose a maximum of 2 PCIe cards and not lose data (don't worry, we intend to have a 12 node cluster very soon).

My colleague then created another CephFS pool to store ISOs on the shared storage, so VMs would still migrate if an engineer accidentally left a CD/DVD drive attached (migrations previously failed because the ISOs were local to the host). However, it looks like the CephFS has been allocated 23TiB of the total storage, and I'm hoping there is a way to steal the majority of that space back for the datastore01 pool. The cephfs/iso pool only needs 200GB MAX!

The output of `ceph df` is confusing me:

Does this output mean datastore01 has 11TiB of data stored in it, or 45TiB as shown in the USED column? USED (45TiB) plus MAX AVAIL (16TiB) = 61TiB, which was kind of what I expected to be usable out of the 128TB of raw storage with the minimum size set to 2, but I have no idea where it's got the 45TiB used figure from.
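To make the comparison concrete, here is a small sketch of the arithmetic on the figures quoted from `ceph df`. It assumes STORED is the data as written by clients, USED is the raw space consumed across all OSDs (replicas included), and MAX AVAIL is an estimate of how much more client data the pool can take. The variable names are illustrative, and the replica counts it hints at are an inference, not something read from the pool settings.

```python
# Figures copied from the `ceph df` POOLS section in this post.
datastore_stored_tib = 11   # STORED for datastore01
datastore_used_tib = 45     # USED for datastore01
datastore_max_avail_tib = 16

cephfs_stored_gib = 26      # STORED for cephfs_data
cephfs_used_gib = 79        # USED for cephfs_data

# Assumption: for a replicated pool, USED is roughly STORED x replica count.
datastore_ratio = datastore_used_tib / datastore_stored_tib
cephfs_ratio = cephfs_used_gib / cephfs_stored_gib

print(f"datastore01 USED / STORED = {datastore_ratio:.2f}")
print(f"cephfs_data USED / STORED = {cephfs_ratio:.2f}")

# Assumption: MAX AVAIL is already expressed in client-visible terms,
# so it is added to STORED (not to USED) to estimate pool capacity.
logical_capacity_tib = datastore_stored_tib + datastore_max_avail_tib
print(f"datastore01 estimated logical capacity: {logical_capacity_tib} TiB")
```

If those assumptions hold, the ratios come out close to 4 for datastore01 and close to 3 for cephfs_data, which would suggest the 45TiB is the 11TiB of VM data multiplied by the number of copies kept. Adding USED to MAX AVAIL would then be mixing a raw figure with a logical one.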

UPDATE:

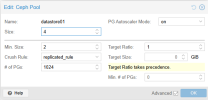

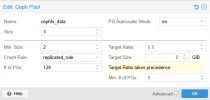

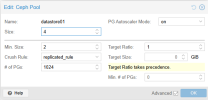

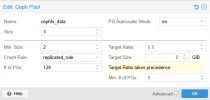

Okay, looking at the CephFS pool settings, I'm going to assume the settings are the cause, but it would be good to get recommended settings for my setup:

I'm guessing Size = 1, Min Size = 1, considering it's just ISOs being stored?
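For a sense of scale, here is a rough sketch of how much raw space the ISO data would consume at different Size values. It assumes a replicated pool and ignores metadata and allocation overhead. `raw_footprint_gb` is a hypothetical helper, not a Ceph API.

```python
ISO_DATA_GB = 200  # the maximum the ISO pool is expected to hold, per the post


def raw_footprint_gb(data_gb, size):
    """Raw space consumed when `data_gb` of data is kept as `size` replicas."""
    return data_gb * size


for size in (1, 2, 3):
    print(f"size={size}: {raw_footprint_gb(ISO_DATA_GB, size)} GB raw")
```

Even at three copies the footprint stays well under 1TB of the raw capacity, so the choice of Size here is mostly about redundancy. With Size = 1 there is only a single copy, so losing the OSD that holds it loses those ISOs.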


```
root@mepprox01:~# ceph df
--- RAW STORAGE ---
CLASS     SIZE   AVAIL    USED  RAW USED  %RAW USED
nvme   116 TiB  71 TiB  46 TiB    46 TiB      39.17
TOTAL  116 TiB  71 TiB  46 TiB    46 TiB      39.17

--- POOLS ---
POOL             ID   PGS  STORED  OBJECTS    USED  %USED  MAX AVAIL
datastore01       5  1024  11 TiB    3.01M  45 TiB  41.74     16 TiB
.mgr              6     1  10 MiB        4  30 MiB      0     21 TiB
cephfs_data       9   128  26 GiB    6.73k  79 GiB   0.12     21 TiB
cephfs_metadata  10    16  27 MiB       29  82 MiB      0     21 TiB
```
