Hi guys,

I am trying to get my head around something with my Ceph cluster. I have 3 nodes with a Ceph storage pool.

I have 8 VMs on the cluster and total usage is sitting at 87%. As a rule of thumb, I try never to let it go above 85%.

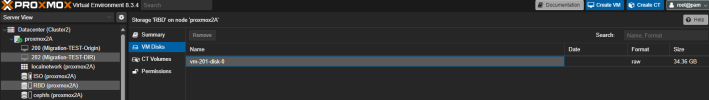

I have a few VMs that do not need HA and so don't need to be on the Ceph pool. To free up space on the Ceph pool, I migrated their disks to other storage, for example a standard ZFS mirror.

The problem is that it doesn't really help my Ceph storage pool usage. I have now moved 2 VMs to the new storage and the Ceph usage has basically stayed the same. It goes down slightly but then creeps back up.

So the first question is: why is the above not reducing my Ceph usage by the expected amount? (These VMs are about 25% of my total storage, so moving them should drastically reduce usage.)

The second question is: given that I have set a specific disk size for my VM disks, why is Ceph storage usage creeping up at all when the allocated disk size is not changing? Shouldn't the VM grow its own usage within the assigned disk?
