Which log would tell you that a monitor is blocking the communication from one client node to the ceph cluster? What's the best way to see whether the ceph monitors are blocking these two nodes that can't communicate? Again, there are no firewalls between nodes unless Proxmox inserted firewalling in recent updates that I don't know about. The cluster config is to disable the firewalls on all nodes.

[SOLVED] ceph storage not available to a node

- Thread starter walter.egosson

- Start date

At this point, it's looking like Ceph is blocking those clients. There aren't any networking issues that I can see. I've verified iptables on the Ceph nodes: all rulesets are empty and the policies are set to "ACCEPT", so there is no firewalling going on. Pings go through just fine from the client node to the Ceph monitor node. Communication is there, but something is blocking it. Are there new security measures in Ceph that could be loosened to allow communication and then, once communication is working everywhere, be put back in place?
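A side note on the iptables check: since this cluster is IPv6 only, it is the ip6tables ruleset, not the IPv4 one, that would affect Ceph traffic. Below is a minimal sketch for checking whether a ruleset is really empty. It assumes the iptables-style tooling is what is in use (rules managed purely through nftables may not show up here), and the function name ruleset_is_open is made up for illustration.

```shell
# Reads the output of `iptables -S` or `ip6tables -S` (filter table) on stdin.
# Succeeds (exit 0) only if every line is a built-in chain policy set to ACCEPT,
# i.e. there are no rules, no user-defined chains and no DROP/REJECT policy.
# Note: empty input also counts as open, so run the listing as root.
ruleset_is_open() {
    awk '
        NF == 0 { next }                          # ignore blank lines
        $1 == "-P" && $3 == "ACCEPT" { next }     # default policy ACCEPT is fine
        { blocked = 1 }                           # anything else may filter traffic
        END { exit blocked + 0 }
    '
}

# Usage on a node (as root):
#   ip6tables -S | ruleset_is_open && echo "no IPv6 filter rules"
#   iptables  -S | ruleset_is_open && echo "no IPv4 filter rules"
```

On Proxmox, pve-firewall status additionally reports whether the PVE firewall service itself is enabled and running.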

netcat from the other nodes works though? If so, then this is where you should dig deeper.

netcat is timing out trying to connect to the monitor node, but pinging that same address works fine.

Check if the PVE FW is enabled on any of the nodes (iptables -nvL) or if the switch / network config has an effect. Maybe the /etc/network/interfaces file has been changed on these nodes but never applied until the reboot?

Edit: Did not see the answers in the meantime. Hmm, you could check with ceph auth ls on the Ceph nodes whether the data for client.admin matches the keyring file on the non-working nodes.

"ceph auth ls" shows client.admin totally different from all nodes

client.admin

key: yada yada

caps: [mds] allow *

caps: [mgr] allow *

caps: [mon] allow *

caps: [osd] allow *

On the client nodes, none of those "caps:" entries are there, just the "key:" entry followed by the actual key, of course.
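As far as I know, a keyring file without "caps" lines is normal for a client: the capabilities are held by the monitors, and the part that has to match is the key itself. A minimal sketch for comparing just the key, assuming the usual INI-style keyring layout ("[client.admin]" header followed by "key = ..."). The helper names keyring_key and keyring_matches are hypothetical, and the keyring path in the usage comment is an assumption that may differ on your nodes.

```shell
# Print the secret stored for one entity (e.g. client.admin) in a Ceph keyring file.
#   $1 = path to the keyring file, $2 = entity name
keyring_key() {
    awk -v section="[$2]" '
        /^\[/ { in_section = ($1 == section) }    # track the current [entity] header
        in_section && $1 == "key" && $2 == "=" { print $3; exit }
    ' "$1"
}

# Succeed only if the key in the local keyring equals the expected key.
#   $1 = keyring file, $2 = entity name, $3 = expected key
keyring_matches() {
    [ -n "$3" ] && [ "$(keyring_key "$1" "$2")" = "$3" ]
}

# Usage (path is an assumption): print the key on a working Ceph node with
#   ceph auth get-key client.admin
# then, on the node that cannot connect:
#   keyring_matches /etc/ceph/ceph.client.admin.keyring client.admin "<key from above>" \
#       && echo "key matches"
```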

So this issue is partially solved at this point ... now it has turned into something else

As I previously mentioned, on July 5th, 2021 we moved our Ceph servers over to 25Gb fiber instead of 10Gb copper .. at least that's what we thought.

It turns out there seems to be a bug in the Proxmox GUI related to ifupdown2 and applying network configuration live. On July 5th, I moved the IPs from the 10Gb NICs over to the 25Gb NICs. The GUI then showed no IPs on the 10Gb NICs and only on the 25Gb NICs, but on the command line I find that the IPs never came off the 10Gb NICs, although the GUI showed that nothing was on them.

So this problem of Ceph client nodes not being able to access the Ceph storage turns out to be that the IPs actually got removed on reboot.

The question now is: is this bug in the GUI known about? And in the meantime, is there a recommendation for a smooth transition from 10Gb copper to 25Gb fiber? I now have to do the job all over again (it took 2 hours the first time, on a holiday), and I don't believe I can trust the GUI to make the correct NIC IP address changes and actually remove the IPs from the old NICs.

Obviously, this time, I need to be sure that Ceph and the Ceph clients will all be running over the 25Gb fiber when finished, with minimal downtime.

So the whole problem turned into a networking issue caused by the Proxmox GUI giving me incorrect information. Please, don't anyone flame me for this, it's simply a statement of fact. I maintain these servers to the latest patch level and have adjusted config files and so on over the years so the cluster is healthy and Ceph is healthy. We are running Ceph on Dell R740 NVMe nodes with 17 NVMe drives per node and each drive is a single OSD. As previously mentioned, the Ceph cluster from the beginning has been IPv6 only and continues to be.

Also, as far as this bug is concerned with not removing IP addresses from NICs although the GUI shows them removed, is this issue gone in Proxmox 7.0? I understand ifupdown2 is standard for 7.0.
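The situation described in this post (addresses gone from the GUI but still live on the old NICs) can be checked from the command line. Here is a minimal sketch that lists any address assigned to more than one interface, based on the one-line output format of ip -o addr show. The function name duplicate_addresses is made up for illustration, and the 2001:db8:: addresses in the comment are documentation placeholders, not the real ones.

```shell
# Reads `ip -o addr show scope global` output on stdin and prints every
# address/prefix that is assigned to more than one interface, followed by
# the interfaces carrying it.
duplicate_addresses() {
    awk '
        $3 == "inet" || $3 == "inet6" {
            # $2 is the interface name, $4 the address with prefix length
            if ($4 in ifaces) { ifaces[$4] = ifaces[$4] " " $2 }
            else              { ifaces[$4] = $2 }
            count[$4]++
        }
        END { for (a in count) if (count[a] > 1) print a, ifaces[a] }
    ' | sort
}

# Usage on a node:
#   ip -o addr show scope global | duplicate_addresses
# A line such as
#   2001:db8:0:2::ff:44/64 ens5f0 ens1f0
# would mean the same address is still live on the old and the new NIC.
```

No output means no address is present on two interfaces at once.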

Can you share the contents of /etc/network/interfaces, the output of ip a and a screenshot of the network panel in the GUI? Should you have public IP addresses in use, please mask them in a way that each masked IP can still be identified uniquely.

I don't think the last one went through ... sorry .. trash Chrome browser .. using Firefox this time

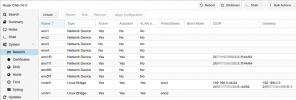

ens1f0 and ens1f1 are the 25Gb fiber NICs

ens5f0 and ens5f1 are the 10Gb copper NICs

Nothing new on this? We had a problem on Saturday morning from these networking issues, caused by the GUI telling a different story than the reality ...

Sorry for the late reply.

How did you set the IPs on the 25G NICs? According to the screenshots, the /etc/network/interfaces has none configured and that is where the GUI is getting its data from.

Is there a /etc/network/interfaces.new file? Does it contain the config you would actually like to have?

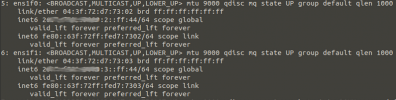

What you are saying is incorrect. Please re-check the screenshots I sent; here is a piece of that in a fresh screenshot focusing on the IP addressing on the 25Gb NICs from the 'ip a' command.

ALL of the 25Gb NICs showed IP addresses, and the GUI shows the IP addressing ONLY on the 25Gb, not on the 10Gb.

2xxx:xxxx:xxxx:2::ff:44 and 2xxx:xxxx:xxxx:3::ff:44 are the IP addresses for this particular node shown clearly in the screenshot

This Ceph cluster uses IPv6 only, no IPv4 and has done since inception. There have been no issues with this nor assigning IP addresses until now and until ifupdown2.

Ah okay, now I got it (after mixing up the 10G and 25G NICs). The same IPs were still configured on the 10G NICs after changing the network config.

That is indeed a bug. But as I explained, the GUI is showing what is configured in /etc/network/interfaces, not what ip addr shows.

When you click on "Apply Configuration", the temporary /etc/network/interfaces.new file is moved to /etc/network/interfaces and ifreload -a of the ifupdown2 package is called to apply the new configuration.

That is where I think something went wrong. Do you remember if there were errors reported when you hit "Apply Configuration"?

To investigate this further, we would need a configuration that can reproduce this issue and then, manually running ifreload -a with the changed /etc/network/interfaces file might give some more details of what is going wrong.

After applying the config, no, there were no errors whatsoever .. that was the reason I treated the changeover from 10 to 25 as successful

I understand the GUI is showing what is configured in /etc/network/interfaces .. the problem isn't what is in /etc/network/interfaces but rather, the reality of what 'ip a' shows and that the GUI and /etc/network/interfaces are showing something entirely different

So, at this point, essentially you are saying there isn't anything more you can do

As I said, we had a problem on Saturday .. we had to fix the IP address situation and take all the 25Gb addresses off and leave only 10 .. we are running on the 10 .. again, this is a production cluster with dozens of customers actively using it .. we can't just test things out and send them over to you guys

These are Dell R740 NVMe systems .. ZFS mirror for the OS and single Enterprise NVMe drives for the 17 OSDs per node .. Intel 10Gb NICs and Mellanox 25Gb NICs ... 10Gb switch is Dell and 25Gb fiber switch is also Dell. Networking for Ceph is in no way connected to public networks nor can be routed out to anything publicly ...

So far, I have been entirely unsuccessful in finding a way to switch our Ceph networking over from 10Gb to 25Gb and the latest problems all stem from this bug of the GUI and /etc/network/interfaces telling me something other than the real config and I am very limited in what I can do .. a few of our customers support food distribution and expect nearly 24/7 support so I can't just make changes and interrupt Ceph services in order to send something over to Proxmox
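Since the disagreement in this thread is between what /etc/network/interfaces declares and what 'ip a' reports, a direct comparison of the two can make the gap visible after each "Apply Configuration". The following is only a sketch under some assumptions: addresses in the interfaces file are written in CIDR form on an "address" line, they are spelled the same way the kernel prints them, and no "source" includes are involved. All three function names are hypothetical.

```shell
# Print "interface address/prefix" for every address line in an interfaces file.
configured_addresses() {
    awk '
        $1 == "iface" { ifname = $2 }                        # start of a stanza
        $1 == "address" && ifname != "" { print ifname, $2 }
    ' "$1" | sort
}

# Print "interface address/prefix" from `ip -o addr show scope global` on stdin.
live_addresses() {
    awk '$3 == "inet" || $3 == "inet6" { print $2, $4 }' | sort
}

# Print addresses that are live on the system but not declared in the file.
#   $1 = interfaces file, stdin = output of `ip -o addr show scope global`
stale_addresses() {
    cfg=$(mktemp) && live=$(mktemp) || return 1
    configured_addresses "$1" > "$cfg"
    live_addresses > "$live"
    comm -13 "$cfg" "$live"          # keep only lines unique to the live list
    rm -f "$cfg" "$live"
}

# Usage on a node:
#   ip -o addr show scope global | stale_addresses /etc/network/interfaces
```

Any line printed is an address the kernel still holds although the configuration no longer mentions it, which is the state described for the 10Gb NICs.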

So the machines are still in this state? What does ip route say? Do you have routes for both NICs or only for one?

What is the output of apt-cache policy ifupdown2?

No, the machines couldn't be left in that state .. I had to remove all IP addressing for 25Gb NICs and re-apply IP addresses to the 10Gb so that now only the 10Gb NICs on the Ceph nodes have addressing.

Also, as far as 'ip route' goes, it wouldn't show anything as there are no routes since this is a sealed off network with no access to anywhere. Node to node on a single layer 2 network. The switch is connected to no other switch.

See attached for 'apt-cache policy ifupdown2' output
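One remark on the 'ip route' question: plain ip route lists IPv4 routes only, so on an IPv6-only network ip -6 route is the one to look at. Even on an isolated layer 2 segment the kernel normally installs an on-link route for each configured prefix, so if the same address sits on two NICs, the same prefix would be expected to show up with two devices. A minimal sketch to spot that follows; the function name multi_device_prefixes is made up for illustration.

```shell
# Reads `ip -6 route show` output on stdin and prints every destination
# prefix that has routes through more than one device.
multi_device_prefixes() {
    awk '
        {
            dev = ""
            for (i = 2; i < NF; i++) if ($i == "dev") { dev = $(i + 1); break }
            if (dev == "" || $1 == "fe80::/64") next   # link-local exists on every NIC
            key = $1 SUBSEP dev
            if (key in seen) next                      # count each prefix/device pair once
            seen[key] = 1
            if ($1 in devs) { devs[$1] = devs[$1] " " dev }
            else            { devs[$1] = dev }
            n[$1]++
        }
        END { for (p in n) if (n[p] > 1) print p, devs[p] }
    ' | sort
}

# Usage on a node:
#   ip -6 route show | multi_device_prefixes
```

If this prints a prefix with both a 10Gb and a 25Gb interface, traffic for that network may leave through either NIC, which would fit the symptoms described earlier in the thread.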


Ok .. an update on this .. we just ordered Intel 25Gb cards (two for testing) and installed them in a couple of the servers

I was able to assign IPs and copy large files back and forth. I have been unable to do this with the Mellanox cards

The Mellanox cards we have installed are the following:

Mellanox Connect X4 25Gb MCX-4121A-ACAT

Can you tell us whether these are supported by Proxmox 6.4? Would they be supported on Proxmox 7.0? Do you not support Mellanox at all, or only certain models of their NICs on 25Gb fiber SFP28?

By the way, the Intel NICs we installed to test with are these

Intel XXV710 Dual Port 25GbE SFP28/SFP+ PCIe Adapter

Are these fully supported on Proxmox 6.4 and 7.0, at full 25Gb speeds and functionality?

I ask because if it turns out we have to remove the Mellanox cards from all servers and replace with Intel 25Gb NICs, we don't want to go through this again so would like to be sure that these NICs are the correct ones to go with. Thanks so much in advance.
