1. With 8 nodes, can it be moved to 2/2? What is the logic behind that?

More nodes also means a higher chance of something going wrong, no?

I don't quite understand your question. Size 2 means that Ceph keeps only two copies of a PG (which holds the objects). If one disk fails, the risk that another disk holding the same PG fails before the second copy is recovered is high enough to matter. A calculation was floating around somewhere on the Ceph mailing list (AFAIR ~11%). It all depends on how long the recovery takes and how likely it is to lose a disk.
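To make the dependence on recovery time and failure likelihood concrete, here is a minimal sketch. It is not the mailing-list calculation and does not try to reproduce the ~11% figure. It assumes independent disk failures at a constant rate, and the function name and all parameters (`other_disks`, `afr`, `recovery_hours`) are hypothetical inputs you would have to estimate for your own cluster.

```python
import math


def second_failure_probability(other_disks, afr, recovery_hours):
    """Chance that at least one of `other_disks` fails during recovery.

    Assumptions (not from Ceph itself): failures are independent and
    follow a constant rate derived from `afr`, the annual failure
    probability of a single disk (e.g. 0.02 for 2%).
    `other_disks` is the number of disks holding the remaining copy
    of PGs that were on the failed disk.
    """
    hours_per_year = 24 * 365
    # Convert the annual failure probability into an hourly failure rate.
    rate_per_hour = -math.log(1.0 - afr) / hours_per_year
    # Probability that not all disks survive the recovery window.
    return 1.0 - math.exp(-rate_per_hour * other_disks * recovery_hours)


if __name__ == "__main__":
    # Purely illustrative inputs, not measured values.
    for hours in (1, 6, 24):
        p = second_failure_probability(other_disks=10, afr=0.02,
                                       recovery_hours=hours)
        print(f"recovery {hours:>2} h -> {p:.6%}")
```

The point of the sketch is only the shape of the relationship: the longer the recovery takes and the more disks share PGs with the failed one, the higher the chance of losing the last copy with size 2.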

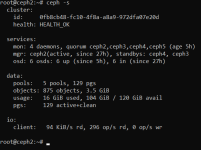

2. It's killing me: with 6 nodes of 2x 960 GB each, the total I get is 1.92 TB for all the nodes together.

It looks like there is a kind of RAID 1 across both OSDs on each node, and then additional replication to 2 more nodes (3 copies in total).

So doesn't it make sense to move to 2/2 replication, if I already have OSD replication on each node anyway?

No, there is no RAID 1. CRUSH distributes the PGs at node level (the default setting). E.g. a PG will be on a disk on node 1 and on a disk on node 6.

=== size 3 ===

960 * 0.8 = 768 GB

768 * 12 = 9216 GB (80% fill of OSD)

9216 / 3 = 3072 GB (space after replication)

3072 / 6 = 512 GB (space per host)

512 * 2 = 1024 GB (data that needs to be moved after 2x nodes fail)

3072 - 1024 = 2048 GB (usable space)

2048 * 0.8 = 1638.4 GB (80% of usable space, 20% for growth in degraded state)

=== size 2 ===

960 * 0.8 = 768 GB

768 * 12 = 9216 GB (80% fill of OSD)

9216 / 2 = 4608 GB (space after replication)

4608 / 6 = 768 GB (space per host)

768 * 2 = 1536 GB (data that needs to be moved after 2x nodes fail)

4608 - 1536 = 3072 GB (usable space)

3072 * 0.8 = 2457.6 GB (80% of usable space, 20% for growth in degraded state)

The difference is 819.2 GB, roughly one disk's worth. The calculation is just an example.
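The same arithmetic can be written as a small script, which makes it easy to plug in other node counts or disk sizes. This is only a sketch of the example calculation above; the function and parameter names are made up for illustration, and the defaults (80% fill, 2 failed nodes, 20% growth reserve) are the assumptions used in the example, not values read from Ceph.

```python
def usable_capacity_gb(hosts=6, osds_per_host=2, osd_size_gb=960, size=3,
                       fill_ratio=0.8, failed_hosts=2, growth_reserve=0.2):
    """Rough usable capacity in GB, following the example calculation."""
    # Space on all OSDs when each is filled to `fill_ratio`.
    raw_at_fill = osd_size_gb * fill_ratio * hosts * osds_per_host
    # Space left after keeping `size` copies of every PG.
    after_replication = raw_at_fill / size
    # Share of that space held by one host.
    per_host = after_replication / hosts
    # Data that has to be moved elsewhere when `failed_hosts` nodes fail.
    to_move = per_host * failed_hosts
    usable = after_replication - to_move
    # Keep a reserve for growth while the cluster is degraded.
    return usable * (1.0 - growth_reserve)


if __name__ == "__main__":
    size3 = usable_capacity_gb(size=3)
    size2 = usable_capacity_gb(size=2)
    print(f"size 3: {size3:.1f} GB")
    print(f"size 2: {size2:.1f} GB")
    print(f"difference: {size2 - size3:.1f} GB")
```

Calling it with, for example, `hosts=8` shows how the numbers shift for a larger cluster under the same assumptions.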

To be clear, this is a simple calculation and might not take everything into account, but it hopefully illustrates a little of what Ceph is doing with all that space. You have to weigh whether you want more data safety or more space. Also keep in mind that this calculation doesn't take into account how fast a recovery can be done, and hence does not represent the risk of a second disk failing.