Hi, we take snapshots of our VMs every night at midnight and remove the 16th snapshot. After the snapshot is removed, all PGs go into the snaptrim state. This lasts 9 to 10 hours, and the VMs are unusable until it finishes.

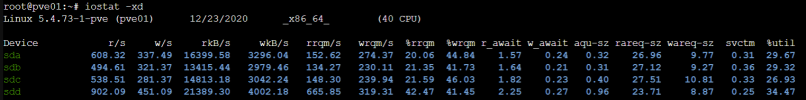

Another thing: last week we added 3 disks, one per node. The new disks are not GPT, and their OSDs show the highest used space.

The cluster was created on version 5.2, and the latest disks were added with 6.1 or 6.2.

Could this be the problem?

```
root@pve01:~# ceph -s
  cluster:
    id:     7ced7402-a929-461a-bd40-53f863fa46ab
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum pve02,pve03,pve01 (age 5d)
    mgr: pve01(active, since 5d), standbys: pve03, pve02
    osd: 12 osds: 12 up (since 5d), 12 in (since 5d)

  data:
    pools:   1 pools, 512 pgs
    objects: 4.58M objects, 8.2 TiB
    usage:   27 TiB used, 15 TiB / 42 TiB avail
    pgs:     486 active+clean+snaptrim_wait
             24  active+clean+snaptrim
             2   active+clean+scrubbing+deep+snaptrim_wait

  io:
    client:   3.1 MiB/s rd, 3.6 MiB/s wr, 2.10k op/s rd, 166 op/s wr
```
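To make the "all PGs" point concrete: 486 + 24 + 2 = 512, which equals the pool's 512 PGs, so at the time of this output every PG was either trimming or waiting to trim. The sketch below tallies that from the pasted `pgs:` lines. It is a minimal illustration, assuming each line is formatted as shown above (a count followed by a `+`-separated state string); `tally_snaptrim` is a hypothetical helper, not part of Ceph or Proxmox.

```python
import re

# PG state lines copied from the `ceph -s` output above (the "pgs:" section).
PGS_SECTION = """\
pgs:     486 active+clean+snaptrim_wait
         24  active+clean+snaptrim
         2   active+clean+scrubbing+deep+snaptrim_wait
"""

# Matches "<count> <state+state+...>", with an optional leading "pgs:" label.
LINE_RE = re.compile(r"^\s*(?:pgs:)?\s*(\d+)\s+([a-z_+]+)\s*$")


def tally_snaptrim(section: str) -> dict:
    """Count PGs by whether their state includes snaptrim or snaptrim_wait.

    Hypothetical helper: assumes one "<count> <states>" entry per line,
    as in the pasted `ceph -s` output.
    """
    totals = {"snaptrim": 0, "snaptrim_wait": 0, "other": 0, "total": 0}
    for line in section.splitlines():
        m = LINE_RE.match(line)
        if not m:
            continue  # skip lines that are not PG state entries
        count = int(m.group(1))
        states = m.group(2).split("+")  # exact state names, e.g. "snaptrim_wait"
        if "snaptrim" in states:
            totals["snaptrim"] += count
        elif "snaptrim_wait" in states:
            totals["snaptrim_wait"] += count
        else:
            totals["other"] += count
        totals["total"] += count
    return totals


if __name__ == "__main__":
    print(tally_snaptrim(PGS_SECTION))
    # {'snaptrim': 24, 'snaptrim_wait': 488, 'other': 0, 'total': 512}
```

Splitting on `+` and matching whole state names keeps `snaptrim` from also matching `snaptrim_wait`. A `total` of 512 matching `pools: 1 pools, 512 pgs` with `other` at 0 is what "all PGs go into snaptrim" means in numbers.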