Hi all,

Simple setup: 3 nodes, each with 1 SSD and 1 HDD, connected via a 10Gb network.

I shut down one node (via the GUI) and moved it from one location to another, with a downtime of about 15 minutes.

I had moved all the VMs from that node to the other nodes.

When I started the node, everything seemed to start fine and Ceph went to work, but then it stopped and showed the error below.

I thought it would fix itself after a day or two, but the message hasn't changed for 5 days.

All the VMs are running fine.

All the monitors and nodes are on the same version.

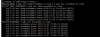

Looking in the log, this indicates the problem:

OSDs 2, 4 and 0 are all the SSDs, which act as a cache tier for the HDDs.

What command should I run to repair the problem?

Thanks,

Damon
