Hello everyone, I would like to share my experience and hear your opinions on using Ceph with librbd versus KRBD.

I have noticed a significant performance increase using KRBD, but I am unsure whether it is reliable, especially for continuous and long-term use.

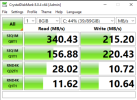

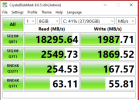

I am attaching screenshots taken from a Windows VM running CrystalDiskMark. The one with lower performance is running with librbd, while the one with (significantly) higher performance is running with KRBD.

My setup is a small Proxmox cluster with Ceph, consisting of three Dell 730 servers, each with 10 SAS HDDs. The controllers are PERC H730, reconfigured in mixed mode (RAID1 with 2 HDDs for boot, and 10 HDDs in HBA mode). Both the public network and the Ceph network use a 2x10 Gbit LACP bond (layer 3+4 hashing) per host.

The test VMs use the VirtIO SCSI single controller, with the disk cache set to write-back.

During testing, the KRBD VM occasionally appeared completely frozen, and even the console was unresponsive until the test finished. After that, everything resumed working normally. I am unsure whether this is caused by the host, in which case it could affect other VMs as well, or whether it is limited to the guest itself.
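One way to tell a host-side stall from a guest-only one might be to watch the host's I/O pressure while the benchmark runs. The sketch below is only an illustration: it assumes the Proxmox host kernel exposes pressure stall information at `/proc/pressure/io` (not verified on this cluster), and the helper names `parse_psi` and `read_io_pressure` are made up for this example.

```python
from pathlib import Path


def parse_psi(text):
    """Parse the text of a /proc/pressure/* file into nested dicts.

    Each line looks like:
        some avg10=0.00 avg60=0.00 avg300=0.00 total=0
    """
    result = {}
    for line in text.strip().splitlines():
        kind, *fields = line.split()
        # Every field is a key=value pair; values are converted to float.
        result[kind] = {
            key: float(value)
            for key, value in (field.split("=", 1) for field in fields)
        }
    return result


def read_io_pressure(path="/proc/pressure/io"):
    """Read and parse the host's I/O pressure (path is an assumption)."""
    return parse_psi(Path(path).read_text())
```

Calling `read_io_pressure()` on the host every few seconds during the CrystalDiskMark run and printing `["full"]["avg10"]` would show whether tasks on the host itself are stalling on I/O. If that value stays near zero while the guest is frozen, the problem is more likely confined to the guest; if it spikes, other VMs on the same host could be affected too.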

Thanks in advance for your advice and suggestions.

