Hello,

I'm seeing poor write performance (low IOPS and TPS) on a Linux VM running MongoDB applications.

Cluster:

Nodes: 3

Hardware: HP Gen11

Disks: 4 x PM1733 Enterprise NVMe ## With latest firmware/driver.

Network: BCM57414 NetXtreme-E / 25G

PVE Version: 8.2.4 , 6.8.8-2-pve

Ceph:

Version: 18.2.2 Reef.

4 OSD's per node.

PG: 512

Replica 2/1

Additional ceph config:

bluestore_min_alloc_size_ssd = 4096 ## also tried 8K

osd_memory_target = 8G

osd_op_num_threads_per_shard_ssd = 8

OSD disks cache configured as "write through" ## Ceph recommendation for better latency.

Apply / commit latency: below 1 ms.

Network:

MTU: 8191 ## Maximum value supported.

TX / RX ring: 2046 ## Maximum value supported.

iperf3 results: about 24 Gbit/s between all Ceph nodes:

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 28.3 GBytes 24.3 Gbits/sec 0 sender

[ 5] 0.00-10.00 sec 28.3 GBytes 24.3 Gbits/sec receiver

VM:

Rocky 9 (also tried Ubuntu 22):

boot: order=scsi0

cores: 32

cpu: host

memory: 4096

name: test-fio-2

net0: virtio=BC:24:11:F9:51:1A,bridge=vmbr2

numa: 0

ostype: l26

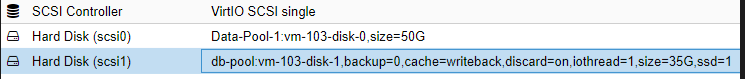

scsi0: Data-Pool-1:vm-102-disk-0,size=50G ## OS

scsihw: virtio-scsi-pci

smbios1: uuid=5cbef167-8339-4e76-b412-4fea905e87cd

sockets: 2

tags: templatae

virtio0: sa:vm-103-disk-0,backup=0,cache=writeback,discard=on,iothread=1,size=33G ### Local disk - same NVME

virtio2: db-pool:vm-103-disk-0,backup=0,cache=writeback,discard=on,iothread=1,size=34G ### Ceph - same NVME

virtio3: db-pool:vm-104-disk-0,backup=0,cache=unsafe,discard=on,iothread=1,size=35G ### Ceph - same NVME

Disk1: Local NVMe, with iothread

Disk2: Ceph disk, cache=writeback, with iothread

Disk3: Ceph disk, cache=unsafe, with iothread

I ran the fio test in one SSH session and watched iostat in a second session:

fio --filename=/dev/vda --sync=1 --rw=write --bs=64k --numjobs=1 --iodepth=1 --runtime=15 --time_based --name=fioa

Results:

Disk1 - Local NVMe:

WRITE: bw=74.4MiB/s (78.0MB/s), 74.4MiB/s-74.4MiB/s (78.0MB/s-78.0MB/s), io=1116MiB (1170MB), run=15001-15001msec

TPS: 2500

Disk2 - Ceph disk, cache=writeback:

WRITE: bw=18.6MiB/s (19.5MB/s), 18.6MiB/s-18.6MiB/s (19.5MB/s-19.5MB/s), io=279MiB (292MB), run=15002-15002msec

TPS: 550-600

Disk3 - Ceph disk, cache=unsafe:

WRITE: bw=177MiB/s (186MB/s), 177MiB/s-177MiB/s (186MB/s-186MB/s), io=2658MiB (2788MB), run=15001-15001msec

TPS: 5000-8000
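
To make the three results easier to compare, here is a minimal Python sketch that converts the bandwidth fio reported into IOPS and an approximate per-write latency. It assumes every fio write is a single 64 KiB I/O and that, with --iodepth=1 and --numjobs=1, each write completes before the next one is issued, so latency is roughly 1 / IOPS. The function names are just illustrative helpers, not part of fio. The TPS column in iostat may not match these numbers, since the block layer can split or merge requests.

```python
KIB_PER_MIB = 1024


def iops_from_bandwidth(bw_mib_s: float, block_kib: int) -> float:
    """Convert bandwidth in MiB/s to I/O operations per second for a fixed block size."""
    return bw_mib_s * KIB_PER_MIB / block_kib


def latency_ms_at_qd1(iops: float) -> float:
    """Approximate per-write latency in ms, assuming one outstanding I/O at a time."""
    return 1000.0 / iops


# Bandwidth values copied from the fio "WRITE:" lines above (MiB/s), bs=64k.
reported_bw_mib_s = {
    "Disk1 local NVMe": 74.4,
    "Disk2 Ceph cache=writeback": 18.6,
    "Disk3 Ceph cache=unsafe": 177.0,
}

for name, bw in reported_bw_mib_s.items():
    iops = iops_from_bandwidth(bw, block_kib=64)
    print(f"{name}: {iops:.0f} IOPS, ~{latency_ms_at_qd1(iops):.2f} ms per write")
```

Under those assumptions this works out to roughly 0.84 ms per synchronous write on the local NVMe, about 3.4 ms on the Ceph disk with writeback, and about 0.35 ms with cache=unsafe.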

The VM disk cache is set to "Write back".

The I/O scheduler is set to "none" (on the Ceph OSD disks as well).

Any suggestions on how to improve the write speed (with the cache set to write back or none)?

How can I find the bottleneck?

I will gladly add more information as needed.

Many thanks.
