Hello everyone,

About 4 weeks ago I switched from iSCSI (on a NAS) to Ceph (RBD). The migration went without any problems.

My cluster:

3 nodes

2x 1 TB SATA SSD per node

10 Gbit/s Ceph network

Today I migrated a 300 GB VM disk from iSCSI (on a NAS) to the Ceph pool.

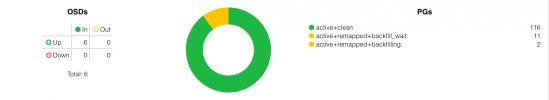

About 10 minutes later, the following appeared (screenshot 1):

It also shows that data is being synchronized, but the progress is stuck at 0% (screenshot 2).

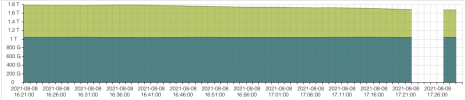

The size of the pool keeps shrinking (screenshot 3).

What can I do here?

Best regards