Hi there.

Our 10-node Proxmox cluster was upgraded from 7.0 (Pacific) to 7.4 (Pacific). The upgrade completed without problems and the VMs are fine.

Next, I upgraded Ceph from Pacific to Quincy.

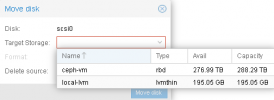

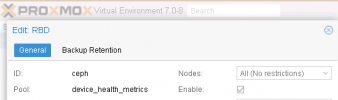

However, one of my VMs now has a problem. The `device_health_metrics` pool is renamed to `.mgr` during the upgrade, and this VM's disk was in that pool.

Is there any fix for this?

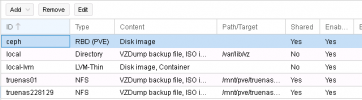

I also noticed that `/etc/pve/storage.cfg` still has a `pool device_health_metrics` line.

Should it be renamed or deleted? Thanks in advance.

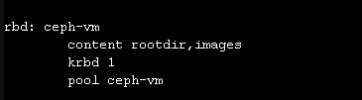

```
rbd: ceph-vm
	content images
	krbd 0
	pool device_health_metrics
```
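One way to spot storage entries that still point at a pool that no longer exists is to compare the `pool` option of each `rbd:` entry against the cluster's current pool list. This Python sketch assumes the layout shown in the stanza above (an unindented `type: id` header followed by indented `key value` lines). `parse_storage_cfg` and `find_stale_rbd_pools` are hypothetical helpers, not Proxmox tools, and the set of existing pools is passed in by hand; on a live cluster it could come from `ceph osd pool ls`.

```python
# Sketch: flag rbd storage entries whose configured pool no longer exists.
# Assumes the storage.cfg layout shown above: an unindented "type: id" header
# followed by indented "key value" option lines. Helper names are hypothetical.


def parse_storage_cfg(text: str) -> dict[str, dict[str, str]]:
    """Return {storage_id: {"type": ..., option: value, ...}}."""
    entries: dict[str, dict[str, str]] = {}
    current = None
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        if not line[0].isspace():
            # Header such as "rbd: ceph-vm" starts a new storage entry.
            stype, _, sid = line.partition(":")
            current = {"type": stype.strip()}
            entries[sid.strip()] = current
        elif current is not None:
            # Option line such as "\tpool device_health_metrics".
            key, _, value = line.strip().partition(" ")
            current[key] = value.strip()
    return entries


def find_stale_rbd_pools(text: str, existing_pools: set[str]) -> dict[str, str]:
    """Map storage id -> pool for rbd entries whose pool is not in existing_pools."""
    stale = {}
    for sid, opts in parse_storage_cfg(text).items():
        pool = opts.get("pool")
        # Entries without an explicit pool line are skipped, not guessed.
        if opts["type"] == "rbd" and pool is not None and pool not in existing_pools:
            stale[sid] = pool
    return stale


if __name__ == "__main__":
    cfg = "rbd: ceph-vm\n\tcontent images\n\tkrbd 0\n\tpool device_health_metrics\n"
    # Assumption for illustration: ".mgr" is the only pool left after the upgrade.
    print(find_stale_rbd_pools(cfg, {".mgr"}))  # {'ceph-vm': 'device_health_metrics'}
```

The helper only reads the text and changes nothing on disk.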

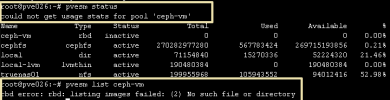

```
# qm start 302
kvm: -drive file=rbd:device_health_metrics/vm-302-disk-0:conf=/etc/pve/ceph.conf:id=admin:keyring=/etc/pve/priv/ceph/ceph-vm.keyring,if=none,id=drive-scsi0,format=raw,cache=none,aio=io_uring,detect-zeroes=on: error opening pool device_health_metrics: No such file or directory
start failed: QEMU exited with code 1
```
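The `file=rbd:` part of the error shows exactly which pool and image QEMU tried to open; here the pool matches the `pool` line of the `ceph-vm` storage entry. A minimal Python sketch that splits such a `-drive` argument into its parts, assuming the values contain no escaped colons (`\:`) or commas. `parse_rbd_drive` is a hypothetical helper.

```python
# Sketch: split a QEMU "-drive file=rbd:..." argument into pool, image and options.
# Assumes no escaped ":" or "," inside values; parse_rbd_drive is hypothetical.


def parse_rbd_drive(drive_arg: str) -> dict[str, str]:
    # The -drive options are comma-separated; pick out the file= one.
    file_value = next(
        (part[len("file="):] for part in drive_arg.split(",") if part.startswith("file=")),
        None,
    )
    if file_value is None or not file_value.startswith("rbd:"):
        raise ValueError("no file=rbd:... option found")
    # After "rbd:" comes "pool/image", then ":"-separated key=value pairs.
    target, *options = file_value[len("rbd:"):].split(":")
    pool, _, image = target.partition("/")
    result = {"pool": pool, "image": image}
    for opt in options:
        key, _, value = opt.partition("=")
        result[key] = value
    return result


if __name__ == "__main__":
    arg = (
        "file=rbd:device_health_metrics/vm-302-disk-0:conf=/etc/pve/ceph.conf"
        ":id=admin:keyring=/etc/pve/priv/ceph/ceph-vm.keyring,if=none,"
        "id=drive-scsi0,format=raw,cache=none,aio=io_uring,detect-zeroes=on"
    )
    info = parse_rbd_drive(arg)
    print(info["pool"], info["image"])  # device_health_metrics vm-302-disk-0
```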

```
# ceph -s
  cluster:
    id: 1fe1245d-4934-439f-bb8c-5cc650efe185
    health: HEALTH_OK

  services:
    mon: 4 daemons, quorum pve026,pve025,pve027,pve029 (age 39m)
    mgr: pve025(active, since 79m), standbys: pve026, pve029, pve027
    osd: 120 osds: 120 up (since 92s), 120 in (since 4d)

  data:
    pools: 1 pools, 128 pgs
    objects: 2.75M objects, 10 TiB
    usage: 29 TiB used, 844 TiB / 873 TiB avail
    pgs: 128 active+clean

  io:
    client: 255 KiB/s wr, 0 op/s rd, 14 op/s wr
```

```
# ceph versions
{
    "mon": {
        "ceph version 17.2.6 (995dec2cdae920da21db2d455e55efbc339bde24) quincy (stable)": 4
    },
    "mgr": {
        "ceph version 17.2.6 (995dec2cdae920da21db2d455e55efbc339bde24) quincy (stable)": 4
    },
    "osd": {
        "ceph version 17.2.6 (995dec2cdae920da21db2d455e55efbc339bde24) quincy (stable)": 120
    },
    "mds": {
        "ceph version 17.2.6 (995dec2cdae920da21db2d455e55efbc339bde24) quincy (stable)": 2
    },
    "overall": {
        "ceph version 17.2.6 (995dec2cdae920da21db2d455e55efbc339bde24) quincy (stable)": 130
    }
}
```
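To confirm that every daemon finished the upgrade, the `overall` section of `ceph versions` should hold a single version string. A small Python sketch that checks this from the JSON output, assuming every top-level key other than `overall` is a daemon type (mon, mgr, osd, mds, ...). `check_ceph_versions` is a hypothetical helper.

```python
import json

# Sketch: check that every Ceph daemon reports the same release, using the
# JSON printed by `ceph versions`. Assumes every top-level key other than
# "overall" is a daemon type. Helper name is hypothetical.


def check_ceph_versions(raw_json: str) -> dict:
    data = json.loads(raw_json)
    overall = data["overall"]  # version string -> number of daemons
    per_type_total = sum(
        count
        for daemon_type, versions in data.items()
        if daemon_type != "overall"
        for count in versions.values()
    )
    return {
        "single_version": len(overall) == 1,
        "versions": sorted(overall),
        "daemons": sum(overall.values()),
        # The per-type counts should add up to the "overall" count.
        "totals_match": sum(overall.values()) == per_type_total,
    }


if __name__ == "__main__":
    v = "ceph version 17.2.6 (995dec2cdae920da21db2d455e55efbc339bde24) quincy (stable)"
    sample = json.dumps(
        {"mon": {v: 4}, "mgr": {v: 4}, "osd": {v: 120}, "mds": {v: 2}, "overall": {v: 130}}
    )
    result = check_ceph_versions(sample)
    print(result["single_version"], result["daemons"])  # True 130
```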

```
# ceph mon dump|grep min
dumped monmap epoch 13
min_mon_release 17 (quincy)
```

```
# pveversion -v
proxmox-ve: 7.4-1 (running kernel: 5.15.107-2-pve)
pve-manager: 7.4-13 (running version: 7.4-13/46c37d9c)
pve-kernel-5.15: 7.4-3
pve-kernel-5.11: 7.0-10
pve-kernel-5.15.107-2-pve: 5.15.107-2
pve-kernel-5.11.22-7-pve: 5.11.22-12
pve-kernel-5.11.22-1-pve: 5.11.22-2
ceph: 17.2.6-pve1
ceph-fuse: 17.2.6-pve1
corosync: 3.1.7-pve1
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx4
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.24-pve2
libproxmox-acme-perl: 1.4.4
libproxmox-backup-qemu0: 1.3.1-1
libproxmox-rs-perl: 0.2.1
libpve-access-control: 7.4.1
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.4-2
libpve-guest-common-perl: 4.2-4
libpve-http-server-perl: 4.2-3
libpve-rs-perl: 0.7.7
libpve-storage-perl: 7.4-3
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 5.0.2-2
lxcfs: 5.0.3-pve1
novnc-pve: 1.4.0-1
proxmox-backup-client: 2.4.2-1
proxmox-backup-file-restore: 2.4.2-1
proxmox-kernel-helper: 7.4-1
proxmox-mail-forward: 0.1.1-1
proxmox-mini-journalreader: 1.3-1
proxmox-offline-mirror-helper: 0.5.1-1
proxmox-widget-toolkit: 3.7.3
pve-cluster: 7.3-3
pve-container: 4.4-4
pve-docs: 7.4-2
pve-edk2-firmware: 3.20230228-4~bpo11+1
pve-firewall: 4.3-4
pve-firmware: 3.6-5
pve-ha-manager: 3.6.1
pve-i18n: 2.12-1
pve-qemu-kvm: 7.2.0-8
pve-xtermjs: 4.16.0-2
qemu-server: 7.4-3
smartmontools: 7.2-pve3
spiceterm: 3.2-2
swtpm: 0.8.0~bpo11+3
vncterm: 1.7-1
zfsutils-linux: 2.1.11-pve1
```
