Morning folks. I've finally got round to upgrading our hyperconverged cluster from v5 through to v7, and apart from the Ceph upgrade to Quincy (which involves me changing from FileStore to BlueStore), I have had no issues.

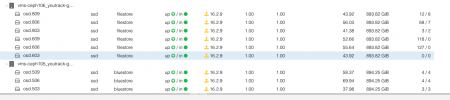

I do, however, have a yellow icon in the GUI which looks like it might be an upgrade icon.

Can anyone shed any light on this?
