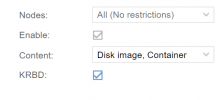

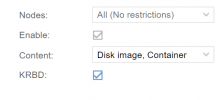

We recently enabled KRBD (by ticking the KRBD box in the storage configuration) on our running cluster. Faster I/O was the main reason: we measured a 30% gain in reads/writes on all VMs running in writeback cache mode.

But then a couple of VM disks needed to be resized. In both cases the disks went to 0 bytes.

Code:

Apr 25 17:53:47 pe03-pve pvedaemon[2615822]: <root@pam> update VM 1080: -delete sshkeys

Apr 25 17:53:47 pe03-pve pvedaemon[2615822]: cannot delete 'sshkeys' - not set in current configuration!

Apr 25 17:53:48 pe03-pve kernel: rbd6: p1 p2

Apr 25 17:53:48 pe03-pve kernel: rbd: rbd6: capacity 53687091200 features 0x3d

Apr 25 17:53:48 pe03-pve pvedaemon[2781571]: <root@pam> update VM 1080: resize --disk scsi0 --size +50G

Apr 25 17:53:48 pe03-pve kernel: rbd6: detected capacity change from 53687091200 to 107374182400

Apr 25 17:53:55 pe03-pve pvestatd[3498]: VM 1080 qmp command failed - VM 1080 qmp command 'query-proxmox-support' failed - got timeout

Apr 25 17:53:55 pe03-pve pvestatd[3498]: status update time (6.805 seconds)

Apr 25 17:54:00 pe03-pve systemd[1]: Starting Proxmox VE replication runner...

Apr 25 17:54:01 pe03-pve systemd[1]: pvesr.service: Succeeded.

Apr 25 17:54:01 pe03-pve systemd[1]: Started Proxmox VE replication runner.

Apr 25 17:54:06 pe03-pve pvestatd[3498]: VM 1080 qmp command failed - VM 1080 qmp command 'query-proxmox-support' failed - unable to connect to VM 1080 qmp socket - timeout after 31 retries

Apr 25 17:54:06 pe03-pve pvestatd[3498]: status update time (6.873 seconds)

Apr 25 17:54:15 pe03-pve pvestatd[3498]: VM 1080 qmp command failed - VM 1080 qmp command 'query-proxmox-support' failed - unable to connect to VM 1080 qmp socket - timeout after 31 retries

Apr 25 17:54:16 pe03-pve pvestatd[3498]: status update time (6.814 seconds)

Apr 25 17:54:25 pe03-pve kernel: rbd6: detected capacity change from 107374182400 to 0

Apr 25 17:54:25 pe03-pve pvestatd[3498]: status update time (6.276 seconds)

Please note that I know PVE 6.4 is EOL; we are progressively upgrading all cluster nodes.

Code:

# pveversion -v

proxmox-ve: 6.4-1 (running kernel: 5.4.203-1-pve)

pve-manager: 6.4-15 (running version: 6.4-15/af7986e6)

pve-kernel-5.4: 6.4-20

pve-kernel-helper: 6.4-20

pve-kernel-5.4.203-1-pve: 5.4.203-1

pve-kernel-5.4.128-1-pve: 5.4.128-2

pve-kernel-5.4.106-1-pve: 5.4.106-1

pve-kernel-5.4.73-1-pve: 5.4.73-1

ceph: 15.2.17-pve1~bpo10

ceph-fuse: 15.2.17-pve1~bpo10

corosync: 3.1.5-pve2~bpo10+1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.22-pve2~bpo10+1

libproxmox-acme-perl: 1.1.0

libproxmox-backup-qemu0: 1.1.0-1

libpve-access-control: 6.4-3

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.4-5

libpve-guest-common-perl: 3.1-5

libpve-http-server-perl: 3.2-5

libpve-storage-perl: 6.4-1

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.6-2

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

proxmox-backup-client: 1.1.14-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.6-2

pve-cluster: 6.4-1

pve-container: 3.3-6

pve-docs: 6.4-2

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-4

pve-firmware: 3.3-2

pve-ha-manager: 3.1-1

pve-i18n: 2.3-1

pve-qemu-kvm: 5.2.0-8

pve-xtermjs: 4.7.0-3

qemu-server: 6.4-2

smartmontools: 7.2-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 2.0.7-pve1

#VM info

Code:

# qm config 1080

agent: 1

autostart: 1

boot: order=scsi0;ide2;net0

cipassword: **********

ciuser: root

cores: 1

cpu: host

description:

hotplug: disk,network,usb

kvm: 1

memory: 2048

name: svr.******.com

net0: e1000=06:0F:AD:B4:69:4D,bridge=vmbr0,firewall=1,rate=2.5,tag=80

numa: 0

onboot: 1

ostype: l26

protection: 1

scsi0: vmroot:vm-1080-disk-0,cache=writeback,discard=on,format=raw,mbps_rd=50,mbps_wr=50,size=100G,ssd=1

scsihw: virtio-scsi-pci

serial0: socket

smbios1: uuid=e88abd65-774d-4414-ab48-90b38a38d76d

sockets: 1

vga: std

vmgenid: 03b9dac3-dee8-4796-b46a-c069119b7770

There are some other posts in this forum about the same issue, and they all relate to KRBD usage:

- https://forum.proxmox.com/threads/wiederherstellen-eines-images-aus-ceph-rbd-storage.68279/

- https://forum.proxmox.com/threads/ceph-disk-0-bytes-after-hard-resize.49495/

Any pointer will be appreciated.
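
In case it helps anyone checking their own nodes for the same symptom, here is a rough sketch that scans syslog lines for a krbd device whose capacity dropped to 0. The regex is based only on the kernel message format seen in my log above, so other kernel versions may word it differently, and the function name `find_zeroed_devices` is just something I made up for illustration.

```python
import re

# Pattern derived from the kernel messages in the log above.
# Assumption: other kernel versions may format this message differently.
CAPACITY_RE = re.compile(
    r"kernel: (?P<dev>rbd\d+): detected capacity change "
    r"from (?P<old>\d+) to (?P<new>\d+)"
)


def find_zeroed_devices(log_lines):
    """Return (device, previous_size_in_bytes) for each change that ended at 0."""
    hits = []
    for line in log_lines:
        match = CAPACITY_RE.search(line)
        if match and int(match.group("new")) == 0:
            hits.append((match.group("dev"), int(match.group("old"))))
    return hits


# Two lines copied from the log above: the resize itself, then the drop to 0.
sample = [
    "Apr 25 17:53:48 pe03-pve kernel: rbd6: detected capacity change from 53687091200 to 107374182400",
    "Apr 25 17:54:25 pe03-pve kernel: rbd6: detected capacity change from 107374182400 to 0",
]

print(find_zeroed_devices(sample))
```

To run it against a real log, the lines of the syslog file can be passed in instead of `sample`, for example by opening the file and handing the file object to the function.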