Hi All

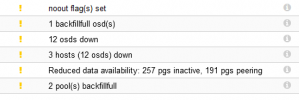

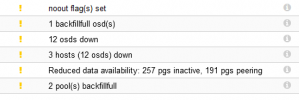

I have upgraded Ceph on two of our clusters following the Proxmox Nautilus to Octopus upgrade procedure. The first cluster works perfectly, but the second one does not. The upgrade went fine up to the step where the OSDs are restarted. At that point the OSD upgrade stalled, and Ceph now shows 3 of the 4 hosts, together with their OSDs, as down. The hosts themselves are up, they can reach each other, and the OSD services are running. During the upgrade I also got "1 backfillfull osd(s)" and "2 pool(s) backfillfull" warnings. I have set the full and backfillfull ratios, but the messages stay the same.
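Whether the backfillfull messages clear depends on how the ratios relate to each other and to the actual OSD usage. Ceph expects the thresholds to be strictly ascending (nearfull < backfillfull < full, with the OSD-side failsafe full ratio above them) and raises an OSD_OUT_OF_ORDER_FULL health warning otherwise; the cluster-wide values are visible with `ceph osd dump | grep ratio`. Below is a minimal Python sketch of that ordering check, assuming Ceph's documented defaults (0.85, 0.90, 0.95 and 0.97) as placeholder values. `out_of_order` and `threshold_tib` are hypothetical helpers for illustration, not Ceph APIs, and treating CRUSH weight as drive size in TiB is an assumption based on Ceph's default weighting.

```python
from typing import Dict, List

# Order in which Ceph expects the fullness thresholds to increase.
ORDER = ["nearfull", "backfillfull", "full", "failsafe_full"]


def out_of_order(ratios: Dict[str, float]) -> List[str]:
    """Hypothetical helper: describe every adjacent pair that is not strictly ascending."""
    problems = []
    for lower, higher in zip(ORDER, ORDER[1:]):
        if not ratios[lower] < ratios[higher]:
            problems.append(f"{lower} ({ratios[lower]}) >= {higher} ({ratios[higher]})")
    return problems


def threshold_tib(capacity_tib: float, ratio: float) -> float:
    """Hypothetical helper: usage (TiB) at which an OSD of this size crosses `ratio`."""
    return capacity_tib * ratio


if __name__ == "__main__":
    # Placeholder values: Ceph's documented defaults, not this cluster's settings.
    defaults = {"nearfull": 0.85, "backfillfull": 0.90, "full": 0.95, "failsafe_full": 0.97}
    print(out_of_order(defaults))  # []
    # An OSD with CRUSH weight 7.277 (roughly its size in TiB) becomes backfillfull at:
    print(threshold_tib(7.277, defaults["backfillfull"]))
```

If the ratios are already in ascending order, the OSD_BACKFILLFULL warning should only clear once the OSD's actual usage drops below the backfillfull threshold, or the ratio is raised above that usage.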

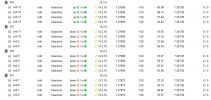

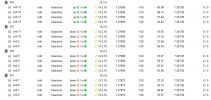

The OSDs seem to be unresponsive: if I shut down the one host whose OSDs are up, Ceph still shows those OSDs as up, even though the monitor and manager show the host going down.
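One possible explanation for OSDs staying "up" after their host is powered off: a monitor normally marks an OSD down when OSDs on other hosts report it as failed (`mon_osd_min_down_reporters`, default 2, counted per host by default), or, when nobody reports it, only after `mon_osd_report_timeout` (default 900 s) has passed without the OSD reporting in. If the OSDs do not manage to tell the monitor themselves on shutdown and every other OSD is already down, there is nobody left to report them. The Python sketch below is a deliberately simplified model of that rule under those assumptions; `MonitorPolicy` and `should_mark_down` are hypothetical names, not Ceph code.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass(frozen=True)
class MonitorPolicy:
    """Simplified stand-ins for mon_osd_min_down_reporters and
    mon_osd_report_timeout (documented defaults: 2 and 900 seconds)."""
    min_down_reporters: int = 2
    report_timeout_s: float = 900.0


def should_mark_down(reporter_hosts: Set[str],
                     seconds_since_last_report: float,
                     policy: Optional[MonitorPolicy] = None) -> bool:
    """Hypothetical model: True if the monitor would mark the OSD down.

    reporter_hosts: distinct hosts whose OSDs reported this OSD as failed.
    seconds_since_last_report: time since the OSD itself last reported in.
    """
    policy = policy or MonitorPolicy()
    if len(reporter_hosts) >= policy.min_down_reporters:
        return True
    return seconds_since_last_report >= policy.report_timeout_s


if __name__ == "__main__":
    # Healthy cluster: OSDs on two other hosts report the failure quickly.
    print(should_mark_down({"W2", "W3"}, 30))  # True
    # Only one host's OSDs were up and that host is off: no peers left to report.
    print(should_mark_down(set(), 30))  # False
    print(should_mark_down(set(), 900))  # True
```

In this model, waiting roughly 15 minutes after the shutdown should eventually flip the OSDs to down even without peer reports.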

This is the ceph.conf:

```ini
[global]
auth client required = cephx
auth cluster required = cephx
auth service required = cephx
cluster network = 10.10.10.0/24
fsid = 3d6cfbaa-c7ac-447a-843d-9795f9ab4276
mon allow pool delete = true
osd journal size = 5120
osd pool default min size = 2
osd pool default size = 3
public network = 10.10.10.0/24

[client]
keyring = /etc/pve/priv/$cluster.$name.keyring

[osd]
osd max scrubs = 1
osd scrub begin hour = 19
osd scrub end hour = 5

[mon.W2]
host = W2
mon addr = 10.10.10.82:6789

[mon.W4]
host = W4
mon addr = 10.10.10.84:6789

[mon.W1]
host = W1
mon addr = 10.10.10.81:6789

[mon.W3]
host = W3
mon addr = 10.10.10.83:6789
```
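Two properties of this ceph.conf are worth checking when the monitors and OSDs disagree: how many monitors are needed for quorum, and whether OSD replication traffic shares a network with client traffic. A monitor quorum requires a strict majority, so 4 monitors need 3 in quorum and, like 3 monitors, tolerate only 1 failure. The following is a small Python sketch that reads the configuration with the standard-library `configparser` (interpolation disabled so `$cluster.$name` stays literal); the configuration is pasted inline, and `summarise` is a hypothetical helper name.

```python
import configparser

# The ceph.conf from the post, pasted inline so the sketch is self-contained.
CEPH_CONF = """\
[global]
auth client required = cephx
auth cluster required = cephx
auth service required = cephx
cluster network = 10.10.10.0/24
fsid = 3d6cfbaa-c7ac-447a-843d-9795f9ab4276
mon allow pool delete = true
osd journal size = 5120
osd pool default min size = 2
osd pool default size = 3
public network = 10.10.10.0/24

[client]
keyring = /etc/pve/priv/$cluster.$name.keyring

[osd]
osd max scrubs = 1
osd scrub begin hour = 19
osd scrub end hour = 5

[mon.W2]
host = W2
mon addr = 10.10.10.82:6789

[mon.W4]
host = W4
mon addr = 10.10.10.84:6789

[mon.W1]
host = W1
mon addr = 10.10.10.81:6789

[mon.W3]
host = W3
mon addr = 10.10.10.83:6789
"""


def summarise(text: str) -> dict:
    """Hypothetical helper: list monitors, quorum size and network layout."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    mons = {s[len("mon."):]: parser[s]["mon addr"]
            for s in parser.sections() if s.startswith("mon.")}
    quorum = len(mons) // 2 + 1  # strict majority of monitors
    glob = parser["global"]
    return {
        "mons": mons,
        "quorum_size": quorum,
        "tolerated_mon_failures": len(mons) - quorum,
        # True means replication/heartbeat traffic shares the client network.
        "shared_network": glob["public network"] == glob["cluster network"],
    }


if __name__ == "__main__":
    print(summarise(CEPH_CONF))
```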

The CRUSH map:

```
# begin crush map
tunable choose_local_tries 0
tunable choose_local_fallback_tries 0
tunable choose_total_tries 50
tunable chooseleaf_descend_once 1
tunable chooseleaf_vary_r 1
tunable chooseleaf_stable 1
tunable straw_calc_version 1
tunable allowed_bucket_algs 54

# devices
device 0 osd.0 class hdd
device 1 osd.1 class hdd
device 2 osd.2 class hdd
device 3 osd.3 class hdd
device 4 osd.4 class hdd
device 5 osd.5 class hdd
device 6 osd.6 class hdd
device 7 osd.7 class hdd
device 8 osd.8 class hdd
device 9 osd.9 class hdd
device 10 osd.10 class hdd
device 11 osd.11 class hdd
device 12 osd.12 class hdd
device 13 osd.13 class hdd
device 14 osd.14 class hdd
device 15 osd.15 class hdd

# types
type 0 osd
type 1 host
type 2 chassis
type 3 rack
type 4 row
type 5 pdu
type 6 pod
type 7 room
type 8 datacenter
type 9 region
type 10 root

# buckets
host W2 {
    id -5 # do not change unnecessarily
    id -6 class hdd # do not change unnecessarily
    # weight 29.108
    alg straw2
    hash 0 # rjenkins1
    item osd.2 weight 7.277
    item osd.3 weight 7.277
    item osd.8 weight 7.277
    item osd.9 weight 7.277
}
host W3 {
    id -7 # do not change unnecessarily
    id -8 class hdd # do not change unnecessarily
    # weight 29.108
    alg straw2
    hash 0 # rjenkins1
    item osd.4 weight 7.277
    item osd.5 weight 7.277
    item osd.10 weight 7.277
    item osd.11 weight 7.277
}
host W4 {
    id -9 # do not change unnecessarily
    id -10 class hdd # do not change unnecessarily
    # weight 29.108
    alg straw2
    hash 0 # rjenkins1
    item osd.12 weight 7.277
    item osd.13 weight 7.277
    item osd.14 weight 7.277
    item osd.15 weight 7.277
}
host W1 {
    id -3 # do not change unnecessarily
    id -4 class hdd # do not change unnecessarily
    # weight 29.107
    alg straw2
    hash 0 # rjenkins1
    item osd.0 weight 7.277
    item osd.1 weight 7.277
    item osd.6 weight 7.277
    item osd.7 weight 7.277
}
root default {
    id -1 # do not change unnecessarily
    id -2 class hdd # do not change unnecessarily
    # weight 116.428
    alg straw2
    hash 0 # rjenkins1
    item W2 weight 29.107
    item W3 weight 29.107
    item W4 weight 29.107
    item W1 weight 29.107
}

# rules
rule replicated_rule {
    id 0
    type replicated
    min_size 1
    max_size 10
    step take default
    step chooseleaf firstn 0 type host
    step emit
}

# end crush map
```
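With `step chooseleaf firstn 0 type host` and `osd pool default size = 3`, every placement group keeps its 3 copies on 3 different hosts, and with `osd pool default min size = 2` a PG stays active only while at least 2 of those copies are up. The Python sketch below illustrates what that means when hosts' OSDs are marked down. It assumes the pools use these defaults and idealizes CRUSH by treating every 3-host combination as an equally likely placement (real CRUSH spreads PGs by hashing, so the fractions are approximate); `active_fraction` is a hypothetical helper.

```python
from itertools import combinations
from typing import Iterable, Set

HOSTS = ["W1", "W2", "W3", "W4"]
SIZE = 3      # osd pool default size
MIN_SIZE = 2  # osd pool default min size


def active_fraction(up_hosts: Set[str], hosts: Iterable[str] = HOSTS,
                    size: int = SIZE, min_size: int = MIN_SIZE) -> float:
    """Hypothetical helper: share of host placements that still reach min_size.

    Each placement is one combination of `size` distinct hosts, mirroring a
    replicated rule that chooses leaves under separate host buckets.
    """
    placements = list(combinations(sorted(hosts), size))
    active = sum(1 for p in placements
                 if sum(h in up_hosts for h in p) >= min_size)
    return active / len(placements)


if __name__ == "__main__":
    for up in ({"W1", "W2", "W3", "W4"}, {"W1", "W2", "W3"}, {"W1", "W2"}, {"W1"}):
        print(sorted(up), active_fraction(up))
```

Under these assumptions, one host down leaves every PG active, two hosts down leave half of the placements active, and with only one host's OSDs up (the situation described above) no PG can reach min_size, so client I/O to those pools would block until more OSDs come back up.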

Any advice would be appreciated.