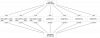

I've just deployed a topology that looks something like the attached diagram. I'm getting what I believe is slow throughput to Ceph.

This is a new cluster so I can destroy everything and redeploy.

The rados benchmarks show writes at around 160 MB/s. The OSDs are all enterprise 10k SAS drives. They are physically connected to a RAID controller, as that is how the chassis is built (UCS hardware), but the disks are set to JBOD (no RAID enabled).

Code:

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 192.168.0.4/24

fsid = REDACTED

mon_allow_pool_delete = true

mon_host = 10.237.195.4 10.237.195.6 10.237.195.5

osd_pool_default_min_size = 2

osd_pool_default_size = 3

public_network = 10.237.195.4/24

Code:

root@proxmox-ceph-2:~# rados bench 60 write -p ceph

hints = 1

Maintaining 16 concurrent writes of 4194304 bytes to objects of size 4194304 for up to 60 seconds or 0 objects

Object prefix: benchmark_data_proxmox-ceph-2_42174

sec Cur ops started finished avg MB/s cur MB/s last lat(s) avg lat(s)

0 0 0 0 0 0 - 0

1 16 49 33 131.992 132 0.27763 0.364652

2 16 95 79 157.986 184 0.877338 0.348108

3 16 134 118 157.318 156 0.0923754 0.351161

4 16 176 160 159.984 168 1.07937 0.368926

5 16 213 197 157.586 148 0.0747114 0.381018

6 16 252 236 157.319 156 0.258982 0.385571

7 16 293 277 158.271 164 0.258472 0.381039

8 16 345 329 164.484 208 0.105826 0.373999

9 16 388 372 165.317 172 0.309857 0.374067

10 16 429 413 165.183 164 1.23219 0.375987

11 16 473 457 166.165 176 0.64705 0.373996

12 16 510 494 164.649 148 0.33246 0.374126

13 16 552 536 164.905 168 0.663583 0.37674

14 16 587 571 163.125 140 0.280276 0.37916

15 16 628 612 163.182 164 0.0547644 0.380405

16 16 671 655 163.732 172 0.250085 0.3795

17 16 719 703 165.394 192 0.245245 0.381012

18 16 759 743 165.093 160 0.402753 0.377789

19 16 806 790 166.297 188 0.207021 0.376568

2019-08-14 16:54:28.356330 min lat: 0.0371012 max lat: 1.81811 avg lat: 0.376707

sec Cur ops started finished avg MB/s cur MB/s last lat(s) avg lat(s)

20 16 853 837 167.381 188 0.0746407 0.376707

21 16 895 879 167.409 168 0.0556193 0.378463

22 16 945 929 168.89 200 0.445773 0.374709

23 16 982 966 167.981 148 0.473452 0.3767

24 16 1015 999 166.481 132 0.424303 0.377674

25 16 1061 1045 167.181 184 0.253189 0.376418

26 16 1100 1084 166.75 156 1.37439 0.377822

27 16 1155 1139 168.721 220 0.973974 0.375853

28 16 1196 1180 168.551 164 0.202659 0.374766

29 16 1239 1223 168.67 172 0.0546775 0.376256

30 16 1284 1268 169.046 180 0.928301 0.375133

31 16 1326 1310 169.012 168 0.20854 0.374534

32 16 1363 1347 168.355 148 0.465132 0.375474

33 16 1401 1385 167.859 152 1.67556 0.376472

34 16 1435 1419 166.921 136 1.55922 0.378086

35 16 1472 1456 166.38 148 0.144762 0.380992

36 16 1514 1498 166.424 168 0.163422 0.379808

37 16 1554 1538 166.25 160 0.558226 0.381163

38 16 1606 1590 167.348 208 0.169015 0.378813

39 16 1644 1628 166.954 152 0.911788 0.37994

2019-08-14 16:54:48.358881 min lat: 0.0371012 max lat: 1.81811 avg lat: 0.379431

sec Cur ops started finished avg MB/s cur MB/s last lat(s) avg lat(s)

40 16 1690 1674 167.38 184 0.184591 0.379431

41 16 1726 1710 166.809 144 0.0904877 0.379406

42 16 1775 1759 167.504 196 1.46294 0.378691

43 16 1812 1796 167.05 148 1.32832 0.379431

44 16 1853 1837 166.98 164 0.0462176 0.379269

45 16 1889 1873 166.469 144 0.0446289 0.38064

46 16 1933 1917 166.676 176 0.290733 0.380603

47 16 1980 1964 167.129 188 1.59294 0.37949

48 16 2025 2009 167.396 180 0.347652 0.379555

49 16 2072 2056 167.816 188 1.12357 0.378849

50 16 2117 2101 168.06 180 0.841758 0.378656

51 16 2166 2150 168.607 196 0.334893 0.377469

52 16 2209 2193 168.672 172 0.237112 0.377007

53 16 2248 2232 168.432 156 0.368773 0.37749

54 16 2299 2283 169.09 204 0.712845 0.376165

55 16 2340 2324 168.997 164 0.0980192 0.3767

56 16 2382 2366 168.979 168 0.186426 0.37722

57 16 2430 2414 169.382 192 0.305417 0.375834

58 16 2469 2453 169.151 156 1.29456 0.375809

59 16 2503 2487 168.589 136 0.334266 0.376758

2019-08-14 16:55:08.361550 min lat: 0.0371012 max lat: 1.81811 avg lat: 0.376791

sec Cur ops started finished avg MB/s cur MB/s last lat(s) avg lat(s)

60 16 2553 2537 169.112 200 0.204253 0.376791

Total time run: 60.3843

Total writes made: 2554

Write size: 4194304

Object size: 4194304

Bandwidth (MB/sec): 169.183

Stddev Bandwidth: 20.7139

Max bandwidth (MB/sec): 220

Min bandwidth (MB/sec): 132

Average IOPS: 42

Stddev IOPS: 5.17848

Max IOPS: 55

Min IOPS: 33

Average Latency(s): 0.377725

Stddev Latency(s): 0.345649

Max latency(s): 1.81811

Min latency(s): 0.0371012

Cleaning up (deleting benchmark objects)

Removed 2554 objects

Clean up completed and total clean up time :2.36016
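For anyone comparing these numbers against link speed or disk capability, here is a rough sanity check of the summary figures. It is only a sketch: the input values are copied from the output above and from `osd_pool_default_size` in the config, while the helper names are made up for illustration. The backend estimate assumes every client write is stored once per replica and ignores any journal/WAL overhead, so treat it as a lower bound rather than a measurement.

```python
# Figures copied from the rados bench summary above.
TOTAL_TIME_S = 60.3843
TOTAL_WRITES = 2554
WRITE_SIZE_BYTES = 4194304
POOL_SIZE = 3  # osd_pool_default_size from the posted ceph.conf

MIB = 1024 * 1024


def client_bandwidth_mib_s(writes, size_bytes, seconds):
    """Client-side throughput in MiB/s (hypothetical helper)."""
    return writes * size_bytes / MIB / seconds


def to_megabits_per_s(mib_per_s):
    """Convert MiB/s to decimal megabits per second (hypothetical helper)."""
    return mib_per_s * MIB * 8 / 1_000_000


bw = client_bandwidth_mib_s(TOTAL_WRITES, WRITE_SIZE_BYTES, TOTAL_TIME_S)
iops = TOTAL_WRITES / TOTAL_TIME_S
mbit = to_megabits_per_s(bw)
# Assumption: each object is written once per replica, nothing else counted.
backend_bw = bw * POOL_SIZE

print(f"client bandwidth : {bw:.3f} MiB/s")
print(f"client IOPS      : {iops:.1f}")
print(f"on the wire      : {mbit:.0f} Mbit/s")
print(f"replicated writes: {backend_bw:.1f} MiB/s across all OSDs (estimate)")
```

The computed bandwidth lines up with the "Bandwidth (MB/sec)" line reported by the tool, which suggests that figure is in units of 1024 × 1024 bytes. It also works out to more than 1 Gbit/s of client traffic, so a single 1 GbE link is probably not what is capping this test.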