[SOLVED] CEPH how to delete dead monitor?

- Thread starter PanWaclaw

- Start date


Hi,

Here is the Ceph documentation for this:

https://docs.ceph.com/docs/master/rados/operations/add-or-rm-mons/


# ceph mon remove mars0

mon.mars0 does not exist or has already been removed

Code:

# ceph -s

cluster:

id: 39a2cdc7-9182-45ab-b0b1-5a2aae3be5b8

health: HEALTH_OK

services:

mon: 4 daemons, quorum mon0,mon3,mon2,mon1 (age 18h)

Ceph is OK, but the Proxmox GUI still shows the old, unused monitor!

Code:

# ceph mon dump

dumped monmap epoch 6

epoch 6

fsid 39a2cdc7-9182-45ab-b0b1-5a2aae3be5b8

last_changed 2019-12-05 02:32:33.029515

created 2019-12-05 02:01:11.347468

min_mon_release 14 (nautilus)

0: [v2:192.168.0.100:3300/0,v1:192.168.0.100:6789/0] mon.mon0

1: [v2:192.168.0.103:3300/0,v1:192.168.0.103:6789/0] mon.mon3

2: [v2:192.168.0.102:3300/0,v1:192.168.0.102:6789/0] mon.mon2

3: [v2:192.168.0.101:3300/0,v1:192.168.0.101:6789/0] mon.mon1

# ls /etc/systemd/system/ceph-mon.target.wants/

ceph-mon@mars0.service ceph-mon@mon0.service

It was solved by deleting the stale service link on all nodes:

rm /etc/systemd/system/ceph-mon.target.wants/ceph-mon@mars*

Thanks!
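Before running an `rm` with a wildcard, it may help to list which unit links are actually stale. The following is a minimal sketch, not an official Proxmox or Ceph tool: `stale_mon_units` is a hypothetical helper name, and it assumes the links are named `ceph-mon@<name>.service` as in the listing above. The monitor names to keep are passed by hand, for example the ones shown by `ceph mon dump`.

```shell
# Sketch: print ceph-mon unit links whose instance name is NOT a known monitor.
# Usage: stale_mon_units WANTS_DIR MON_NAME...
# (hypothetical helper; it only prints paths and never deletes anything)
stale_mon_units() {
    local wants_dir="$1"
    shift
    local link inst name known
    for link in "$wants_dir"/ceph-mon@*.service; do
        # Skip the unexpanded pattern when nothing matches; accept dangling links.
        [ -e "$link" ] || [ -L "$link" ] || continue
        inst=${link##*/ceph-mon@}   # drop the path and the "ceph-mon@" prefix
        inst=${inst%.service}       # drop the ".service" suffix
        known=no
        for name in "$@"; do
            if [ "$name" = "$inst" ]; then
                known=yes
            fi
        done
        if [ "$known" = no ]; then
            printf '%s\n' "$link"
        fi
    done
}
```

With the monitors from this cluster, `stale_mon_units /etc/systemd/system/ceph-mon.target.wants mon0 mon1 mon2 mon3` should print only the `ceph-mon@mars0.service` link on the node shown above. Running `systemctl disable ceph-mon@mars0.service` is the usual systemd way to drop such a link, as an alternative to a manual `rm`.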

I've run into the same problem on a cluster that has been upgraded from PVE 5.x all the way to 7.3 (Ceph Pacific).

Removing mon.0 failed. I had to remove it manually from the command line and delete the systemd symlink.

The other monitors (mon.1 and mon.2) were removed without problems.

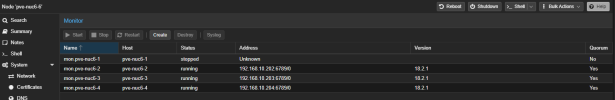

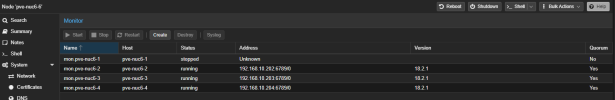

But now I still have mon.0 in the GUI, with status and address "Unknown".

Strangely, in /etc/ceph/ceph.conf I have:


Code:

[mon.myhost01]

public_addr = 10.20.40.134

[mon.myhost02]

host = myhost02

mon_addr = 10.20.40.135

mon_data_avail_crit = 15

mon_data_avail_warn = 20

public_addr = 10.20.40.135

[mon.myhost03]

host = myhost03

mon_addr = 10.20.40.136

mon_data_avail_crit = 15

mon_data_avail_warn = 20

public_addr = 10.20.40.136

How can I remove the stale mon.0 from the GUI?

Thank you

> Please do not bump threads that are 3 years old in the future.

Sorry, I thought it was a good idea, because googling sent me here.

> The systemd unit is gone?

Yes, it shows only the newly created monitor (I destroyed and recreated it to migrate to RocksDB):

Code:

ls -al /etc/systemd/system/ceph-mon.target.wants/

total 8

drwxr-xr-x 2 root root 4096 Dec 20 12:03 .

drwxr-xr-x 16 root root 4096 Dec 20 12:03 ..

lrwxrwxrwx 1 root root 37 Dec 20 12:03 ceph-mon@myhost01.service -> /lib/systemd/system/ceph-mon@.service

> It is not present anymore in the ceph.conf file or the monmap?

Not present in ceph.conf

Code:

cat /etc/ceph/ceph.conf

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 10.20.40.0/24

filestore_xattr_use_omap = true

fsid = f9cf1730-500b-42ab-896f-909692683dc6

mon_allow_pool_delete = true

mon_host = 10.20.40.135 10.20.40.136 10.20.40.134

ms_bind_ipv4 = true

osd_journal_size = 5120

osd_pool_default_min_size = 1

public_network = 10.20.40.0/24

[client]

keyring = /etc/pve/priv/$cluster.$name.keyring

[mon.myhost01]

public_addr = 10.20.40.134

[mon.myhost02]

host = myhost02

mon_addr = 10.20.40.135

mon_data_avail_crit = 15

mon_data_avail_warn = 20

public_addr = 10.20.40.135

[mon.myhost03]

host = myhost03

mon_addr = 10.20.40.136

mon_data_avail_crit = 15

mon_data_avail_warn = 20

public_addr = 10.20.40.136

I don't know anything about the monmap, sorry...
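For readers unsure how to check both places: the monmap is what `ceph mon dump` prints (see the first post in this thread), and ceph.conf lists monitors as `[mon.<name>]` sections. Below is a small sketch for pulling the monitor names out of each so they can be compared. The function names `monmap_names` and `conf_mon_names` are made up for illustration, and the patterns assume the output and file layout shown in this thread.

```shell
# Sketch: extract monitor names so monmap and ceph.conf can be compared.
# Both helpers are hypothetical and only read from stdin.

# Reads `ceph mon dump` output, e.g. "0: [v2:...,v1:...] mon.mon0" -> "mon0"
monmap_names() {
    sed -n 's/^[0-9][0-9]*: .* mon\.\(.*\)$/\1/p'
}

# Reads a ceph.conf, e.g. "[mon.myhost01]" -> "myhost01"
conf_mon_names() {
    sed -n 's/^\[mon\.\([^]]*\)\].*$/\1/p'
}
```

Typical use would be `ceph mon dump | monmap_names` and `conf_mon_names < /etc/ceph/ceph.conf`. A name that appears in only one of the two lists is a candidate for a leftover entry.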

> What about the /var/lib/ceph/mon/ directory?

Code:

ls -la /var/lib/ceph/mon/

total 16

drwxr-xr-x 4 ceph ceph 4096 Dec 20 12:03 .

drwxr-x--- 13 ceph ceph 4096 Jul 23 10:04 ..

drwxr-xr-x 3 ceph ceph 4096 Oct 25 11:40 ceph-0

drwxr-xr-x 3 ceph ceph 4096 Dec 20 15:39 ceph-myhost01

Maybe I have to delete this 'ceph-0' directory?

Yeah, that looks like a remnant. Try removing it, or move it somewhere else first. I expect the entry in the UI will be gone after that.

SOLVED: removing the directory cleaned up the GUI.
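For anyone repeating this, the "move it somewhere else first" advice can be made a little more defensive. This is only a sketch under assumptions: `quarantine_mon_dir` is a hypothetical helper, the `.stale` suffix is an arbitrary choice, and it does not check whether a monitor daemon is still using the directory, so that has to be verified separately.

```shell
# Sketch: move a leftover monitor data directory aside instead of deleting it.
# Usage: quarantine_mon_dir MON_DIR BACKUP_DIR
# (hypothetical helper; prints the new location on success)
quarantine_mon_dir() {
    local src="$1" backup="$2" dest
    dest="$backup/$(basename "$src").stale"
    if [ ! -d "$src" ]; then
        echo "not a directory: $src" >&2
        return 1
    fi
    if [ -e "$dest" ]; then
        echo "refusing to overwrite: $dest" >&2
        return 1
    fi
    # Create the backup location, move the directory, report where it went.
    mkdir -p "$backup" && mv "$src" "$dest" && printf '%s\n' "$dest"
}
```

For example, `quarantine_mon_dir /var/lib/ceph/mon/ceph-0 /root/ceph-mon-backup` would leave the data recoverable if the directory turns out to be needed after all. The plain command that was actually used in this thread is the one quoted next.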

Code:

mv /var/lib/ceph/mon/ceph-0 ~

Hi there,

I'm having the same issue: in the Proxmox UI I cannot delete a monitor that I have already removed (dead node).


Code:

root@pve-nuc6-6:~# ceph -s

cluster:

id: 6409e487-7478-4a93-abbb-12050760e67a

health: HEALTH_OK

services:

mon: 3 daemons, quorum pve-nuc6-2,pve-nuc6-3,pve-nuc6-4 (age 29m)

mgr: pve-nuc6-4(active, since 29h), standbys: pve-nuc6-5

mds: 1/1 daemons up, 1 standby

osd: 5 osds: 5 up (since 45m), 5 in (since 35m)

data:

volumes: 1/1 healthy

pools: 4 pools, 97 pgs

objects: 11.55k objects, 44 GiB

usage: 138 GiB used, 2.0 TiB / 2.1 TiB avail

pgs: 97 active+clean

io:

client: 102 KiB/s wr, 0 op/s rd, 18 op/s wr

Ceph seems fine. I force-removed the OSD and I'm about to drop the node from the Proxmox cluster to reinstall it completely.

When I try to destroy, it says "No route to host" which is normal because the machine is completely dead and won't come back.

What can I do here?

Thanks,

D.