Hello

Yesterday I replaced 4 x 1 TB disks with 4 x 2 TB disks (one replacement per node). After 24 hours of rebalancing, Ceph seems stuck at 99.81% and the cluster is still in a warning state.

Can you help me resolve these errors?

#------------------

ceph status

#------------------

cluster:

id: e7fc1497-5889-4aba-abc7-e0e1115d70ef

health: HEALTH_WARN

1 nearfull osd(s)

Low space hindering backfill (add storage if this doesn't resolve itself): 5 pgs backfill_toofull

Degraded data redundancy: 5554/1885612 objects degraded (0.295%), 5 pgs degraded, 5 pgs undersized

2 pool(s) nearfull

services:

mon: 4 daemons, quorum pve-01,pve-02,pve-03,pve-04 (age 22h)

mgr: pve-01(active, since 22h), standbys: pve-02, pve-04, pve-03

osd: 23 osds: 23 up (since 21h), 23 in (since 21h); 8 remapped pgs

data:

pools: 2 pools, 385 pgs

objects: 471.40k objects, 1.8 TiB

usage: 6.9 TiB used, 9.6 TiB / 16 TiB avail

pgs: 5554/1885612 objects degraded (0.295%)

4454/1885612 objects misplaced (0.236%)

377 active+clean

5 active+undersized+degraded+remapped+backfill_toofull

2 active+remapped+backfilling

1 active+remapped+backfill_wait

io:

client: 783 KiB/s wr, 0 op/s rd, 51 op/s wr

recovery: 124 MiB/s, 32 objects/s

#------------------

ceph osd df tree

#------------------

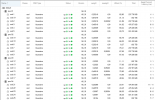

ID CLASS WEIGHT REWEIGHT SIZE RAW USE DATA OMAP META AVAIL %USE VAR PGS STATUS TYPE NAME

-1 11.69846 - 16 TiB 6.9 TiB 6.8 TiB 167 MiB 23 GiB 9.6 TiB 41.53 1.00 - root default

-3 2.73254 - 4.1 TiB 1.7 TiB 1.7 TiB 53 MiB 4.7 GiB 2.4 TiB 41.58 1.00 - host pve-01

1 ssd 0.23219 1.00000 238 GiB 124 GiB 124 GiB 2.9 MiB 293 MiB 113 GiB 52.28 1.26 24 up osd.1

2 ssd 0.23219 0.90002 238 GiB 185 GiB 185 GiB 8.7 MiB 403 MiB 53 GiB 77.84 1.87 38 up osd.2

3 ssd 0.46519 1.00000 476 GiB 261 GiB 260 GiB 35 KiB 780 MiB 216 GiB 54.69 1.32 55 up osd.3

4 ssd 0.46509 1.00000 476 GiB 277 GiB 276 GiB 41 MiB 736 MiB 200 GiB 58.08 1.40 67 up osd.4

5 ssd 0.46509 1.00000 931 GiB 300 GiB 299 GiB 67 KiB 1.1 GiB 631 GiB 32.27 0.78 69 up osd.5

19 ssd 0.87279 1.00000 1.8 TiB 609 GiB 607 GiB 36 KiB 1.4 GiB 1.2 TiB 32.67 0.79 132 up osd.19

-5 2.53595 - 4.1 TiB 1.7 TiB 1.7 TiB 44 MiB 5.7 GiB 2.4 TiB 41.68 1.00 - host pve-02

6 ssd 0.23219 1.00000 1.8 TiB 138 GiB 137 GiB 6 KiB 1.1 GiB 1.7 TiB 7.42 0.18 30 up osd.6

7 ssd 0.23219 1.00000 238 GiB 173 GiB 172 GiB 5.1 MiB 541 MiB 65 GiB 72.71 1.75 43 up osd.7

8 ssd 0.23219 0.95001 238 GiB 171 GiB 170 GiB 2.5 MiB 509 MiB 67 GiB 71.85 1.73 40 up osd.8

9 ssd 0.46509 1.00000 476 GiB 344 GiB 343 GiB 5.0 MiB 695 MiB 132 GiB 72.24 1.74 69 up osd.9

10 ssd 0.46509 1.00000 476 GiB 298 GiB 298 GiB 7.6 MiB 736 MiB 178 GiB 62.63 1.51 61 up osd.10

18 ssd 0.90919 1.00000 931 GiB 635 GiB 633 GiB 23 MiB 2.2 GiB 296 GiB 68.21 1.64 142 up osd.18

-7 2.09248 - 4.1 TiB 1.7 TiB 1.7 TiB 47 MiB 5.3 GiB 2.4 TiB 41.69 1.00 - host pve-03

12 ssd 0.23230 1.00000 1.8 TiB 185 GiB 184 GiB 7 KiB 1.3 GiB 1.6 TiB 9.93 0.24 43 up osd.12

13 ssd 0.23230 1.00000 238 GiB 180 GiB 180 GiB 3.6 MiB 370 MiB 57 GiB 75.88 1.83 39 up osd.13

14 ssd 0.23230 0.95001 238 GiB 180 GiB 179 GiB 3.8 MiB 644 MiB 58 GiB 75.68 1.82 34 up osd.14

15 ssd 0.46519 0.95001 476 GiB 372 GiB 371 GiB 29 MiB 678 MiB 104 GiB 78.11 1.88 77 up osd.15

16 ssd 0.46519 0.90002 476 GiB 411 GiB 410 GiB 11 MiB 877 MiB 65 GiB 86.26 2.08 92 up osd.16

17 ssd 0.46519 1.00000 931 GiB 432 GiB 430 GiB 54 KiB 1.6 GiB 499 GiB 46.38 1.12 100 up osd.17

-13 4.33748 - 4.1 TiB 1.7 TiB 1.7 TiB 23 MiB 7.1 GiB 2.4 TiB 41.17 0.99 - host pve-04

21 ssd 0.23289 1.00000 477 GiB 152 GiB 151 GiB 0 B 938 MiB 325 GiB 31.77 0.76 30 up osd.21

22 ssd 0.46579 1.00000 477 GiB 206 GiB 205 GiB 42 KiB 1.2 GiB 271 GiB 43.23 1.04 48 up osd.22

23 ssd 0.90970 0.95001 477 GiB 432 GiB 431 GiB 73 KiB 1.3 GiB 45 GiB 90.55 2.18 91 up osd.23

24 ssd 0.90970 1.00000 932 GiB 383 GiB 381 GiB 23 MiB 1.7 GiB 549 GiB 41.10 0.99 80 up osd.24

25 ssd 1.81940 1.00000 1.8 TiB 567 GiB 565 GiB 36 KiB 2.0 GiB 1.3 TiB 30.44 0.73 133 up osd.25

TOTAL 16 TiB 6.9 TiB 6.8 TiB 167 MiB 23 GiB 9.6 TiB 41.53

MIN/MAX VAR: 0.18/2.18 STDDEV: 26.53
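
As a reading aid for the table above: by default Ceph sets an OSD's CRUSH weight to roughly its capacity in TiB, so comparing the WEIGHT and SIZE columns row by row can show where the two diverge. The sketch below does that arithmetic for a subset of rows copied from the output; the function name `weight_mismatches` and the 25% tolerance are illustrative assumptions, not Ceph tooling, and the 1.8 TiB sizes are the rounded values printed by Ceph.

```python
# Subset of rows copied from the `ceph osd df tree` output above:
# (OSD name, CRUSH weight, size in GiB). 1.8 TiB is the rounded value Ceph prints.
ROWS = [
    ("osd.1", 0.23219, 238),
    ("osd.5", 0.46509, 931),
    ("osd.6", 0.23219, 1.8 * 1024),
    ("osd.12", 0.23230, 1.8 * 1024),
    ("osd.19", 0.87279, 1.8 * 1024),
    ("osd.23", 0.90970, 477),
    ("osd.25", 1.81940, 1.8 * 1024),
]


def weight_mismatches(rows, tolerance=0.25):
    """Return (name, weight, size_tib, ratio) for OSDs whose CRUSH weight
    differs from their size in TiB by more than `tolerance` (hypothetical
    threshold chosen for illustration)."""
    flagged = []
    for name, weight, size_gib in rows:
        size_tib = size_gib / 1024
        ratio = weight / size_tib  # close to 1.0 when weight matches capacity
        if abs(ratio - 1.0) > tolerance:
            flagged.append((name, weight, round(size_tib, 2), round(ratio, 2)))
    return flagged


if __name__ == "__main__":
    for name, weight, size_tib, ratio in weight_mismatches(ROWS):
        print(f"{name}: weight={weight} size={size_tib} TiB ratio={ratio}")
```

A ratio well below 1 means the OSD is weighted as smaller than the disk behind it, and a ratio well above 1 means it is weighted as larger; whether that explains the `backfill_toofull` PGs here is not something this sketch can confirm.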

#------------------

ceph osd pool get pool_vm size

#------------------

size: 4

#------------------

ceph osd pool get pool_vm min_size

#------------------

min_size: 2

Thank you in advance.

