Hi, after a recent upgrade to Proxmox 7.2-4, Ceph is reporting a health error.

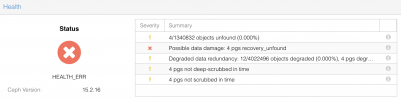

Observed on Datacenter > Ceph > Health.

# ceph health detail

HEALTH_ERR 4/1340831 objects unfound (0.000%); Possible data damage: 4 pgs recovery_unfound; Degraded data redundancy: 12/4022493 objects degraded (0.000%), 4 pgs degraded; 4 pgs not deep-scrubbed in time; 4 pgs not scrubbed in time

[WRN] OBJECT_UNFOUND: 4/1340831 objects unfound (0.000%)

pg 5.12a has 1 unfound objects

pg 5.18b has 1 unfound objects

pg 5.1b2 has 1 unfound objects

pg 5.1e1 has 1 unfound objects

[ERR] PG_DAMAGED: Possible data damage: 4 pgs recovery_unfound

pg 5.12a is active+recovery_unfound+degraded, acting [9,27,47], 1 unfound

pg 5.18b is active+recovery_unfound+degraded, acting [9,47,61], 1 unfound

pg 5.1b2 is active+recovery_unfound+degraded, acting [41,59,9], 1 unfound

pg 5.1e1 is active+recovery_unfound+degraded, acting [45,31,59], 1 unfound

[WRN] PG_DEGRADED: Degraded data redundancy: 12/4022493 objects degraded (0.000%), 4 pgs degraded

pg 5.12a is active+recovery_unfound+degraded, acting [9,27,47], 1 unfound

pg 5.18b is active+recovery_unfound+degraded, acting [9,47,61], 1 unfound

pg 5.1b2 is active+recovery_unfound+degraded, acting [41,59,9], 1 unfound

pg 5.1e1 is active+recovery_unfound+degraded, acting [45,31,59], 1 unfound

[WRN] PG_NOT_DEEP_SCRUBBED: 4 pgs not deep-scrubbed in time

pg 5.12a not deep-scrubbed since 2022-04-15T02:41:18.066401+0800

pg 5.18b not deep-scrubbed since 2022-04-15T13:00:11.502126+0800

pg 5.1b2 not deep-scrubbed since 2022-04-17T21:35:10.782837+0800

pg 5.1e1 not deep-scrubbed since 2022-04-14T17:39:09.773056+0800

[WRN] PG_NOT_SCRUBBED: 4 pgs not scrubbed in time

pg 5.12a not scrubbed since 2022-04-18T13:13:00.401312+0800

pg 5.18b not scrubbed since 2022-04-17T23:09:40.972708+0800

pg 5.1b2 not scrubbed since 2022-04-17T21:35:10.782837+0800

pg 5.1e1 not scrubbed since 2022-04-18T13:18:44.533226+0800
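
For reference, here is a minimal sketch of the read-only checks that could be run against the four PGs listed above. It assumes the standard `ceph pg <pgid> list_unfound` and `ceph pg <pgid> query` subcommands are available on this Ceph release. The `print_pg_checks` helper is a hypothetical name, and the script only prints the commands instead of running them, so nothing on the cluster is changed.

```shell
#!/bin/sh
# PG IDs copied from the "ceph health detail" output above.
PGS="5.12a 5.18b 5.1b2 5.1e1"

# Hypothetical helper: print (do not run) read-only inspection
# commands for each affected PG.
print_pg_checks() {
    for pg in $PGS; do
        # Lists the objects this PG reports as unfound.
        echo "ceph pg $pg list_unfound"
        # Dumps the PG's peering and recovery state.
        echo "ceph pg $pg query"
    done
}

print_pg_checks
```

Each printed line can be pasted into a shell on a node with Ceph admin access. Both subcommands only report state and should not modify any data.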

The nodes and VMs all seem to be functioning well. Is there any cause for concern?

How can I troubleshoot this and, if possible, clear the error?

Any pointers are appreciated.

Thanks!

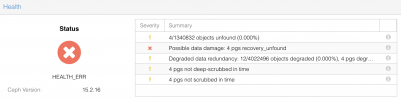

Observed on Datacenter > Ceph > Health.

# ceph health detail

HEALTH_ERR 4/1340831 objects unfound (0.000%); Possible data damage: 4 pgs recovery_unfound; Degraded data redundancy: 12/4022493 objects degraded (0.000%), 4 pgs degraded; 4 pgs not deep-scrubbed in time; 4 pgs not scrubbed in time

[WRN] OBJECT_UNFOUND: 4/1340831 objects unfound (0.000%)

pg 5.12a has 1 unfound objects

pg 5.18b has 1 unfound objects

pg 5.1b2 has 1 unfound objects

pg 5.1e1 has 1 unfound objects

[ERR] PG_DAMAGED: Possible data damage: 4 pgs recovery_unfound

pg 5.12a is active+recovery_unfound+degraded, acting [9,27,47], 1 unfound

pg 5.18b is active+recovery_unfound+degraded, acting [9,47,61], 1 unfound

pg 5.1b2 is active+recovery_unfound+degraded, acting [41,59,9], 1 unfound

pg 5.1e1 is active+recovery_unfound+degraded, acting [45,31,59], 1 unfound

[WRN] PG_DEGRADED: Degraded data redundancy: 12/4022493 objects degraded (0.000%), 4 pgs degraded

pg 5.12a is active+recovery_unfound+degraded, acting [9,27,47], 1 unfound

pg 5.18b is active+recovery_unfound+degraded, acting [9,47,61], 1 unfound

pg 5.1b2 is active+recovery_unfound+degraded, acting [41,59,9], 1 unfound

pg 5.1e1 is active+recovery_unfound+degraded, acting [45,31,59], 1 unfound

[WRN] PG_NOT_DEEP_SCRUBBED: 4 pgs not deep-scrubbed in time

pg 5.12a not deep-scrubbed since 2022-04-15T02:41:18.066401+0800

pg 5.18b not deep-scrubbed since 2022-04-15T13:00:11.502126+0800

pg 5.1b2 not deep-scrubbed since 2022-04-17T21:35:10.782837+0800

pg 5.1e1 not deep-scrubbed since 2022-04-14T17:39:09.773056+0800

[WRN] PG_NOT_SCRUBBED: 4 pgs not scrubbed in time

pg 5.12a not scrubbed since 2022-04-18T13:13:00.401312+0800

pg 5.18b not scrubbed since 2022-04-17T23:09:40.972708+0800

pg 5.1b2 not scrubbed since 2022-04-17T21:35:10.782837+0800

pg 5.1e1 not scrubbed since 2022-04-18T13:18:44.533226+0800

The nodes and VMs all seem to be functioning well. Is there any cause for concern?

How can I go about troubleshooting and clearing the error if possible.

Any pointers are appreciated.

Thanks!