I've noticed that when creating an OSD on an HDD, it starts out showing about 10% usage, and stays about 10% higher than the SSD-only OSDs. We are using an SSD for the DB (and therefore the WAL), but it seems like that shouldn't be counted...? Also, the size shown in the OSD "details" is lower than the size shown on the OSD page in PVE.

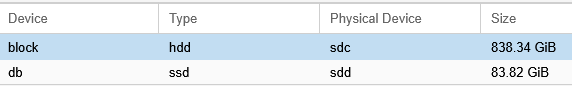

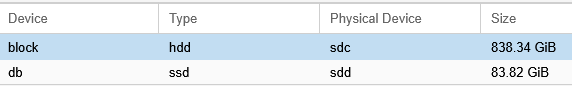

Screen cap of osd.7 details:

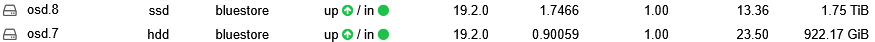

in PVE:

All the SSD-only OSDs start at 0%. In this cluster, all the SSDs are ~13% used and the few HDDs are all ~23% used.

(and yes we're aware of the bug in 19.2.0, and are recreating them all)

Thanks.
