Hi,

We've installed a 3-node Proxmox cluster running Ceph, with two VMs on it. When we reboot all PVE nodes, the IOWait in the VMs drops to almost nothing.

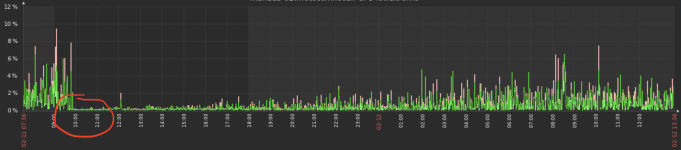

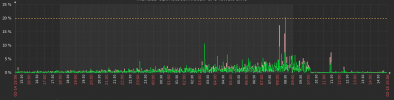

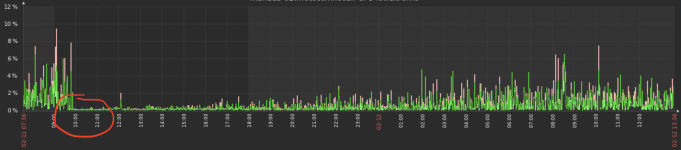

However, over time the IOWait creeps back up, even though there is no load on these VMs. As you can see, the increase is steady and happens on both VMs. The circle I've highlighted is the point where all Ceph nodes were rebooted.

Any ideas what might cause this, or how I could diagnose it?
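To put numbers on the creep instead of reading it off the graphs, I could run something like the minimal sketch below inside each VM. It assumes a Linux guest and the `/proc/stat` field order documented in proc(5) (user, nice, system, idle, iowait, irq, softirq, steal, guest, guest_nice). The function names are just illustrative and aren't from any existing tool.

```python
import time


def read_cpu_times(path="/proc/stat"):
    """Return the aggregate 'cpu' line of /proc/stat as a list of tick counters."""
    with open(path) as f:
        for line in f:
            if line.startswith("cpu "):
                return [int(x) for x in line.split()[1:]]
    raise RuntimeError("no aggregate 'cpu' line found in " + path)


def iowait_percent(before, after):
    """Share of CPU time spent in iowait between two samples, in percent."""
    deltas = [a - b for a, b in zip(after, before)]
    # guest and guest_nice (fields 9 and 10) are already counted in user/nice,
    # so only the first eight fields make up the total.
    total = sum(deltas[:8])
    if total <= 0:
        return 0.0
    return 100.0 * deltas[4] / total  # field 5 is iowait


def log_iowait(interval=60.0, samples=None):
    """Print a timestamped iowait percentage every `interval` seconds.

    Runs forever when `samples` is None, otherwise stops after that many lines.
    """
    prev = read_cpu_times()
    count = 0
    while samples is None or count < samples:
        time.sleep(interval)
        cur = read_cpu_times()
        stamp = time.strftime("%Y-%m-%d %H:%M:%S")
        print(f"{stamp} iowait={iowait_percent(prev, cur):.2f}%", flush=True)
        prev = cur
        count += 1
```

Calling `log_iowait(60)` prints one timestamped line per minute, which could then be lined up against the graphs above and against the times the nodes were rebooted.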