Hello all,

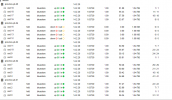

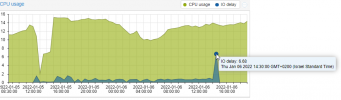

We are running a cluster of 5 nodes in our environment.

We have Ceph storage managed in the cluster, with 4 OSDs per node, so 20 OSDs in total.

Each node is bare metal running Proxmox VE 6.4-8, configured with:

| Component | Value |
| --- | --- |
| CPU(s) | 72 x Intel(R) Xeon(R) CPU E5-2699 v3 @ 2.30GHz (2 Sockets) |
| Kernel Version | Linux 5.4.124-1-pve #1 SMP PVE 5.4.124-2 |
| PVE Manager Version | pve-manager/6.4-8/185e14db |

The OSDs are physical 10k rpm HDDs of 1.8 TB each.

The OSD disks are attached to the P440ar controller in HBA mode, along with 2 journal disks in each server (HPE DL380 Gen9).

Every time an OSD gets kicked out of Ceph, we first see the following messages. For example (this one happened a few hours ago today, so it is pretty fresh):

Code:

Jan 5 14:00:58 proxmox-pt-04 kernel: [ 6487.090537] perf: interrupt took too long (2513 > 2500), lowering kernel.perf_event_max_sample_rate to 79500

Jan 5 14:19:34 proxmox-pt-04 kernel: [ 7602.440481] perf: interrupt took too long (3181 > 3141), lowering kernel.perf_event_max_sample_rate to 62750

Jan 5 14:43:55 proxmox-pt-04 kernel: [ 9064.208695] perf: interrupt took too long (4002 > 3976), lowering kernel.perf_event_max_sample_rate to 49750

Jan 5 15:10:52 proxmox-pt-04 kernel: [10680.638887] perf: interrupt took too long (5034 > 5002), lowering kernel.perf_event_max_sample_rate to 39500

Jan 5 15:44:29 proxmox-pt-04 kernel: [12697.917621] perf: interrupt took too long (6295 > 6292), lowering kernel.perf_event_max_sample_rate to 31750

Jan 5 16:47:31 proxmox-pt-04 kernel: [16479.533221] perf: interrupt took too long (7878 > 7868), lowering kernel.perf_event_max_sample_rate to 25250

Jan 6 00:00:08 proxmox-pt-04 rsyslogd: [origin software="rsyslogd" swVersion="8.1901.0" x-pid="1628" x-info="https://www.rsyslog.com"] rsyslogd was HUPed

Jan 6 03:40:22 proxmox-pt-04 kernel: [55650.159352] perf: interrupt took too long (12494 > 9847), lowering kernel.perf_event_max_sample_rate to 16000

Jan 6 14:15:59 proxmox-pt-04 kernel: [93787.006146] hpsa 0000:03:00.0: scsi 2:0:1:0: resetting physical Direct-Access HGST HUC101818CS4201 PHYS DRV SSDSmartPathCap- En- Exp=1

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.321921] hpsa 0000:03:00.0: Controller lockup detected: 0x00130000 after 30

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.321967] hpsa 0000:03:00.0: controller lockup detected: LUN:0000000000800001 CDB:01030000000000000000000000000000

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.321972] hpsa 0000:03:00.0: Controller lockup detected during reset wait

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.321979] hpsa 0000:03:00.0: scsi 2:0:1:0: reset physical failed Direct-Access HGST HUC101818CS4201 PHYS DRV SSDSmartPathCap- En- Exp=1

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322045] sd 2:0:6:0: Device offlined - not ready after error recovery

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322048] sd 2:0:6:0: Device offlined - not ready after error recovery

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322050] sd 2:0:6:0: Device offlined - not ready after error recovery

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322052] sd 2:0:6:0: Device offlined - not ready after error recovery

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322054] sd 2:0:6:0: Device offlined - not ready after error recovery

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322055] sd 2:0:6:0: Device offlined - not ready after error recovery

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322057] sd 2:0:6:0: Device offlined - not ready after error recovery

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322059] sd 2:0:6:0: Device offlined - not ready after error recovery

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322060] sd 2:0:6:0: Device offlined - not ready after error recovery

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322521] sd 2:0:6:0: [sdf] tag#768 FAILED Result: hostbyte=DID_OK driverbyte=DRIVER_TIMEOUT

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322524] sd 2:0:6:0: [sdf] tag#768 CDB: Write(10) 2a 00 44 15 99 00 00 02 00 00

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322643] sd 2:0:6:0: [sdf] tag#769 FAILED Result: hostbyte=DID_OK driverbyte=DRIVER_TIMEOUT

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322645] sd 2:0:6:0: [sdf] tag#769 CDB: Write(10) 2a 00 44 15 9b 00 00 01 80 00

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322739] sd 2:0:6:0: [sdf] tag#770 FAILED Result: hostbyte=DID_OK driverbyte=DRIVER_TIMEOUT

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322742] sd 2:0:6:0: [sdf] tag#770 CDB: Write(10) 2a 00 44 15 9c 80 00 02 00 00

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322834] sd 2:0:6:0: [sdf] tag#829 FAILED Result: hostbyte=DID_OK driverbyte=DRIVER_TIMEOUT

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322837] sd 2:0:6:0: [sdf] tag#829 CDB: Write(10) 2a 00 02 41 8c 80 00 02 00 00

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322926] sd 2:0:6:0: [sdf] tag#830 FAILED Result: hostbyte=DID_OK driverbyte=DRIVER_TIMEOUT

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.322929] sd 2:0:6:0: [sdf] tag#830 CDB: Write(10) 2a 00 44 15 97 80 00 01 80 00

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323019] sd 2:0:6:0: [sdf] tag#320 FAILED Result: hostbyte=DID_NO_CONNECT driverbyte=DRIVER_OK

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323022] sd 2:0:6:0: [sdf] tag#320 CDB: Write(10) 2a 00 44 15 94 00 00 02 00 00

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323114] sd 2:0:6:0: [sdf] tag#321 FAILED Result: hostbyte=DID_NO_CONNECT driverbyte=DRIVER_OK

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323117] sd 2:0:6:0: [sdf] tag#321 CDB: Write(10) 2a 00 44 15 96 00 00 01 80 00

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323208] sd 2:0:6:0: [sdf] tag#371 FAILED Result: hostbyte=DID_NO_CONNECT driverbyte=DRIVER_OK

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323322] sd 2:0:6:0: [sdf] tag#371 CDB: Write(10) 2a 00 44 15 7d 80 00 01 80 00

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323366] PGD 0 P4D 0

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323440] sd 2:0:6:0: [sdf] tag#372 FAILED Result: hostbyte=DID_NO_CONNECT driverbyte=DRIVER_OK

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323470] Oops: 0000 [#1] SMP PTI

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323495] sd 2:0:6:0: [sdf] tag#372 CDB: Write(10) 2a 00 44 15 7f 00 00 02 00 00

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323573] CPU: 60 PID: 547705 Comm: kworker/u144:3 Tainted: P O 5.4.124-1-pve #1

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323846] Hardware name: HP ProLiant DL380 Gen9/ProLiant DL380 Gen9, BIOS P89 04/29/2021

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323913] Workqueue: monitor_0_hpsa hpsa_monitor_ctlr_worker [hpsa]

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.323966] RIP: 0010:intel_unmap_sg+0x21/0x110

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.324001] Code: 41 5d 41 5e 41 5f 5d c3 90 0f 1f 44 00 00 55 48 89 e5 41 57 4d 89 c7 41 56 41 89 ce 41 55 49 89 fd 41 54 53 89 d3 48 83 ec 08 <4c> 8b 66 10 48 89 75 d0 e8 02 fc ff ff 48 8b 75 d0 49 81 e4 00 f0

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.324119] RSP: 0018:ffffa9fd4862bd80 EFLAGS: 00010282

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.324157] RAX: ffffffff934b8af0 RBX: 0000000000000040 RCX: 0000000000000001

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.324206] RDX: 0000000000000040 RSI: 0000000000000000 RDI: ffff8af3fa11b0b0

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.324254] RBP: ffffa9fd4862bdb0 R08: 0000000000000000 R09: 00000000000cad3f

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.324303] R10: 0000000000000001 R11: 0000000000000000 R12: ffff8ae3e52bc120

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.324351] R13: ffff8af3fa11b0b0 R14: 0000000000000001 R15: 0000000000000000

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.324399] FS: 0000000000000000(0000) GS:ffff8af3ff800000(0000) knlGS:0000000000000000

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.324454] CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.324494] CR2: 0000000000000010 CR3: 0000001e9440a002 CR4: 00000000001626e0

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.326277] Call Trace:

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.331227] scsi_dma_unmap+0x54/0x80

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.331236] complete_scsi_command+0x79/0x8c0 [hpsa]

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.331241] detect_controller_lockup+0x1c0/0x210 [hpsa]

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.331245] hpsa_monitor_ctlr_worker+0x1d/0x90 [hpsa]

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.331254] process_one_work+0x20f/0x3d0

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.331259] worker_thread+0x34/0x400

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.331264] kthread+0x120/0x140

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.331268] ? process_one_work+0x3d0/0x3d0

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.331277] ? kthread_park+0x90/0x90

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.338861] ret_from_fork+0x35/0x40

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.338864] Modules linked in: joydev input_leds hid_generic usbmouse usbkbd usbhid hid vfio_pci vfio_virqfd vfio_iommu_type1 vfio nfsv3 rpcsec_gss_krb5 nfsv4 nfs fscache ixgbevf ebtable_filter ebtables ip_set ip6table_raw iptable_raw ip6table_filter ip6_tables sctp iptable_filter bpfilter sch_ingress 8021q garp mrp bonding openvswitch nsh nf_conncount nf_nat nf_conntrack nf_defrag_ipv6 nf_defrag_ipv4 softdog nfnetlink_log nfnetlink xfs ipmi_ssif intel_rapl_msr intel_rapl_common sb_edac x86_pkg_temp_thermal intel_powerclamp coretemp kvm_intel kvm irqbypass crct10dif_pclmul crc32_pclmul ghash_clmulni_intel aesni_intel crypto_simd cryptd glue_helper rapl intel_cstate mgag200 drm_vram_helper ttm pcspkr drm_kms_helper drm i2c_algo_bit fb_sys_fops syscopyarea sysfillrect sysimgblt hpilo ioatdma ipmi_si ipmi_devintf ipmi_msghandler acpi_power_meter mac_hid zfs(PO) zunicode(PO) zzstd(O) zlua(O) zavl(PO) icp(PO) zcommon(PO) znvpair(PO) spl(O) vhost_net vhost tap ib_iser rdma_cm iw_cm nfsd ib_cm

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.338942] auth_rpcgss nfs_acl ib_core lockd grace sunrpc iscsi_tcp libiscsi_tcp libiscsi scsi_transport_iscsi ip_tables x_tables autofs4 btrfs xor zstd_compress raid6_pq dm_thin_pool dm_persistent_data dm_bio_prison dm_bufio libcrc32c ses enclosure ahci xhci_pci uhci_hcd lpc_ich i2c_i801 libahci ehci_pci tg3 xhci_hcd ehci_hcd ixgbe xfrm_algo hpsa dca mdio scsi_transport_sas wmi

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.382876] CR2: 0000000000000010

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.384689] ---[ end trace e310ccd95d1d6f27 ]---

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.399042] RIP: 0010:intel_unmap_sg+0x21/0x110

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.400876] Code: 41 5d 41 5e 41 5f 5d c3 90 0f 1f 44 00 00 55 48 89 e5 41 57 4d 89 c7 41 56 41 89 ce 41 55 49 89 fd 41 54 53 89 d3 48 83 ec 08 <4c> 8b 66 10 48 89 75 d0 e8 02 fc ff ff 48 8b 75 d0 49 81 e4 00 f0

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.404539] RSP: 0018:ffffa9fd4862bd80 EFLAGS: 00010282

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.406339] RAX: ffffffff934b8af0 RBX: 0000000000000040 RCX: 0000000000000001

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.408129] RDX: 0000000000000040 RSI: 0000000000000000 RDI: ffff8af3fa11b0b0

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.409922] RBP: ffffa9fd4862bdb0 R08: 0000000000000000 R09: 00000000000cad3f

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.412159] R10: 0000000000000001 R11: 0000000000000000 R12: ffff8ae3e52bc120

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.413872] R13: ffff8af3fa11b0b0 R14: 0000000000000001 R15: 0000000000000000

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.415560] FS: 0000000000000000(0000) GS:ffff8af3ff800000(0000) knlGS:0000000000000000

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.417260] CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

Jan 6 14:16:35 proxmox-pt-04 kernel: [93823.418966] CR2: 0000000000000010 CR3: 0000001e9440a002 CR4: 00000000001626e0

Jan 6 14:16:47 proxmox-pt-04 kernel: [93835.177936] print_req_error: 249 callbacks suppressed

We have been facing these errors for the past month. In earlier occurrences, with the same log output, iLO reported the server's controller as failed.

1. We replaced the controller in all servers, since we believed that would solve the problem (yes, it happens suddenly, out of the blue, each time on a different server, roughly every 1-3 days). But I guess the controller has nothing to do with it.

2. We made other changes in the servers' BIOS, as we believed the issue was related to power management and power consumption.

3. We upgraded the BIOS ROM to the latest version, but nothing helped.

4. The last action we took, yesterday: I upgraded the kernel from 5.4.119-1-pve to 5.4.124-1-pve.

We are now getting the same errors and the same logs. The one notable difference is that iLO no longer reports the controller as failed (crashed), yet OSDs still drop out of the Ceph storage (so yes, we moved one step forward but are still getting nowhere).

This suggests that the controller hardware is fine and the issue is much deeper than I thought.

Has anyone faced this and knows how to help?

Or at least, can someone guide me towards finding a solution?

Thank you very much in advance. I am looking forward to any suggestions and ways of debugging this.
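To compare incidents across the five nodes, here is a minimal Python sketch (a triage aid, not a fix) that counts the events seen in the log excerpt: hpsa controller lockups, offlined SCSI devices, failed requests per device, and kernel oopses. It also decodes the Write(10) CDBs into starting LBA and block count. The function names `decode_write10` and `summarize` are made up for illustration, and the regular expressions only assume the message shapes shown above.

```python
import re
from collections import Counter

# Patterns are based only on the message shapes in the log excerpt above.
LOCKUP = re.compile(r"hpsa (\S+): [Cc]ontroller lockup detected")
OFFLINED = re.compile(r"sd (\d+:\d+:\d+:\d+): Device offlined")
FAILED = re.compile(
    r"\[(\w+)\] tag#\d+ FAILED Result: hostbyte=(\w+) driverbyte=(\w+)"
)
WRITE10 = re.compile(r"CDB: Write\(10\) ([0-9a-f]{2}(?: [0-9a-f]{2}){9})")
OOPS = re.compile(r"Oops: ")


def decode_write10(cdb_hex):
    """Decode a 10-byte WRITE(10) CDB into (start LBA, block count).

    Bytes 2-5 hold the big-endian LBA, bytes 7-8 the transfer length.
    """
    cdb = bytes.fromhex(cdb_hex.replace(" ", ""))
    lba = int.from_bytes(cdb[2:6], "big")
    blocks = int.from_bytes(cdb[7:9], "big")
    return lba, blocks


def summarize(lines):
    """Count lockups, offlined devices, failed requests and oopses."""
    summary = {
        "lockups": Counter(),   # keyed by PCI address of the controller
        "offlined": Counter(),  # keyed by SCSI address (host:chan:id:lun)
        "failed": Counter(),    # keyed by (device, hostbyte, driverbyte)
        "oops": 0,
        "writes": [],           # decoded (LBA, blocks) of failed writes
    }
    for line in lines:
        if m := LOCKUP.search(line):
            summary["lockups"][m.group(1)] += 1
        if m := OFFLINED.search(line):
            summary["offlined"][m.group(1)] += 1
        if m := FAILED.search(line):
            summary["failed"][m.groups()] += 1
        if m := WRITE10.search(line):
            summary["writes"].append(decode_write10(m.group(1)))
        if OOPS.search(line):
            summary["oops"] += 1
    return summary


if __name__ == "__main__":
    # Two lines copied from the excerpt above, used as a tiny demo input.
    demo = [
        "kernel: [93823.321921] hpsa 0000:03:00.0: Controller lockup detected: 0x00130000 after 30",
        "kernel: [93823.322524] sd 2:0:6:0: [sdf] tag#768 CDB: Write(10) 2a 00 44 15 99 00 00 02 00 00",
    ]
    print(summarize(demo))
```

To run it against a real log, pass the lines of the file to `summarize`. Comparing the summaries per node may show whether the lockups cluster around one physical drive, one controller port, or particular times of day.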