Hello Proxmox/Ceph admins,

I'm not a newbie with Proxmox anymore, but I still like to learn, and now I need a little help with Ceph pools.

I've got three 3-node Ceph clusters here, all separate and at different sites. All nodes are on Ceph 12.2.13 and PVE 6.4-13.

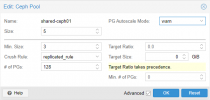

Each cluster has one pool with a 3/2 replica config (size 3, min_size 2), 128 PGs, 5 TB of data, and 12 OSDs.

But I would like to have a 5/3 replica size.

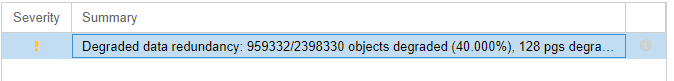

If I change to 5/3, Ceph tells me that 40% of the objects are degraded and that all 128 PGs are degraded and undersized.

```
~# ceph health
HEALTH_WARN Degraded data redundancy: 959332/2398330 objects degraded (40.000%), 128 pgs degraded, 128 pgs undersized
~#
```
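To make sense of that 40%, I tried a small Python sketch of the arithmetic. It rests on an assumption the output itself does not confirm: that the pool's CRUSH rule places at most one replica per host, so a 3-node cluster can only hold 3 of the 5 requested copies. The helper `degraded_fraction` is just a name I made up for illustration.

```python
from fractions import Fraction


def degraded_fraction(requested_size: int, hosts: int) -> Fraction:
    """Share of replicas that cannot be placed, ASSUMING one replica per host."""
    placeable = min(requested_size, hosts)
    return Fraction(requested_size - placeable, requested_size)


# Figures copied from the HEALTH_WARN line above.
degraded, total = 959332, 2398330

observed = Fraction(degraded, total)
expected = degraded_fraction(requested_size=5, hosts=3)

print(observed, expected, observed == expected)  # prints: 2/5 2/5 True
```

If that assumption holds, 2 missing copies out of 5 would match the reported 40.000% exactly, but I have not verified that this is really the cause.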

It does not matter what I configure; only the 3/2 size leads to a "green" health status.

This is how I start implementing the change:

Could somebody please give me a hint to help me understand what I'm doing wrong? (I've done a lot of reading already but have not found a way forward.)

Thank you for helping me out.

Stephan
