I have set up a three-node Proxmox cluster with Ceph and a pool (size 3, min_size 2) for testing. The OSDs are two NVMe disks per server, each with a capacity of 3.5 TiB. Ceph has one 10G network connection each for the public and the private network. The public network is also the LAN bridge for my VMs.

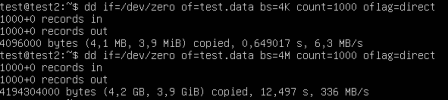

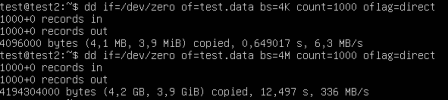


```
# hdparm -tT --direct /dev/nvme1n1

/dev/nvme1n1:
 Timing O_DIRECT cached reads: 5000 MB in 2.00 seconds = 2500.62 MB/sec
 Timing O_DIRECT disk reads: 7936 MB in 3.00 seconds = 2645.14 MB/sec
```

The OSDs deliver, too:

```
# ceph tell osd.0 bench
{
    "bytes_written": 1073741824,
    "blocksize": 4194304,
    "elapsed_sec": 0.30341430800000002,
    "bytes_per_sec": 3538863513.3185611,
    "iops": 843.73081047977473
}
```

I created pools with various PG settings and, performance-wise, ended up sticking with size 3, min_size 2, autoscale off, PGs 128/128 with the default replicated_rule.
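As a rough cross-check of the PG choice, the rule of thumb from the older Ceph placement-group guidance aims for about 100 PGs per OSD: total PGs ≈ (OSDs × 100) / replica size, rounded up to a power of two. The sketch below applies that arithmetic to this cluster (3 nodes × 2 NVMe = 6 OSDs, size 3) and also shows how many PG replicas each OSD carries with the chosen 128 PGs. The helper names are hypothetical, and 100 per OSD is a guideline, not a hard requirement.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for PG-count arithmetic (a rule of thumb, not a Ceph API).

# PG replicas hosted per OSD: pg_num * size / number of OSDs.
pgs_per_osd() {  # $1 = pg_num, $2 = pool size (replicas), $3 = OSD count
  LC_ALL=C awk -v pg="$1" -v size="$2" -v osds="$3" \
    'BEGIN { printf "%.0f\n", pg * size / osds }'
}

# Suggested pg_num: OSDs * target / size, rounded up to the next power of two.
suggested_pg_num() {  # $1 = OSD count, $2 = pool size, $3 = target PGs per OSD
  LC_ALL=C awk -v osds="$1" -v size="$2" -v target="$3" 'BEGIN {
    raw = osds * target / size
    p = 1
    while (p < raw) p *= 2   # smallest power of two >= raw
    print p
  }'
}

# 3 nodes x 2 NVMe OSDs = 6 OSDs, pool size 3
echo "PG replicas per OSD with pg_num=128: $(pgs_per_osd 128 3 6)"
echo "Suggested pg_num at ~100 PGs per OSD: $(suggested_pg_num 6 3 100)"
```

By this guideline, 128 PGs is on the low side for six OSDs; whether 256 measurably changes the 4K results is something to benchmark rather than assume.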

The rados benchmarks I ran gave me the following throughput:

(10G network saturated in all non-4K-blocksize tests, as expected)

```
rados bench -p bench 120 write --no-cleanup -> 1092 MiB/s
rados bench -p bench 60 seq -> 1529 MiB/s
rados bench -p bench 60 rand -> 1524 MiB/s
```

I then cleaned the pool before testing 4KB blocks.

```
rados -p bench cleanup
```

And measured with 4K blocks (the network never saturates here):

```
rados bench -p bench 120 write --no-cleanup -b 4K -> 65 MiB/s
rados bench -p bench 60 seq -> 258 MiB/s
rados bench -p bench 60 rand -> 271 MiB/s
```

So far, so good. Next I created both an Ubuntu 20.04 LXC container and an Ubuntu 20.04 VM; neither has a network configured. The root disks were created on the Ceph RBD pool with default settings. Inside each of them I ran simple dd commands:

LXC

```
# dd if=/dev/zero of=test.data bs=4M count=1000 oflag=direct
1000+0 records in
1000+0 records out
4194304000 bytes (4.2 GB, 3.9 GiB) copied, 15.7207 s, 267 MB/s

# dd if=/dev/zero of=test.data bs=4K count=100000 oflag=direct
100000+0 records in
100000+0 records out
409600000 bytes (410 MB, 391 MiB) copied, 51.1674 s, 8.0 MB/s
```

VM

For one, I'm not sure why the VM gets better 4M write performance than the LXC container. More importantly, these values are a long way off the rados bench values my Ceph setup produced before, and the 4K block-size performance in particular is terrible. None of the dd runs came close to saturating either network connection, so I'm guessing Ceph itself is not the bottleneck here.
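One way to quantify the 4K gap is to convert both sets of figures into IOPS and average per-write latency. The sketch below is plain arithmetic on the numbers already posted. It assumes rados bench ran with its default of 16 concurrent operations (`-t 16`, since no `-t` was given) and that `dd ... oflag=direct` keeps exactly one write in flight, and it estimates latency with Little's law (average latency ≈ ops in flight / IOPS). The function names are made up for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: pure arithmetic on the benchmark numbers posted above.

# IOPS from a throughput in MiB/s at a given block size in KiB.
iops_from_mib() {  # $1 = MiB/s, $2 = block size (KiB)
  LC_ALL=C awk -v mib="$1" -v kib="$2" 'BEGIN { printf "%.0f\n", mib * 1024 / kib }'
}

# IOPS from a dd run: records written / elapsed seconds.
iops_from_dd() {  # $1 = records, $2 = elapsed seconds
  LC_ALL=C awk -v n="$1" -v t="$2" 'BEGIN { printf "%.0f\n", n / t }'
}

# Average latency per op in ms via Little's law: ops in flight / IOPS.
latency_ms() {  # $1 = IOPS, $2 = ops in flight (queue depth)
  LC_ALL=C awk -v iops="$1" -v qd="$2" 'BEGIN { printf "%.2f\n", qd / iops * 1000 }'
}

rados_iops=$(iops_from_mib 65 4)        # 4K rados bench write, assumed 16 in flight
dd_iops=$(iops_from_dd 100000 51.1674)  # 4K dd in the LXC container, 1 in flight

echo "rados bench 4K write: ${rados_iops} IOPS, ~$(latency_ms "$rados_iops" 16) ms/write"
echo "dd 4K in LXC:         ${dd_iops} IOPS, ~$(latency_ms "$dd_iops" 1) ms/write"
```

If those assumptions hold, each 4K write issued by dd (roughly 0.5 ms) is not slower than a rados bench write (roughly 1 ms); the throughput gap would then come mostly from the number of writes in flight (16 vs. 1) rather than from the RBD path itself.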

What can I do to improve small-block (4K) performance in particular inside my virtual machines/containers? Why am I getting only about 1/10th of the rados bench performance from inside them at a 4K block size?
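A like-for-like comparison would run both benchmarks with the same number of writes in flight. The sketch below only prints the commands by default (`DRY_RUN=1`). The `rados bench` flags (`-b`, `-t`, `--no-cleanup`) and the `fio` options are standard ones, but the pool name `bench` and file name `test.data` are carried over from the tests above, while the 1G file size and 60-second runtime are assumptions; fio would also need to be installed in the guest.

```shell
#!/usr/bin/env bash
# Print (default) or run 4K write tests at a matched number of in-flight ops.
# Set DRY_RUN=0 to actually execute; each function is meant for a different host.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# On a Ceph node: 4K object writes with $1 concurrent ops (-t).
node_test() {  # $1 = ops in flight
  run rados bench -p bench 60 write -b 4K -t "$1" --no-cleanup
}

# Inside the VM or LXC container: 4K O_DIRECT sequential writes at iodepth $1.
guest_test() {  # $1 = ops in flight
  run fio --name="qd$1" --filename=test.data --size=1G --bs=4k --rw=write \
    --direct=1 --ioengine=libaio --iodepth="$1" --runtime=60 --time_based
}

for qd in 1 16; do  # 1 mirrors dd oflag=direct, 16 mirrors rados bench's default
  node_test "$qd"
  guest_test "$qd"
done
```

If the rados bench runs are actually executed, `rados -p bench cleanup` removes the benchmark objects afterwards, as in the original test. Should the guest come close to the rados bench IOPS at iodepth 16 but not at iodepth 1, that would suggest the 4K result is latency-bound rather than limited by throughput.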
