I updated Proxmox to the latest version today, and after the system rebooted none of my VMs would start. They all reported:

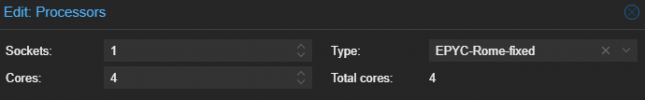

I fixed it by changing the CPU type to "host", but I would like to know why EPYC-ROME doesn't work anymore. I didn't change the CPU.

Any info would be appreciated

I found a post that said it's because a CPU flag is not supported. I have an EPYC-ROME CPU, so I was using that CPU type and it worked fine. What happened?

kvm: warning: host doesn't support requested feature: CPUID.0DH:EAX.xsaves [bit 3]

kvm: Host doesn't support requested features

TASK ERROR: start failed: QEMU exited with code 1
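
For anyone hitting the same error, here is a rough way to check whether the host kernel actually reports the `xsaves` flag that the warning mentions. This is only a sketch: it assumes a Linux host where `/proc/cpuinfo` has a `flags` line, and the helper names `parse_cpu_flags` and `host_has_flag` are made up for illustration, not part of Proxmox or QEMU.

```python
from pathlib import Path


def parse_cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the flags from the first 'flags' line of /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    # No 'flags' line found (assumption: treat that as "no flags known").
    return set()


def host_has_flag(flag: str, cpuinfo_path: str = "/proc/cpuinfo") -> bool:
    """Hypothetical helper: check whether the host reports a given CPU flag."""
    return flag in parse_cpu_flags(Path(cpuinfo_path).read_text())


if __name__ == "__main__":
    # 'xsaves' is the feature named in the kvm warning above.
    print("xsaves reported by host:", host_has_flag("xsaves"))
```

If this prints `False`, the host is not exposing `xsaves`, which would be consistent with the warning, although it does not by itself explain why the flag stopped being available after the update.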
