I have a weird issue with live migration: PVE can only do it if I'm logged into the source node itself when starting the migration. Connecting to any other node in this 5-node cluster makes the migration fail. Using 'qm migrate' also fails; it only works via the GUI. This is so weird I don't even know where to start looking.

First, the issue seems to center on PVE thinking the VM disks are "attached" storage. They clearly aren't; they're regular ZFS volumes like everything else.

when connected to the gui via any other node, here is what the migration window says:

vm is of course powered on. this remains, and the 'migrate' button remains greyed out, even after i select an appropriate target storage. the target storge for target nodes do populate correctly.

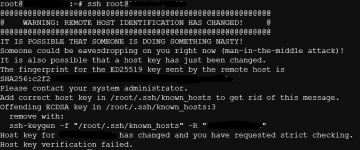

Here is the error in detail when I try to start the migration via shell using qm migrate:

When I connect to the GUI via the source node, I can migrate the VM from the GUI, but not with qm migrate. Any ideas?
