[SOLVED] Can't create new VM with VirtIO Block

- Thread starter sirkope

Before I destroy my disk:

root@pve:~# wipefs /dev/sdb

DEVICE OFFSET TYPE UUID LABEL

sdb 0x1fe dos

root@pve:~# wipefs /dev/sdb1

DEVICE OFFSET TYPE UUID LABEL

sdb1 0x218 LVM2_member dgHM6z-RquE-9xxx-ZEKn-ZxRZ-T04J-QpnT7a

Would this help?

wipefs --all --force --backup /dev/sdb

Checking / Repairing etc...

lsblk

shows your LVM volumes as a tree.

lvs --segments -o +devices

shows the volumes and types, with the disks that are used.

pvdisplay -m /dev/sdb1 (optionally you can pass a drive too)

shows, in a bit more detail, the disks with the volumes on them.

pvs -o pv_name,vg_name

Physical Volume = PV

Volume Group = VG

vgck -d VG

no output = all perfect

you only get output on errors.

-d = debug (only on errors)

lvscan

Scans for LVM volume groups/volumes.

lvchange -ay /dev/VG/...

sets a volume "active" if it's inactive. (You can't access an inactive volume, even for fsck.)

fsck /dev/VG/...

Only checks for errors.

fsck -fy /dev/VG/...

Force check + force correct issues.

------

Deleting:

vgchange -an VG

Sets a VG offline ("inactive").

vgremove VG

Deletes the volume group.

pvremove PV

Deletes the physical volume.

gdisk /dev/DISK...

-> o = new, empty GPT partition table (n only adds a new partition)

-> w = write

Hope that helps.

Cheers
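A minimal sketch of the read-only checks from the list above, assuming the volume group is called bigdata and the physical volume is /dev/sdb1, as elsewhere in this thread. The run and inspect_lvm helpers and the DRY_RUN variable are made up for illustration. By default the script only prints the commands, so nothing touches the disk until DRY_RUN=0 is set on the Proxmox host.

```shell
# DRY_RUN=1 (default) only prints each command; DRY_RUN=0 executes it.
DRY_RUN=${DRY_RUN:-1}

# Hypothetical wrapper: print the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: the read-only checks for one VG and one PV.
inspect_lvm() {
    vg=$1   # volume group name, e.g. bigdata
    pv=$2   # physical volume, e.g. /dev/sdb1
    run lsblk
    run lvs --segments -o +devices "$vg"
    run pvdisplay -m "$pv"
    run pvs -o pv_name,vg_name
    run vgck "$vg"
}

inspect_lvm bigdata /dev/sdb1
```

None of these commands change anything on disk; the deleting steps (vgremove, pvremove, gdisk) are left out on purpose.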

Before I delete it:

root@pve:~# vgchange -an bigdata

0 logical volume(s) in volume group "bigdata" now active

root@pve:~# fsck.ext2 /dev/bigdata

e2fsck 1.46.2 (28-Feb-2021)

fsck.ext2: Is a directory while trying to open /dev/bigdata

The superblock could not be read or does not describe a valid ext2/ext3/ext4

filesystem. If the device is valid and it really contains an ext2/ext3/ext4

filesystem (and not swap or ufs or something else), then the superblock

is corrupt, and you might try running e2fsck with an alternate superblock:

e2fsck -b 8193 <device>

or

e2fsck -b 32768 <device>

root@pve:~# e2fsck -b 8193 /dev/sdb1

e2fsck 1.46.2 (28-Feb-2021)

e2fsck: Bad magic number in super-block while trying to open /dev/sdb1

The superblock could not be read or does not describe a valid ext2/ext3/ext4

filesystem. If the device is valid and it really contains an ext2/ext3/ext4

filesystem (and not swap or ufs or something else), then the superblock

is corrupt, and you might try running e2fsck with an alternate superblock:

e2fsck -b 8193 <device>

or

e2fsck -b 32768 <device>

/dev/sdb1 contains a LVM2_member file system

What next?

I've done it under the Proxmox shell, not from a live CD. Is that a problem?

Meh, read the previous post again and find your error xD

And in addition to that, dunno why you want an ext2 check on an inactive VG xD

I made the VG inactive because otherwise, when I run e2fsck -b 32768 /dev/sdb1 or fsck -fy /dev/sdb1, it says /dev/sdb1 is in use.

Should I try it with a live CD?

I also don't know which filesystem LVM uses. fdisk says ID 83, Type Linux, but it could be ext2/ext3/ext4. Is e2fsck good for all of them?

What are you trying to do?

You can't run fsck.ext2 on a raw LVM disk. It doesn't contain an ext2/3/4 partition. It won't work.

fsck /dev/VG/...

Maybe that is confusing: the three dots mean a volume that contains something.

In your case, something like

fsck /dev/bigdata/vm-105-disk-0

But I don't know if that will even work, because vm-105-disk-0 is raw and isn't ext etc... It's probably NTFS underneath, but I don't believe fsck.ntfs will work on that either.

I wrote that in case someone checks filesystems, because you can have, or in most cases you do have, a filesystem in an LVM volume. But not in our VM image case with Proxmox.

So in short, fsck can't work.

But you could mount that raw disk volume, if it helps you, and probably access the data on it. Google for "kpartx lvm raw".
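For anyone searching for the kpartx route, here is a rough sketch, not something tested in this thread. Device-mapper names for LVs double every hyphen inside the VG and LV names, which is why the mapper names look odd. The dm_name helper is made up for illustration, and the exact partition names depend on what kpartx reports on your host, so they are left as a placeholder.

```shell
# Hypothetical helper: device-mapper name for an LV. Hyphens inside the VG and
# LV names are doubled, then the two parts are joined with a single hyphen.
dm_name() {
    vg=$(printf '%s' "$1" | sed 's/-/--/g')
    lv=$(printf '%s' "$2" | sed 's/-/--/g')
    printf '%s-%s\n' "$vg" "$lv"
}

dm_name bigdata vm-105-disk-0   # prints bigdata-vm--105--disk--0

# On the Proxmox host, with the VM shut down (not executed here):
#   kpartx -av /dev/bigdata/vm-105-disk-0   # map the partitions, prints the names it created
#   mount -o ro /dev/mapper/<name printed by kpartx> /mnt/vm105
#   umount /mnt/vm105
#   kpartx -dv /dev/bigdata/vm-105-disk-0   # remove the mappings again
```

Mounting read-only (-o ro) is a deliberately cautious choice here, since the goal is only to copy data off the guest disk.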

It all comes from this:

WARNING: gpt signature detected on /dev/bigdata/vm-105-disk-0 at offset 512. Wipe it? [y/n]: [n]

Aborted wiping of gpt.

1 existing signature left on the device.

Failed to wipe signatures on logical volume bigdata/vm-105-disk-0.

TASK ERROR: unable to create VM 105 - lvcreate 'bigdata/vm-105-disk-0' error: Aborting. Failed to wipe start of new LV.

The number after vm could be 123, 106 or 200, all non-existent VMs, but the message is the same and I can't create a new VM.

The message '1 existing signature left on the device' confused me: I took it to mean that some garbage left on my LVM is blocking me from creating a new VM image.

I thought that if I could 'repair' my LVM, I could create VM images again.

It's a 1TB disk with more than 600GB of VM images that I can't back up right now and don't want to lose either.

Even if I could back them up, I'm not sure that wiping the entire disk, creating the LVM again and copying the VMs back would work.

I could create a new VM with SeaBIOS, i440fx and a 32GB IDE HDD, then change the settings to OVMF and q35, and add a UEFI disk to it. But when I try to create a new hard disk with VirtIO (or SATA), this message appears:

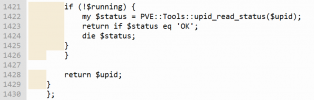

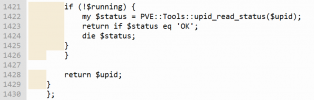

lvcreate 'bigdata/vm-105-disk-2' error: Aborting. Failed to wipe start of new LV. at /usr/share/perl5/PVE/API2/Qemu.pm line 1424. (500)

What does it mean?

(update)

root@pve:~# pvesm alloc BigData 105 '' 256G

WARNING: gpt signature detected on /dev/bigdata/vm-105-disk-2 at offset 512. Wipe it? [y/n]: [n]

Aborted wiping of gpt.

1 existing signature left on the device.

Failed to wipe signatures on logical volume bigdata/vm-105-disk-2.

lvcreate 'bigdata/vm-105-disk-2' error: Aborting. Failed to wipe start of new LV.

root@pve:~# qm set 105 --virtio0 BigData:vm-105-disk-2,cache=writeback

update VM 105: -virtio0 BigData:vm-105-disk-2,cache=writeback

can't activate LV '/dev/bigdata/vm-105-disk-2': Failed to find logical volume "bigdata/vm-105-disk-2"

apt-get install kpartx

lvscan

mkdir -p /mnt/vm105

mount /dev/bigdata/vm-105-disk-0 /mnt/vm105

Try this first, maybe it works.

Then you can back up your data.

If it doesn't work, there is another way with a kpartx loopback device or something like that. I have never used kpartx myself.

Well, I've found a solution (sort of...)

As I wrote before, I could create a new VM, but only with an IDE interface. Then I found a solution in the forum for changing the interface from IDE to VirtIO by detaching and editing the created 32G IDE disk. Then I found a way to increase the size from the terminal:

Code:

qm resize 105 virtio0 +224G

So now I've reached my goal of creating a new VM with a 256G VirtIO disk.

My bigdata volume is almost full, so the question is what will happen if I get bored of one of the existing VMs and want to create a new one.

But at least I have a solution, even if it is not so elegant. Also, I don't have to back up my VMs, erase and recreate the LVM volume, and copy the VMs back.
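The +224G in that command is just the target size minus the size of the disk that already exists (256G - 32G). A small sketch of that arithmetic, with a made-up helper name (resize_delta), in case someone wants a different target size:

```shell
# Hypothetical helper: size increment for `qm resize <vmid> <disk> +<N>G`.
resize_delta() {
    current=$1  # current disk size in G
    target=$2   # desired disk size in G
    if [ "$target" -le "$current" ]; then
        echo "target must be larger than the current size" >&2
        return 1
    fi
    echo "+$((target - current))G"
}

resize_delta 32 256   # prints +224G
```

The helper refuses targets that are not larger than the current size, since the workaround in this thread only ever grows the disk.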

Have you ever figured this out?

I have a similar problem.

I have multiple VMs set up on a second drive (sdb).

If I set up a Windows VM and then remove it, and then try to create any new VM, I get an error:

............

WARNING: dos signature detected on /dev/local-2/vm-101-disk-0 at offset 510. Wipe it? [y/n]: [n]

Aborted wiping of dos.

1 existing signature left on the device.

Failed to wipe signatures on logical volume local-2/vm-101-disk-0.

TASK ERROR: unable to create VM 101 - lvcreate 'local-2/vm-101-disk-0' error: Aborting. Failed to wipe start of new LV.

...............

This is the 2nd time I ran into this problem.

The only way I was able to fix it the first time was to remove all the VMs, remove the volume group and the physical volume, format, and start over from scratch.

It looks like the Proxmox scripts abort when a warning is presented.

The warning is that a partition signature was detected.

I don't know what a good solution would be. My thought is to do the wipe manually from the command line and just say yes to the warning prompt. But sitting at my desk without having tried this, I don't know whether it would break Proxmox's interaction with LVM.
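A minimal sketch of that idea, assuming you create the LV by hand instead of through the GUI. lvcreate has a --wipesignatures y option and a --yes option that answer the prompt up front; the helper name (lvcreate_wipe_cmd) is made up, and the VG, LV name and size are the ones from the error earlier in this thread. Whether Proxmox then accepts the manually created LV without complaints has not been confirmed here, so the helper only prints the command for review.

```shell
# Hypothetical helper: prints (does not run) an lvcreate call that pre-answers
# the signature prompt for the newly created LV.
lvcreate_wipe_cmd() {
    vg=$1    # volume group, e.g. bigdata
    lv=$2    # new logical volume name, e.g. vm-105-disk-2
    size=$3  # size with unit suffix, e.g. 256G
    printf 'lvcreate --yes --wipesignatures y --name %s --size %s %s\n' "$lv" "$size" "$vg"
}

# Review the printed command, then run it by hand on the Proxmox host if it looks right.
lvcreate_wipe_cmd bigdata vm-105-disk-2 256G
```

If the LV gets created this way, the qm set 105 --virtio0 BigData:vm-105-disk-2,cache=writeback call from earlier in the thread would be the next thing to try for attaching it.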
