Hi support / community members,

I recently migrated from PVE v7 to v8. Everything seemed fine until a couple of days ago. Now only some of my 19 nodes are willing to communicate with the cluster owner and rejoin the cluster.

...

output for `pvecm status`:

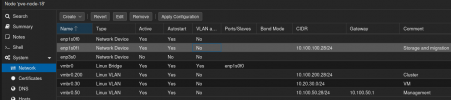

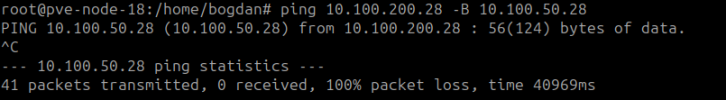

I know about the different cluster links used by the members. I'm planning to switch them all to 10.100.200.1/24 so that the cluster network traffic is completely separated.

pve-cluster service status on cluster owner:

corosync service status on cluster owner:

I would really need some guidance on how to troubleshoot and identify my communication issues.

Thanks in advance,

Bogdan

Since not long ago I've migrated from PVE v7 to v8. Everything seemed fine since a couple of days ago. Now only a part of my 19 total nodes are willing to communicate with the cluster owner and rejoin the cluster.

...

Code:

proxmox-ve: 8.0.1 (running kernel: 6.2.16-3-pve)

pve-manager: 8.0.3 (running version: 8.0.3/bbf3993334bfa916)

pve-kernel-6.2: 8.0.2

pve-kernel-6.2.16-3-pve: 6.2.16-3

ceph-fuse: 17.2.6-pve1+3

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx2

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-3

libknet1: 1.25-pve1

libproxmox-acme-perl: 1.4.6

libproxmox-backup-qemu0: 1.4.0

libproxmox-rs-perl: 0.3.0

libpve-access-control: 8.0.3

libpve-apiclient-perl: 3.3.1

libpve-common-perl: 8.0.5

libpve-guest-common-perl: 5.0.3

libpve-http-server-perl: 5.0.3

libpve-rs-perl: 0.8.3

libpve-storage-perl: 8.0.1

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 5.0.2-4

lxcfs: 5.0.3-pve3

novnc-pve: 1.4.0-2

proxmox-backup-client: 2.99.0-1

proxmox-backup-file-restore: 2.99.0-1

proxmox-kernel-helper: 8.0.2

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.4.0

proxmox-widget-toolkit: 4.0.5

pve-cluster: 8.0.1

pve-container: 5.0.3

pve-docs: 8.0.3

pve-edk2-firmware: 3.20230228-4

pve-firewall: 5.0.2

pve-firmware: 3.7-1

pve-ha-manager: 4.0.2

pve-i18n: 3.0.4

pve-qemu-kvm: 8.0.2-3

pve-xtermjs: 4.16.0-3

qemu-server: 8.0.6

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.1.12-pve1

Output of `pvecm status`:

Code:

Cluster information

-------------------

Name: pve-cluster-is

Config Version: 33

Transport: knet

Secure auth: on

Quorum information

------------------

Date: Wed Aug 23 15:59:27 2023

Quorum provider: corosync_votequorum

Nodes: 10

Node ID: 0x00000001

Ring ID: 1.701b

Quorate: Yes

Votequorum information

----------------------

Expected votes: 19

Highest expected: 19

Total votes: 10

Quorum: 10

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

0x00000001 1 10.100.200.23 (local)

0x00000003 1 10.100.50.21

0x00000004 1 10.100.50.24

0x00000005 1 10.100.50.20

0x00000006 1 10.100.50.22

0x00000008 1 10.100.200.11

0x00000009 1 10.100.50.12

0x0000000d 1 10.100.50.17

0x0000000e 1 10.100.50.18

0x00000013 1 10.100.50.29

I'm aware that the members currently use different cluster links. I'm planning to switch them all to 10.100.200.1/24 so that the cluster network traffic is completely separated.
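To make the numbers in the output above easier to read, here is a minimal Python sketch that recomputes the quorum threshold, lists the node IDs that are absent, and groups the present members by /24 network. It assumes one vote per node and that node IDs run contiguously from 1 to 19; the output only shows the IDs that are currently present, so the second point is a guess.

```python
import ipaddress
from collections import defaultdict

# Values copied from the `pvecm status` output above.
expected_votes = 19
members = {
    0x01: "10.100.200.23",
    0x03: "10.100.50.21",
    0x04: "10.100.50.24",
    0x05: "10.100.50.20",
    0x06: "10.100.50.22",
    0x08: "10.100.200.11",
    0x09: "10.100.50.12",
    0x0D: "10.100.50.17",
    0x0E: "10.100.50.18",
    0x13: "10.100.50.29",
}

# Majority quorum with one vote per node: floor(expected / 2) + 1.
quorum = expected_votes // 2 + 1
total_votes = len(members)
quorate = total_votes >= quorum

# ASSUMPTION: node IDs are contiguous from 1 to expected_votes.
missing_ids = sorted(set(range(1, expected_votes + 1)) - set(members))

# Group the present members by the /24 network their link address is in.
by_subnet = defaultdict(list)
for node_id, addr in members.items():
    net = ipaddress.ip_network(f"{addr}/24", strict=False)
    by_subnet[str(net)].append(node_id)

print(f"quorum={quorum} total_votes={total_votes} quorate={quorate}")
print(f"missing node IDs (assumed 1..{expected_votes}): {missing_ids}")
for net, ids in sorted(by_subnet.items()):
    print(f"{net}: {ids}")
```

With these values the cluster sits exactly at the quorum threshold (10 of 19 votes), so losing one more member would make it non-quorate.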

pve-cluster service status on cluster owner:

Code:

root@pve-node-13:/etc/corosync# systemctl status pve-cluster

● pve-cluster.service - The Proxmox VE cluster filesystem

Loaded: loaded (/lib/systemd/system/pve-cluster.service; enabled; preset: enabled)

Active: active (running) since Wed 2023-08-23 09:38:21 EEST; 6h ago

Process: 4786 ExecStart=/usr/bin/pmxcfs (code=exited, status=0/SUCCESS)

Main PID: 4803 (pmxcfs)

Tasks: 9 (limit: 154209)

Memory: 72.6M

CPU: 23.362s

CGroup: /system.slice/pve-cluster.service

└─4803 /usr/bin/pmxcfs

Aug 23 16:32:40 pve-node-13 pmxcfs[4803]: [status] notice: starting data syncronisation

Aug 23 16:32:40 pve-node-13 pmxcfs[4803]: [dcdb] notice: received sync request (epoch 1/4803/00000098)

Aug 23 16:32:40 pve-node-13 pmxcfs[4803]: [status] notice: received sync request (epoch 1/4803/0000006F)

Aug 23 16:33:11 pve-node-13 pmxcfs[4803]: [dcdb] notice: received all states

Aug 23 16:33:11 pve-node-13 pmxcfs[4803]: [dcdb] notice: leader is 1/4803

Aug 23 16:33:11 pve-node-13 pmxcfs[4803]: [dcdb] notice: synced members: 1/4803, 3/2329, 4/2394, 5/2289, 6/2323, 8/29550, 9/145555, 13/3678203, 14/3552, 19/2421

Aug 23 16:33:11 pve-node-13 pmxcfs[4803]: [dcdb] notice: start sending inode updates

Aug 23 16:33:11 pve-node-13 pmxcfs[4803]: [dcdb] notice: sent all (0) updates

Aug 23 16:33:11 pve-node-13 pmxcfs[4803]: [dcdb] notice: all data is up to date

Aug 23 16:33:11 pve-node-13 pmxcfs[4803]: [dcdb] notice: dfsm_deliver_queue: queue length 6

corosync service status on cluster owner:

Code:

root@pve-node-13:/etc/corosync# systemctl status corosync

● corosync.service - Corosync Cluster Engine

Loaded: loaded (/lib/systemd/system/corosync.service; enabled; preset: enabled)

Active: active (running) since Wed 2023-08-23 09:38:22 EEST; 6h ago

Docs: man:corosync

man:corosync.conf

man:corosync_overview

Main PID: 4921 (corosync)

Tasks: 9 (limit: 154209)

Memory: 1.6G

CPU: 2h 25min 31.873s

CGroup: /system.slice/corosync.service

└─4921 /usr/sbin/corosync -f

Aug 23 16:34:57 pve-node-13 corosync[4921]: [TOTEM ] Token has not been received in 17729 ms

Aug 23 16:34:57 pve-node-13 corosync[4921]: [KNET ] link: host: 18 link: 0 is down

Aug 23 16:34:57 pve-node-13 corosync[4921]: [KNET ] host: host: 18 (passive) best link: 0 (pri: 1)

Aug 23 16:34:57 pve-node-13 corosync[4921]: [KNET ] host: host: 18 has no active links

Aug 23 16:35:08 pve-node-13 corosync[4921]: [KNET ] rx: host: 18 link: 0 is up

Aug 23 16:35:08 pve-node-13 corosync[4921]: [KNET ] link: Resetting MTU for link 0 because host 18 joined

Aug 23 16:35:08 pve-node-13 corosync[4921]: [KNET ] host: host: 18 (passive) best link: 0 (pri: 1)

Aug 23 16:35:12 pve-node-13 corosync[4921]: [KNET ] rx: host: 17 link: 0 is up

Aug 23 16:35:12 pve-node-13 corosync[4921]: [KNET ] link: Resetting MTU for link 0 because host 17 joined

Aug 23 16:35:12 pve-node-13 corosync[4921]: [KNET ] host: host: 17 (passive) best link: 0 (pri: 1)

I would really appreciate some guidance on how to troubleshoot and identify my communication issues.
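In case it helps whoever looks at this, the link up/down events in the corosync log above can be tallied per host. This is only a rough Python sketch: the regular expression is my own guess based on the few lines shown here, not an official log format, and the sample contains just a handful of those lines.

```python
import re
from collections import Counter

# A few lines copied from the corosync log above.
log = """\
Aug 23 16:34:57 pve-node-13 corosync[4921]: [KNET ] link: host: 18 link: 0 is down
Aug 23 16:34:57 pve-node-13 corosync[4921]: [KNET ] host: host: 18 has no active links
Aug 23 16:35:08 pve-node-13 corosync[4921]: [KNET ] rx: host: 18 link: 0 is up
Aug 23 16:35:12 pve-node-13 corosync[4921]: [KNET ] rx: host: 17 link: 0 is up
"""

# ASSUMPTION: link state changes always look like
# "[KNET ] link|rx: host: <id> link: <n> is up|down".
pattern = re.compile(
    r"\[KNET\s*\] (?:link|rx): host: (\d+) link: (\d+) is (up|down)"
)

# Count events keyed by (host id, link number, new state).
events = Counter()
for line in log.splitlines():
    match = pattern.search(line)
    if match:
        host, link, state = int(match.group(1)), int(match.group(2)), match.group(3)
        events[(host, link, state)] += 1

for (host, link, state), count in sorted(events.items()):
    print(f"host {host} link {link}: {state} x{count}")
```

Fed with a longer excerpt (for example the text saved from `journalctl -u corosync`), the counts should show which hosts flap most often.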

Thanks in advance,

Bogdan