Hi Mates!

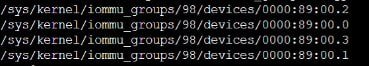

We are facing a problem with every GPU we pass through: when starting a new VM, the host gives up and does not change the power state of any of the PCI devices at boot.

Mar 28 17:55:50 ODIN kernel: vfio-pci 0000:89:00.0: can't change power state from D3cold to D0 (config space inaccessible)

Mar 28 17:55:50 ODIN kernel: vfio-pci 0000:89:00.0: can't change power state from D3cold to D0 (config space inaccessible)

Mar 28 17:55:50 ODIN kernel: vfio-pci 0000:89:00.1: can't change power state from D3cold to D0 (config space inaccessible)

Mar 28 17:55:50 ODIN kernel: vfio-pci 0000:89:00.2: can't change power state from D3cold to D0 (config space inaccessible)

Mar 28 17:55:50 ODIN kernel: vfio-pci 0000:89:00.3: can't change power state from D3cold to D0 (config space inaccessible)

Mar 28 17:55:52 ODIN kernel: pcieport 0000:89:00.0: not ready 1023ms after bus reset; waiting

Mar 28 17:55:53 ODIN kernel: pcieport 0000:89:00.0: not ready 2047ms after bus reset; waiting

Mar 28 17:55:55 ODIN kernel: pcieport 0000:89:00.0: not ready 4095ms after bus reset; waiting

Mar 28 17:56:00 ODIN kernel: pcieport 0000:89:00.0: not ready 8191ms after bus reset; waiting

Mar 28 17:56:08 ODIN kernel: pcieport 0000:89:00.0: not ready 16383ms after bus reset; waiting

Mar 28 17:56:25 ODIN kernel: pcieport 0000:89:00.0: not ready 32767ms after bus reset; waiting

Mar 28 17:56:49 ODIN pmxcfs[2832]: [status] notice: received log

Mar 28 17:56:59 ODIN kernel: pcieport 0000:89:00.0: not ready 65535ms after bus reset; giving up

Mar 28 17:56:59 ODIN kernel: vfio-pci 0000:89:00.0: invalid power transition (from D3cold to D3hot)
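The same messages repeat for several functions (0000:89:00.0 to .3), and the logged bus-reset waits roughly double each time (1023, 2047, ... 65535 ms) before the kernel gives up. To condense a longer `journalctl -k` excerpt before posting, here is a minimal sketch in Python. It assumes the log lines look exactly like the ones above. The regexes and the `summarize` helper are hypothetical and not part of Proxmox or any existing tool.

```python
import re
from collections import defaultdict

# PCI address in domain:bus:device.function form, e.g. 0000:89:00.1
BDF = r"(?P<bdf>[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])"

# Assumed format: "vfio-pci 0000:89:00.1: can't change power state from D3cold to D0 ..."
POWER_RE = re.compile(
    r"vfio-pci " + BDF + r": can't change power state from (?P<src>\S+) to (?P<dst>\S+)"
)
# Assumed format: "pcieport 0000:89:00.0: not ready 1023ms after bus reset; waiting"
RESET_RE = re.compile(
    r"pcieport " + BDF
    + r": not ready (?P<ms>\d+)ms after bus reset; (?P<action>waiting|giving up)"
)


def summarize(lines):
    """Group power-state failures and bus-reset waits by PCI address (hypothetical helper)."""
    power = defaultdict(set)   # BDF -> {(from_state, to_state)}
    waits = defaultdict(list)  # BDF -> wait times in ms, in log order
    gave_up = set()            # BDFs whose bus reset ended in "giving up"
    for line in lines:
        m = POWER_RE.search(line)
        if m:
            power[m["bdf"]].add((m["src"], m["dst"]))
            continue
        m = RESET_RE.search(line)
        if m:
            waits[m["bdf"]].append(int(m["ms"]))
            if m["action"] == "giving up":
                gave_up.add(m["bdf"])
    return dict(power), dict(waits), gave_up


if __name__ == "__main__":
    # A few lines copied from the log above; paste the full journal output instead.
    sample = """\
Mar 28 17:55:50 ODIN kernel: vfio-pci 0000:89:00.0: can't change power state from D3cold to D0 (config space inaccessible)
Mar 28 17:55:50 ODIN kernel: vfio-pci 0000:89:00.3: can't change power state from D3cold to D0 (config space inaccessible)
Mar 28 17:55:52 ODIN kernel: pcieport 0000:89:00.0: not ready 1023ms after bus reset; waiting
Mar 28 17:56:59 ODIN kernel: pcieport 0000:89:00.0: not ready 65535ms after bus reset; giving up
"""
    power, waits, gave_up = summarize(sample.splitlines())
    for bdf in sorted(power):
        print(bdf, sorted(power[bdf]))
    for bdf, ms in waits.items():
        print(bdf, "reset waits (ms):", ms, "gave up" if bdf in gave_up else "")
```

Lines that match neither pattern (such as the `pmxcfs` notice) are simply skipped, so the whole journal can be fed in unfiltered.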

Any advice?

Thanks in advance,

angel