hi,

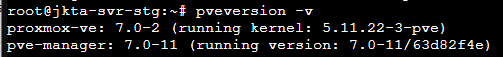

I need help: after upgrading Proxmox from version 6 to 7, a newly created VM fails to start with the error below.

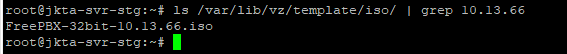

Code:

kvm: -drive file=/var/lib/vz/template/iso/FreePBX-32bit-10.13.66.iso,if=none,id=drive-ide2,media=cdrom,aio=io_uring: Unable to use io_uring: failed to init linux io_uring ring: Function not implemented

TASK ERROR: start failed: QEMU exited with code 1

The ISO file is in place:

thanks in advance