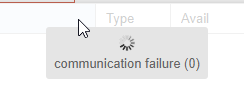

Any updates on this topic? I'm having what appears to be the same problem. Proxmox is just about useless right now, as I can't add any new machines or do anything requiring storage because it times out with a 596 error.

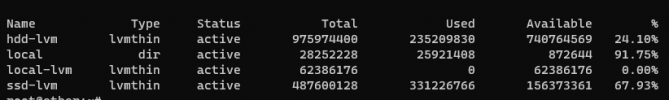

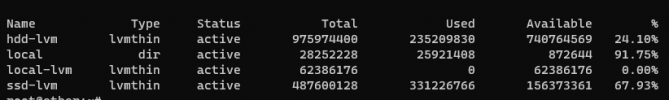

Running pvesm status takes a very long time 9 times out of 10. The other 1 time in 10 it's nearly instant.
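To put rough numbers on that "9 out of 10" impression, something like the sketch below could be run on the host. It only times repeated runs of a command and counts the slow ones. The function names, the 5 second "slow" threshold, and the 120 second timeout are my own assumptions for illustration, not anything Proxmox defines.

```python
import statistics
import subprocess
import time


def time_command(cmd, runs=10, timeout=120):
    """Run cmd `runs` times and return the wall-clock duration of each run in seconds."""
    durations = []
    for _ in range(runs):
        start = time.monotonic()
        try:
            # Output is discarded; only the elapsed time matters here.
            subprocess.run(
                cmd,
                stdout=subprocess.DEVNULL,
                stderr=subprocess.DEVNULL,
                timeout=timeout,
                check=False,
            )
        except subprocess.TimeoutExpired:
            # A run that hits the timeout is still recorded as a (very) slow run.
            pass
        durations.append(time.monotonic() - start)
    return durations


def summarize(durations, slow_threshold=5.0):
    """Count runs at or above slow_threshold (an arbitrary, assumed cutoff)."""
    slow = [d for d in durations if d >= slow_threshold]
    return {
        "runs": len(durations),
        "slow": len(slow),
        "median": statistics.median(durations),
        "max": max(durations),
    }
```

On the PVE host this would be called as `summarize(time_command(["pvesm", "status"]))`. Comparing the result before and after disabling a suspect storage would show whether that storage is the one causing the stalls.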

From the Datacenter storage view, I notice it's the CT items that time out.
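One way to narrow down which storage is stalling would be to query each one on its own with `pvesm status --storage <id>` instead of all at once. The sketch below reads the storage IDs from the text of `/etc/pve/storage.cfg` and builds one command per storage. It assumes the usual layout of that file (an unindented `type: id` line starting each section, with indented option lines below it), and the helper names are made up for this example.

```python
import re

# Section headers in storage.cfg are assumed to look like "dir: local".
SECTION_RE = re.compile(r"^(\S+):\s+(\S+)\s*$")


def parse_storage_ids(cfg_text):
    """Return a list of (type, storage_id) tuples from storage.cfg text."""
    entries = []
    for line in cfg_text.splitlines():
        # Skip blank lines, comments, and indented option lines.
        if not line or line[0].isspace() or line.startswith("#"):
            continue
        match = SECTION_RE.match(line)
        if match:
            entries.append((match.group(1), match.group(2)))
    return entries


def status_commands(entries):
    """Build one 'pvesm status --storage <id>' command per storage entry."""
    return [["pvesm", "status", "--storage", sid] for _, sid in entries]
```

Timing each of those commands separately should show whether one storage is responsible for the timeouts or whether every query is slow.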

Since New Year's I've been evaluating 6 different virtualization solutions, and I've already had to rebuild Proxmox VE 3 times because it seems to develop some kind of issue that all but halts use. Only one other solution needed a rebuild: XCP-ng required a reinstall, as it was easier to start fresh with clusters that way. I haven't even gotten to Ceph or clusters yet with Proxmox.

Prior to this issue I thought everything was looking good, but shortly after adding Turnkey containers and the latest updates it went downhill again. I personally really like the way Proxmox VE works, and it seems to have the best core power features specifically for virtualization. But it seems to be quirky and to have lots of "cosmetic errors". That's kind of a problem, because when a real problem shows up and you're looking at logs and come across these other "cosmetic errors", you can easily start chasing a problem that doesn't exist. That's probably at least partially responsible for the previous rebuilds.

Another strange thing when testing ZFS pools is that it randomly drops drive IDs (still properly set up) for parts of the pool in vdev 0 and 1. Worse, the first drive in vdev 2 wasn't set up in that vdev but was a spare, so after the replacement it should have gone back to spare duty. So far I've only seen this happen with odd-numbered drives in the vdev. I've been testing all kinds of different pool setups, from 4-way mirrors stacked 30 high to raidz3 15 drives wide and stacked 7 high. ZFS run as part of Proxmox seems to hit a performance wall at about 30 to 40 spinning-rust drives, even with 3 SAS controllers in use, 40 cores from 2 Xeon Golds, and 128GB of memory available, with no containers or VMs running.

View attachment 36936

I don't know if it's kernel tuning or what, but the same pools imported into Debian Bullseye with ZFS installed continue to get faster as additional vdevs are added. I originally thought it was just me trying to adapt to VE, but I'm not having these issues elsewhere. Next week I'm going to try another lab machine, a Supermicro FatTwin with 8 Xeons and 150 or so SAS drives, to see if maybe it just doesn't like the Dell servers.

I finally decided it was time to post a very brief synopsis and, of course, to see if there is any movement on this issue, since it's a showstopper for me right now.

Thanks,

Carlo