Solved: I gave up and rebuilt the entire cluster.

I wanted to upgrade all of my nodes from 7.1.7 to 7.2, but one node will not allow migration.

I tried migrating while the node was still on 7.1.7 and got the errors below, so I upgraded it to 7.2 with the guests still on it. A reboot made no difference.

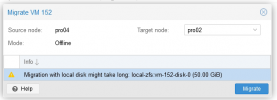

2022-08-29 18:33:59 starting migration of VM 152 to node 'pro02' (10.0.0.71)

2022-08-29 18:33:59 found local disk 'local-zfs:vm-152-disk-0' (in current VM config)

2022-08-29 18:33:59 copying local disk images

Use of uninitialized value $target_storeid in string eq at /usr/share/perl5/PVE/Storage.pm line 776.

Use of uninitialized value $targetsid in concatenation (.) or string at /usr/share/perl5/PVE/QemuMigrate.pm line 565.

2022-08-29 18:33:59 ERROR: storage migration for 'local-zfs:vm-152-disk-0' to storage '' failed - no storage ID specified

2022-08-29 18:33:59 aborting phase 1 - cleanup resources

2022-08-29 18:33:59 ERROR: migration aborted (duration 00:00:00): storage migration for 'local-zfs:vm-152-disk-0' to storage '' failed - no storage ID specified

TASK ERROR: migration aborted

2022-08-29 17:51:41 starting migration of VM 323 to node 'pro03' (10.0.0.72)

2022-08-29 17:51:42 found local disk 'local-zfs:vm-323-disk-0' (in current VM config)

2022-08-29 17:51:42 found local disk 'local-zfs:vm-323-disk-1' (in current VM config)

2022-08-29 17:51:42 copying local disk images

Use of uninitialized value $target_storeid in string eq at /usr/share/perl5/PVE/Storage.pm line 776.

Use of uninitialized value $targetsid in concatenation (.) or string at /usr/share/perl5/PVE/QemuMigrate.pm line 565.

2022-08-29 17:51:42 ERROR: storage migration for 'local-zfs:vm-323-disk-0' to storage '' failed - no storage ID specified

2022-08-29 17:51:42 aborting phase 1 - cleanup resources

2022-08-29 17:51:42 ERROR: migration aborted (duration 00:00:01): storage migration for 'local-zfs:vm-323-disk-0' to storage '' failed - no storage ID specified

TASK ERROR: migration aborted

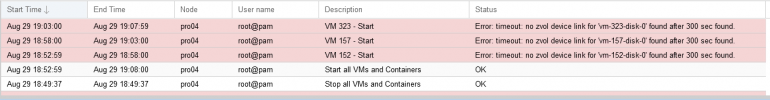

After the reboot, the guests won't start either. Is there no zvol device?

# zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

rpool 3.62T 48.7G 3.58T - - 2% 1% 1.00x ONLINE -

# zfs list

NAME USED AVAIL REFER MOUNTPOINT

rpool 35.4G 2.52T 151K /rpool

rpool/ROOT 35.3G 2.52T 140K /rpool/ROOT

rpool/ROOT/pve-1 35.3G 2.52T 35.3G /

rpool/data 140K 2.52T 140K /rpool/data

# zpool status

pool: rpool

state: ONLINE

scan: scrub repaired 0B in 00:16:48 with 0 errors on Sun Aug 14 00:40:50 2022

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

scsi-35000c50059222917-part3 ONLINE 0 0 0

scsi-35000c500628f656f-part3 ONLINE 0 0 0

scsi-35000c500565777eb-part3 ONLINE 0 0 0

scsi-35000c500565353db-part3 ONLINE 0 0 0

errors: No known data errors
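
Comparing the two outputs above by hand: the migration log references vm-152-disk-0, vm-323-disk-0 and vm-323-disk-1, but `zfs list` shows nothing under rpool/data. A rough way to cross-check this is sketched below in Python. It assumes the `local-zfs` storage maps to the `rpool/data` dataset (the usual default on a ZFS install, but nothing in this post confirms it), and the function names are made up for illustration only.

```python
import re

# Sample input copied from the output posted above.
SAMPLE_LOG = """\
2022-08-29 17:51:42 found local disk 'local-zfs:vm-323-disk-0' (in current VM config)
2022-08-29 17:51:42 found local disk 'local-zfs:vm-323-disk-1' (in current VM config)
"""

SAMPLE_ZFS_LIST = """\
NAME USED AVAIL REFER MOUNTPOINT
rpool 35.4G 2.52T 151K /rpool
rpool/ROOT 35.3G 2.52T 140K /rpool/ROOT
rpool/ROOT/pve-1 35.3G 2.52T 35.3G /
rpool/data 140K 2.52T 140K /rpool/data
"""


def disks_in_log(log):
    """Collect volume names (e.g. 'vm-323-disk-0') from 'found local disk' lines."""
    return set(re.findall(r"found local disk '[^:']+:([^']+)'", log))


def datasets_in_zfs_list(output):
    """Collect dataset names from `zfs list` output, skipping the header row."""
    names = set()
    for line in output.splitlines():
        fields = line.split()
        if fields and fields[0] != "NAME":
            names.add(fields[0])
    return names


def missing_volumes(log, zfs_output, parent="rpool/data"):
    """List volumes the log expects that do not exist under `parent`.

    `parent` is an assumption: the dataset backing the 'local-zfs' storage.
    """
    present = datasets_in_zfs_list(zfs_output)
    return sorted(d for d in disks_in_log(log) if f"{parent}/{d}" not in present)


if __name__ == "__main__":
    print(missing_volumes(SAMPLE_LOG, SAMPLE_ZFS_LIST))
    # prints ['vm-323-disk-0', 'vm-323-disk-1']
```

If that assumption holds, both disks for VM 323 come back as missing, which would be consistent with the guests refusing to start. It does not explain why the datasets are gone.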

I have found and read lots of posts, but I don't want to break this setup. Can anyone provide a known-correct solution or a link to an article, something that is not just 'try this'?

