I have four nodes with LAN addresses 192.168.131.1, .2, .3 and .4. They are all configured identically except for the last octet of the IP address: NodeA has .1, NodeB has .2, NodeC has .3 and NodeD has .4.
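As a quick sanity check on an addressing scheme like this, here is a minimal Python sketch. It builds each host's address from the /24 network and its last octet, then confirms that every address falls inside the subnet and that no two hosts share one. The host labels are hypothetical. The octets (.1 to .4 for the nodes, .252/.253 for the pfSense guests) come from this post.

```python
import ipaddress

# LAN subnet from the post; host labels are hypothetical, octets are from the post.
LAN = ipaddress.ip_network("192.168.131.0/24")
HOSTS = {
    "NodeA": 1,
    "NodeB": 2,
    "NodeC": 3,
    "NodeD": 4,
    "pfSense-primary": 252,
    "pfSense-backup": 253,
}


def address_plan(network, hosts):
    """Map each host name to network base + last octet, rejecting out-of-subnet results."""
    plan = {}
    for name, octet in hosts.items():
        addr = network.network_address + octet
        if addr not in network:
            raise ValueError(f"{name}: {addr} is outside {network}")
        plan[name] = addr
    return plan


def duplicate_addresses(plan):
    """Return (first_host, second_host, address) for every address used more than once."""
    seen = {}
    dups = []
    for name, addr in plan.items():
        if addr in seen:
            dups.append((seen[addr], name, addr))
        else:
            seen[addr] = name
    return dups


if __name__ == "__main__":
    plan = address_plan(LAN, HOSTS)
    for name, addr in plan.items():
        print(f"{name:16} {addr}")
    print("duplicates:", duplicate_addresses(plan) or "none")
```

This only checks the plan on paper. It says nothing about what is actually configured on each host.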

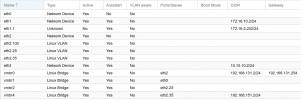

NodeB for example:

vmbr0 is the LAN bridge, and it is also the LAN interface of a pfSense guest. vmbr1 is the internet gateway. This allows the pfSense guest to run on any node and still provide both internet access and access to whatever else pfSense exposes. I also connect to the pfSense guest via OpenVPN for remote access.

Since Sunday night, however, seemingly out of the blue, NodeB can no longer reach pfSense, and pfSense cannot reach NodeB either. Nothing of the sort is wrong on NodeA, C or D, and I can still reach NodeB from NodeA, C and D.

pfSense can reach NodeA, C and D and all the guests running on them, but nothing running on NodeB (which is to be expected, since it can't reach NodeB's vmbr0 bridge). However, pfSense only "knows" about the 192.168.131.0/24 network through the LAN bridge vmbr0; it has no specific knowledge of NodeB. So how is it possible that NodeB is unreachable while A, C and D are fine?

I have a second pfSense guest (as a CARP failover), which shows exactly the same behaviour. The two have LAN addresses 192.168.131.252 (primary) and 192.168.131.253 (backup). They can ping each other on those addresses, but neither can ping 192.168.131.2 (NodeB's LAN bridge). It is almost as if there's an IP address blocker somewhere. If only one firewall showed this behaviour I would have suspected that something went wrong in pfSense, but both do the same thing. This leads me to conclude that the problem is most likely on NodeB.
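The reasoning above can be made explicit with a small reachability table. This Python sketch records only the ping outcomes stated in this post, keyed by hypothetical host labels. It finds the host common to every failed pair, then splits that host's peers into those it can and cannot reach. The result points at NodeB, and specifically at the NodeB to pfSense path rather than NodeB in general.

```python
# Ping outcomes as described in the post: (source, destination) -> reachable.
# The labels are hypothetical; only the True/False outcomes come from the post.
OBSERVED = {
    ("pfSense-primary", "pfSense-backup"): True,
    ("pfSense-backup", "pfSense-primary"): True,
    ("pfSense-primary", "NodeA"): True,
    ("pfSense-primary", "NodeC"): True,
    ("pfSense-primary", "NodeD"): True,
    ("pfSense-primary", "NodeB"): False,
    ("pfSense-backup", "NodeB"): False,
    ("NodeB", "pfSense-primary"): False,
    ("NodeA", "NodeB"): True,
    ("NodeC", "NodeB"): True,
    ("NodeD", "NodeB"): True,
}


def failed_pairs(observed):
    """List every (source, destination) pair whose ping failed."""
    return [pair for pair, ok in observed.items() if not ok]


def common_to_all_failures(observed):
    """Return the hosts that appear in every failed pair (empty set if nothing failed)."""
    failures = failed_pairs(observed)
    if not failures:
        return set()
    common = set(failures[0])
    for pair in failures[1:]:
        common &= set(pair)
    return common


def peers_by_outcome(observed, host):
    """Split a host's peers into those with a working ping and those with a failed one."""
    reachable, unreachable = set(), set()
    for (src, dst), ok in observed.items():
        if host not in (src, dst):
            continue
        peer = dst if src == host else src
        (reachable if ok else unreachable).add(peer)
    return reachable, unreachable


if __name__ == "__main__":
    suspects = common_to_all_failures(OBSERVED)
    print("in every failed pair:", suspects)
    for host in sorted(suspects):
        ok, bad = peers_by_outcome(OBSERVED, host)
        print(host, "works with:", sorted(ok), "fails with:", sorted(bad))
```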

Is there anything in Proxmox that could be blocking traffic? (No, the Proxmox firewall is not being used.)

Any suggestions on how I can find the cause of this?
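One possible place to look is NodeB's neighbour (ARP) table, as shown by `ip neigh show` from iproute2. If the pfSense addresses show up as FAILED or INCOMPLETE, NodeB is not getting ARP replies for them. If they show a MAC address, it may be worth comparing it with the MAC of the pfSense LAN vNIC. The Python sketch below parses that output and reports the entries for the two pfSense addresses from this post. It assumes the usual `ip neigh` line layout (address, `dev`, optional `lladdr`, state as the last word). It is meant to be run on NodeB.

```python
import subprocess

# pfSense LAN addresses taken from the post.
PFSENSE_ADDRS = ("192.168.131.252", "192.168.131.253")


def parse_neigh(text):
    """Parse `ip neigh show` output into {address: (device, lladdr, state)}.

    lladdr is None when the entry has no MAC address (e.g. FAILED or INCOMPLETE).
    """
    entries = {}
    for line in text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        dev = tokens[tokens.index("dev") + 1] if "dev" in tokens else None
        lladdr = tokens[tokens.index("lladdr") + 1] if "lladdr" in tokens else None
        entries[tokens[0]] = (dev, lladdr, tokens[-1])  # state is the last word
    return entries


def main():
    # Assumes iproute2's `ip` command is available (it is on Proxmox VE hosts).
    result = subprocess.run(
        ["ip", "neigh", "show"], capture_output=True, text=True, check=True
    )
    entries = parse_neigh(result.stdout)
    for addr in PFSENSE_ADDRS:
        dev, lladdr, state = entries.get(addr, (None, None, "no entry"))
        print(f"{addr}: dev={dev} lladdr={lladdr} state={state}")


if __name__ == "__main__":
    main()
```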

PS. I also paged through the syslog. One moment everything seems fine, and the next the Proxmox Backup Server cannot be reached (timeout). There is no other indication of a problem.

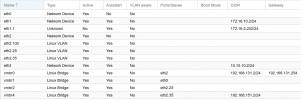
