Hi guys!

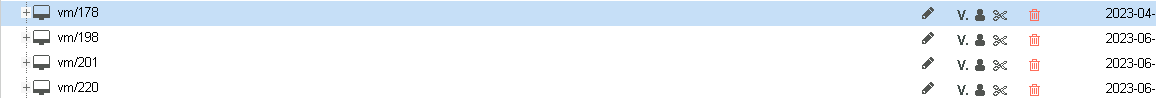

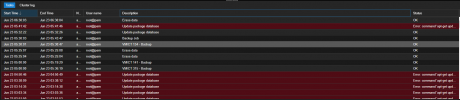

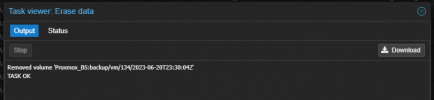

I've noticed that backup jobs created in Proxmox VE 7.4 no longer run on schedule. We moved the backup storage, which changed its IP address, and re-mounted it on the server (the mount point did not change). Since then the jobs have not run on schedule, although starting them manually completes without errors.

UPD: I have now started updating to PVE 7.4-13.

UPD: Some of the backup schedules still work fine. I tried inspecting the syslog, but it shows no errors or anything else relevant. I also asked in the PBS thread, but got no answers.

Code:

    # pveversion -v
    proxmox-ve: 7.4-1 (running kernel: 5.15.102-1-pve)
    pve-manager: 7.4-3 (running version: 7.4-3/9002ab8a)
    pve-kernel-5.15: 7.3-3
    pve-kernel-5.13: 7.1-9
    pve-kernel-5.15.102-1-pve: 5.15.102-1
    pve-kernel-5.15.83-1-pve: 5.15.83-1
    pve-kernel-5.15.74-1-pve: 5.15.74-1
    pve-kernel-5.15.64-1-pve: 5.15.64-1
    pve-kernel-5.13.19-6-pve: 5.13.19-15
    ceph: 16.2.11-pve1
    ceph-fuse: 16.2.11-pve1
    corosync: 3.1.7-pve1
    criu: 3.15-1+pve-1
    glusterfs-client: 9.2-1
    ifupdown: residual config
    ifupdown2: 3.1.0-1+pmx3
    libjs-extjs: 7.0.0-1
    libknet1: 1.24-pve2
    libproxmox-acme-perl: 1.4.4
    libproxmox-backup-qemu0: 1.3.1-1
    libproxmox-rs-perl: 0.2.1
    libpve-access-control: 7.4-2
    libpve-apiclient-perl: 3.2-1
    libpve-common-perl: 7.3-3
    libpve-guest-common-perl: 4.2-4
    libpve-http-server-perl: 4.2-1
    libpve-rs-perl: 0.7.5
    libpve-storage-perl: 7.4-2
    libspice-server1: 0.14.3-2.1
    lvm2: 2.03.11-2.1
    lxc-pve: 5.0.2-2
    lxcfs: 5.0.3-pve1
    novnc-pve: 1.4.0-1
    openvswitch-switch: 2.15.0+ds1-2+deb11u2.1
    proxmox-backup-client: 2.3.3-1
    proxmox-backup-file-restore: 2.3.3-1
    proxmox-kernel-helper: 7.4-1
    proxmox-mail-forward: 0.1.1-1
    proxmox-mini-journalreader: 1.3-1
    proxmox-offline-mirror-helper: 0.5.1-1
    proxmox-widget-toolkit: 3.6.4
    pve-cluster: 7.3-3
    pve-container: 4.4-3
    pve-docs: 7.4-2
    pve-edk2-firmware: 3.20230228-1
    pve-firewall: 4.3-1
    pve-firmware: 3.6-4
    pve-ha-manager: 3.6.0
    pve-i18n: 2.11-1
    pve-qemu-kvm: 7.2.0-8
    pve-xtermjs: 4.16.0-1
    qemu-server: 7.4-2
    smartmontools: 7.2-pve3
    spiceterm: 3.2-2
    swtpm: 0.8.0~bpo11+3
    vncterm: 1.7-1
    zfsutils-linux: 2.1.9-pve1

I checked the syslog around the time the backup was supposed to start, but it contains nothing about the backup job.

Code:

    Jun 13 14:42:04 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:02 bf01pve04 pvedaemon[3084903]: <root@pam> successful auth for user 'root@pam'
    Jun 13 14:45:02 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:02 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:02 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:02 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:03 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:03 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:04 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:05 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:05 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:05 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:08 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:10 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:45:13 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:46:52 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:46:52 bf01pve04 pmxcfs[1429]: [status] notice: received log
    Jun 13 14:46:52 bf01pve04 systemd[1]: Created slice User Slice of UID 0.
    Jun 13 14:46:52 bf01pve04 systemd[1]: Starting User Runtime Directory /run/user/0...
    Jun 13 14:46:52 bf01pve04 systemd[1]: Finished User Runtime Directory /run/user/0.
    Jun 13 14:46:52 bf01pve04 systemd[1]: Starting User Manager for UID 0...
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Queued start job for default target Main User Target.
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Created slice User Application Slice.
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Reached target Paths.
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Reached target Timers.
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Starting D-Bus User Message Bus Socket.
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Listening on GnuPG network certificate management daemon.
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Listening on GnuPG cryptographic agent and passphrase cache (access for web browsers).
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Listening on GnuPG cryptographic agent and passphrase cache (restricted).
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Listening on GnuPG cryptographic agent (ssh-agent emulation).
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Listening on GnuPG cryptographic agent and passphrase cache.
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Listening on D-Bus User Message Bus Socket.
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Reached target Sockets.
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Reached target Basic System.
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Reached target Main User Target.
    Jun 13 14:46:52 bf01pve04 systemd[3130027]: Startup finished in 129ms.
    Jun 13 14:46:52 bf01pve04 systemd[1]: Started User Manager for UID 0.
    Jun 13 14:46:52 bf01pve04 systemd[1]: Started Session 2721 of user root.
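The excerpt above is mostly `pmxcfs` and `systemd` noise, so a narrower view of the log may help. The following is only a minimal sketch: it assumes scheduled backups on PVE 7.x are started by the `pvescheduler` service and run through `vzdump`, neither of which is confirmed by anything in this post. The helper name `filter_backup_log` is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: keep only log lines that mention the job scheduler
# or the backup tool. Assumption: scheduled backups on PVE 7.x are started
# by the pvescheduler service and executed by vzdump.
filter_backup_log() {
    # grep exits non-zero when nothing matches; "|| true" keeps the
    # function usable in scripts that run with "set -e".
    grep -E 'pvescheduler|vzdump' || true
}

# Possible usage on the node (adjust the time window to the scheduled start):
#   journalctl --since "14:40" --until "14:50" | filter_backup_log
#   systemctl status pvescheduler
```

If the filter prints nothing for the window in which a job was due, the scheduler probably never attempted to start the job. If it prints errors, the job was started and then failed.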
