I set up a VM to use as a Samba server, but I forgot to exclude the attached disks from the VM backup.

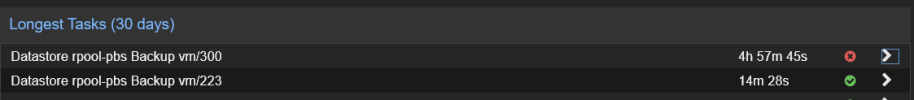

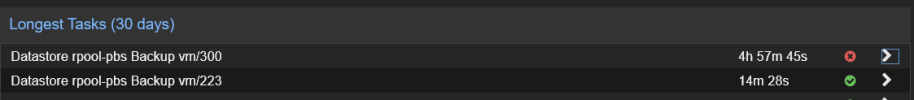

So the VM backup ran last night and filled up the Proxmox Backup Server datastore. There is no record of a backup for that VM.

I've looked for a way to remove the backup manually, but it does not seem to exist. Nonetheless, the datastore shows as 100% full.

How can I resolve this without having to wipe the datastore and recreate it?

Some additional info:

The offending VM is ID 300, but there is no record of it in the datastore:

Log from botched backup job:

2024-12-28T01:05:51-07:00: GET /previous: 400 Bad Request: no valid previous backup

2024-12-28T01:05:51-07:00: created new fixed index 1 ("ns/main/vm/300/2024-12-28T08:05:51Z/drive-scsi0.img.fidx")

2024-12-28T01:05:51-07:00: created new fixed index 2 ("ns/main/vm/300/2024-12-28T08:05:51Z/drive-scsi1.img.fidx")

2024-12-28T01:05:51-07:00: created new fixed index 3 ("ns/main/vm/300/2024-12-28T08:05:51Z/drive-scsi2.img.fidx")

2024-12-28T01:05:51-07:00: add blob "/mnt/datastore/rpool-pbs/ns/main/vm/300/2024-12-28T08:05:51Z/qemu-server.conf.blob" (449 bytes, comp: 449)

2024-12-28T06:03:36-07:00: POST /fixed_chunk: 400 Bad Request: inserting chunk on store 'rpool-pbs' failed for d3de47ca866c3e346db7f1c8d3498ff023957f90f865e13e74437d0fb5c94d19 - mkstemp "/mnt/datastore/rpool-pbs/.chunks/d3de/d3de47ca866c3e346db7f1c8d3498ff023957f90f865e13e74437d0fb5c94d19.tmp_XXXXXX" failed: ENOSPC: No space left on device

2024-12-28T06:03:36-07:00: backup ended and finish failed: backup ended but finished flag is not set.

2024-12-28T06:03:36-07:00: removing unfinished backup

2024-12-28T06:03:36-07:00: removing backup snapshot "/mnt/datastore/rpool-pbs/ns/main/vm/300/2024-12-28T08:05:51Z"

2024-12-28T06:03:36-07:00: TASK ERROR: backup ended but finished flag is not set.

2024-12-28T06:03:36-07:00: POST /fixed_chunk: 400 Bad Request: inserting chunk on store 'rpool-pbs' failed for a6c1dfd01908d7bcafdb02dc7d0b3db6497a412352bd5c20b54b08f7fa4f56d2 - mkstemp "/mnt/datastore/rpool-pbs/.chunks/a6c1/a6c1dfd01908d7bcafdb02dc7d0b3db6497a412352bd5c20b54b08f7fa4f56d2.tmp_XXXXXX" failed: ENOSPC: No space left on device

--- edited for length, but it is more of the same as above ---