Hello,

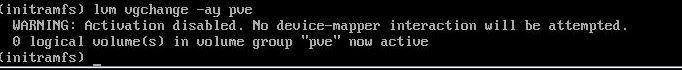

After upgrade to version 7.2-4, when booting up, I am taken to the following screen:

----

I tried to boot with an older kernel version, the system started, but I encounter the following problems when trying to start VM.

pveversion -v:

Can anyone help me with that ?

After upgrade to version 7.2-4, when booting up, I am taken to the following screen:

----

I tried booting with an older kernel version and the system started, but I get the following errors when trying to start a VM:

Code:

WARNING: Activation disabled. No device-mapper interaction will be attempted.

WARNING: Activation disabled. No device-mapper interaction will be attempted.

WARNING: Activation disabled. No device-mapper interaction will be attempted.

kvm: -drive file=/mnt/pve/iso_storage/template/iso/virtio-win-0.1.141.iso,if=none,id=drive-ide0,media=cdrom,aio=io_uring: Unable to use io_uring: failed to init linux io_uring ring: Function not implemented

TASK ERROR: start failed: QEMU exited with code 1

pveversion -v:

Code:

proxmox-ve: 7.2-1 (running kernel: 4.15.18-2-pve)

pve-manager: 7.2-4 (running version: 7.2-4/ca9d43cc)

pve-kernel-5.15: 7.2-3

pve-kernel-helper: 7.2-3

pve-kernel-5.15.35-1-pve: 5.15.35-3

pve-kernel-5.15.7-1-pve: 5.15.7-1

pve-kernel-4.15: 5.4-19

pve-kernel-4.15.18-30-pve: 4.15.18-58

pve-kernel-4.15.18-9-pve: 4.15.18-30

pve-kernel-4.15.18-2-pve: 4.15.18-21

pve-kernel-4.15.17-1-pve: 4.15.17-9

pve-kernel-4.13.13-2-pve: 4.13.13-33

ceph-fuse: 16.2.7

corosync: 3.1.5-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown: 0.8.36+pve1

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.22-pve2

libproxmox-acme-perl: 1.4.2

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.1-8

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.2-1

libpve-guest-common-perl: 4.1-2

libpve-http-server-perl: 4.1-2

libpve-storage-perl: 7.2-4

libqb0: 1.0.5-1~bpo9+2

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 4.0.12-1

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.2.1-1

proxmox-backup-file-restore: 2.2.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.5.1

pve-cluster: 7.2-1

pve-container: 4.2-1

pve-docs: 7.2-2

pve-edk2-firmware: 3.20210831-2

pve-firewall: 4.2-5

pve-firmware: 3.4-2

pve-ha-manager: 3.3-4

pve-i18n: 2.7-2

pve-qemu-kvm: 6.2.0-7

pve-xtermjs: 4.16.0-1

qemu-server: 7.2-3

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.7.1~bpo11+1

vncterm: 1.7-1

zfsutils-linux: 2.1.4-pve1

Can anyone help me with that?
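
In case it helps narrow things down: the `Function not implemented` error suggests that the kernel I am currently running has no io_uring support, which as far as I know was first added in mainline Linux 5.1. Below is a minimal Python sketch that pulls the running kernel out of `pveversion -v` output and compares it against 5.1. It assumes the `proxmox-ve` line format shown above; the helper names `running_kernel` and `kernel_has_io_uring` are my own and not part of any Proxmox tool.

```python
import re

# Assumption: io_uring first shipped in mainline Linux 5.1.
IO_URING_MIN = (5, 1)


def running_kernel(pveversion_output: str) -> str:
    """Return the running kernel string from `pveversion -v` output."""
    match = re.search(r"running kernel:\s*([^\s)]+)", pveversion_output)
    if match is None:
        raise ValueError("no 'running kernel' entry found")
    return match.group(1)


def kernel_has_io_uring(kernel: str) -> bool:
    """True if the kernel's major.minor version is at least 5.1."""
    major, minor = (int(part) for part in kernel.split(".")[:2])
    return (major, minor) >= IO_URING_MIN


# First line of the pveversion output from this post.
sample = "proxmox-ve: 7.2-1 (running kernel: 4.15.18-2-pve)"
kernel = running_kernel(sample)
print(kernel, kernel_has_io_uring(kernel))
```

With the output from this post, the check reports the 4.15 kernel as too old for io_uring. So the VM start failure is probably just a side effect of falling back to the old kernel, and the actual problem is that the 5.15 kernel does not boot.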