Hello everyone,

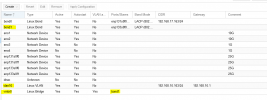

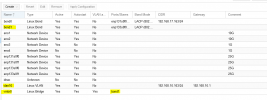

I have a PVE host with a bond interface that I use for management and clustering, and also as the NIC for the VMs.

Each VM uses the bridge as its NIC, and each NIC has a VLAN tag.

Unfortunately, the VM does not get a network connection. What am I doing wrong?

Thank you and best regards

Code:

root@PVE004:~# pveversion -v

proxmox-ve: 7.3-1 (running kernel: 5.15.83-1-pve)

pve-manager: 7.3-4 (running version: 7.3-4/d69b70d4)

pve-kernel-helper: 7.3-3

pve-kernel-5.15: 7.3-1

pve-kernel-5.13: 7.1-9

pve-kernel-5.4: 6.4-15

pve-kernel-5.15.83-1-pve: 5.15.83-1

pve-kernel-5.15.74-1-pve: 5.15.74-1

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.4.174-2-pve: 5.4.174-2

pve-kernel-5.4.143-1-pve: 5.4.143-1

ceph: 16.2.9-pve1

ceph-fuse: 16.2.9-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown: residual config

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.3

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.3-1

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.3-2

libpve-guest-common-perl: 4.2-3

libpve-http-server-perl: 4.1-5

libpve-storage-perl: 7.3-2

libqb0: 1.0.5-1

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.0-3

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.3.2-1

proxmox-backup-file-restore: 2.3.2-1

proxmox-mini-journalreader: 1.3-1

proxmox-offline-mirror-helper: 0.5.0-1

proxmox-widget-toolkit: 3.5.3

pve-cluster: 7.3-2

pve-container: 4.4-2

pve-docs: 7.3-1

pve-edk2-firmware: 3.20220526-1

pve-firewall: 4.2-7

pve-firmware: 3.6-3

pve-ha-manager: 3.5.1

pve-i18n: 2.8-2

pve-qemu-kvm: 7.1.0-4

pve-xtermjs: 4.16.0-1

qemu-server: 7.3-3

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+2

vncterm: 1.7-1

zfsutils-linux: 2.1.9-pve1

Code:

root@PVE004:~# cat /etc/network/interfaces

# network interface settings; autogenerated

# Please do NOT modify this file directly, unless you know what

# you're doing.

#

# If you want to manage parts of the network configuration manually,

# please utilize the 'source' or 'source-directory' directives to do

# so.

# PVE will preserve these directives, but will NOT read its network

# configuration from sourced files, so do not attempt to move any of

# the PVE managed interfaces into external files!

auto lo

iface lo inet loopback

iface eno3 inet manual

#1G

auto eno1

iface eno1 inet manual

#10G

auto eno2

iface eno2 inet manual

#10G

iface eno4 inet manual

#1G

auto enp131s0f0

iface enp131s0f0 inet manual

#25G

auto enp131s0f1

iface enp131s0f1 inet manual

#25G

iface idrac inet manual

auto enp133s0f0

iface enp133s0f0 inet manual

#25G

auto enp133s0f1

iface enp133s0f1 inet manual

#25G

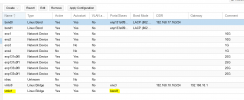

auto bond0

iface bond0 inet static

address 192.168.17.163/24

bond-slaves enp131s0f0 enp131s0f1

bond-miimon 100

bond-mode 802.3ad

bond-xmit-hash-policy layer2+3

mtu 9000

auto bond1

iface bond1 inet manual

bond-slaves enp133s0f0 enp133s0f1

bond-miimon 100

bond-mode 802.3ad

bond-xmit-hash-policy layer2+3

mtu 9000

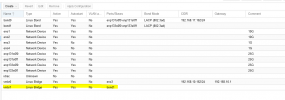

auto vmbr0

iface vmbr0 inet manual

bridge-ports bond1

bridge-stp off

bridge-fd 0

bridge-vlan-aware yes

bridge-vids 2-4094

mtu 9000

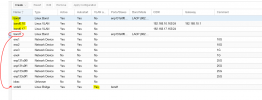

auto vlan10

iface vlan10 inet static

address 192.168.10.163/24

gateway 192.168.10.1

mtu 9000

vlan-raw-device bond1

Code:

root@PVE004:~# cat /etc/pve/nodes/PVE004/qemu-server/400.conf

agent: 1,fstrim_cloned_disks=1

args: -uuid 00000000-0000-0000-0000-000000000400

bios: ovmf

boot: order=sata0;ide0

cores: 2

cpu: host

efidisk0: PVNAS1-Vm:400/vm-400-disk-1.qcow2,size=128K

hostpci0: 0000:82:00.0,device-id=0x1e30,mdev=nvidia-266,sub-device-id=0x129e,sub-vendor-id=0x10de,vendor-id=0x10de,x-vga=1

ide0: none,media=cdrom

machine: pc-q35-7.1

memory: 22528

name: vPC62

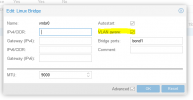

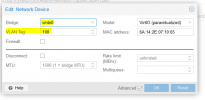

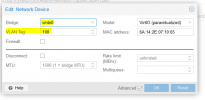

net0: virtio=6A:14:2E:07:10:65,bridge=vmbr0,tag=100

numa: 1

onboot: 1

ostype: win10

sata0: PVNAS1-Vm:400/vm-400-disk-0.qcow2,cache=writeback,discard=on,size=50G,ssd=1

scsihw: virtio-scsi-pci

smbios1: uuid=82c4495f-dd27-4ff7-aae4-fcd73bf8d785

sockets: 2

tpmstate0: PVNAS1-Vm:400/vm-400-disk-0.raw,size=4M,version=v2.0

vmgenid: ac40cb95-1645-40eb-9e43-2f230833bda8
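
In case it helps with narrowing this down, here is a minimal sketch of the checks that could be run on the host for the tagged path from the VM through vmbr0 to bond1. It assumes the iproute2 `ip` and `bridge` tools and `tcpdump` are available on the host. The helper names `net_opt` and `suggest_checks` are hypothetical and not part of PVE. The script only prints the commands and does not run them.

```shell
#!/bin/sh
# Hypothetical helper: pull one key=value option out of a PVE netX line.
# $1 = option name (e.g. bridge, tag), $2 = the netX line from the VM config
net_opt() {
    printf '%s\n' "$2" | tr ',' '\n' | sed -n "s/^$1=//p"
}

# Hypothetical helper: print (not run) checks for the path bridge -> uplink.
# $1 = netX line, $2 = uplink enslaved to the bridge (bond1 in the config above)
suggest_checks() {
    bridge=$(net_opt bridge "$1")
    tag=$(net_opt tag "$1")
    # Bridge details, including whether VLAN filtering is enabled
    echo "ip -d link show $bridge"
    # VLAN IDs allowed on the uplink port of the bridge
    echo "bridge vlan show dev $2"
    # LACP state of the bond and its member links
    echo "cat /proc/net/bonding/$2"
    # Watch for frames carrying the VM's VLAN tag on the uplink
    echo "tcpdump -e -n -i $2 vlan $tag"
}
```

For the VM above it could be called as `suggest_checks 'net0: virtio=6A:14:2E:07:10:65,bridge=vmbr0,tag=100' bond1`. If tagged frames leave bond1 but nothing comes back, the switch side of the trunk might be worth a look, though that is only a guess at this point.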