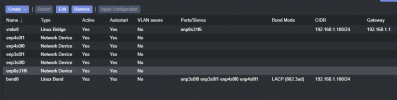

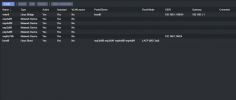

I installed PVE 7 using my motherboard's integrated 1GbE port as the main interface. The server is connected to a D-Link switch that also supports bonding. I added a PCIe quad-port 1GbE card, connected all four ports to the switch, and bonded them in the D-Link configuration. In Proxmox I created bond0 and added the four ports of the quad card to it, so part of my plan is done.

I want to use the bonded quad card (instead of the integrated port) as the main Proxmox interface and as the main interface for all VMs, instead of vmbr0.

I followed this post, but afterwards I lost the connection and had to edit /etc/network/interfaces with nano to get the server working again. Now I am back at the beginning and don't know how to do it. Can I get some help?
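To make the goal concrete, this is roughly the layout I think I am aiming for in /etc/network/interfaces. It is only a sketch, not my actual file: the interface names (enp1s0f0 to enp1s0f3), the address and the gateway are placeholders, and the bond mode is an assumption that would have to match whatever the switch is set to (802.3ad only if the switch side is LACP, otherwise something like balance-xor for a static trunk). As far as I understand, VMs in Proxmox attach to a bridge rather than directly to a bond, so the sketch keeps a bridge and only moves its port from the integrated NIC to bond0.

```
auto lo
iface lo inet loopback

# Four ports of the quad card (placeholder names, check with `ip link`)
iface enp1s0f0 inet manual
iface enp1s0f1 inet manual
iface enp1s0f2 inet manual
iface enp1s0f3 inet manual

# Bond over the four ports; the mode is an assumption and must match the switch
auto bond0
iface bond0 inet manual
    bond-slaves enp1s0f0 enp1s0f1 enp1s0f2 enp1s0f3
    bond-miimon 100
    bond-mode 802.3ad
    bond-xmit-hash-policy layer2+3

# Bridge for the host and the VMs, now on top of bond0
# (address and gateway are placeholders)
auto vmbr0
iface vmbr0 inet static
    address 192.168.1.10/24
    gateway 192.168.1.1
    bridge-ports bond0
    bridge-stp off
    bridge-fd 0
```

If something like this is right, I assume the safe way to test it is from the local console, so that I can restore the old file if the connection drops again.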

