I've recently been working at a place that runs Ceph on a 7-node cluster. The cluster consists of 3 compute nodes and 4 storage nodes. The storage nodes have 12 x enterprise HDDs, while the compute nodes have 5 x enterprise 2.5" SSDs in them. So while the 3 compute nodes mostly run the virtual machines, the SSDs in each of them are also part of the Ceph cluster.

There are maps set up to create SSD-only and HDD-only pools. The nodes are linked via dual 10Gb networking on each node, so data throughput "should" be decent.
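To put a rough number on "decent", here is a back-of-envelope sketch of the ceiling the network alone would impose. The replication factor of 3, the assumption that both links can be used in aggregate, and the assumption that replication traffic shares those same links are all guesses for illustration, not facts about this cluster; the function names are made up for the example.

```python
# Back-of-envelope network ceiling for Ceph writes.
# ASSUMPTIONS (not taken from the cluster): replicated pools with size=3,
# both links usable in aggregate, replication traffic on the same links,
# and no protocol overhead.

LINK_GBIT = 10        # per-link speed in Gbit/s
LINKS_PER_NODE = 2    # dual links on each node
REPLICA_SIZE = 3      # assumed pool replication factor


def node_bandwidth_mb_s(link_gbit=LINK_GBIT, links=LINKS_PER_NODE):
    """Raw aggregate bandwidth of one node in MB/s (1 Gbit/s = 125 MB/s)."""
    return link_gbit * 125 * links


def primary_write_ceiling_mb_s(node_mb_s, replica_size=REPLICA_SIZE):
    """Upper bound on client writes handled by one node's primary OSDs.

    Each MB written by a client is forwarded to (replica_size - 1)
    replicas, so outbound replication traffic saturates the links first.
    """
    if replica_size < 2:
        return float(node_mb_s)
    return node_mb_s / (replica_size - 1)


if __name__ == "__main__":
    node = node_bandwidth_mb_s()
    print(f"Raw bandwidth per node: {node} MB/s")
    print(f"Write ceiling per node: {primary_write_ceiling_mb_s(node)} MB/s")
```

Under those assumptions the network tops out at about 2500 MB/s raw per node and roughly 1250 MB/s of client writes per node, so anything far below that points at the disks, the pool layout, or latency rather than the links.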

Under load, the Ceph cluster doesn't appear to perform as well as I'd expect. I know this is a vague statement, but it just feels laggy and less responsive than it should be. I've run tests with various bits of software, and the more you push it with repeated tests, the slower it gets (to a point). So my question is this: what's the best way to test Ceph speed, and what results would people expect?
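For a repeatable baseline, one possible starting point is the `rados bench` tool that ships with Ceph. The sketch below only builds the command lines so the same runs can be repeated against each pool; it is not necessarily what produced the attached results. The pool names `ssd-pool` and `hdd-pool` are placeholders, and the helper `rados_bench_cmd` is hypothetical.

```python
import shlex


def rados_bench_cmd(pool, seconds=60, mode="write", threads=16,
                    block_size=None, keep_objects=False):
    """Build a `rados bench` command line as an argument list.

    mode is one of "write", "seq" (sequential read) or "rand" (random read).
    The read modes need objects left behind by an earlier write run, which
    is what keep_objects (--no-cleanup) is for.
    """
    if mode not in ("write", "seq", "rand"):
        raise ValueError(f"unsupported mode: {mode}")
    cmd = ["rados", "bench", "-p", pool, str(seconds), mode,
           "-t", str(threads)]
    if mode == "write":
        if block_size is not None:
            cmd += ["-b", str(block_size)]   # object size in bytes
        if keep_objects:
            cmd.append("--no-cleanup")       # keep objects for read tests
    return cmd


if __name__ == "__main__":
    # Placeholder pool names; substitute the real SSD and HDD pools.
    for pool in ("ssd-pool", "hdd-pool"):
        for mode in ("write", "seq", "rand"):
            cmd = rados_bench_cmd(pool, mode=mode, keep_objects=True)
            print(shlex.join(cmd))
```

The script only prints the commands. Running the write test with `--no-cleanup` first leaves objects in place for the `seq` and `rand` reads, and `rados -p <pool> cleanup` removes them afterwards.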

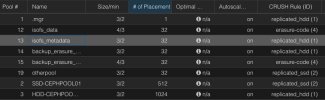

I've also attached an image showing the pool setup. I can see one thing that I assume could be impacting performance: the Meta pool is on HDD. Is this an issue? Would simply editing the pool and selecting SSD instead of HDD be worthwhile? Would moving it to SSD require anything more than changing the location in the pool settings? It's a live cluster, after all.

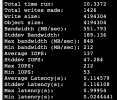

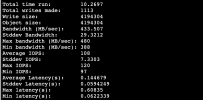

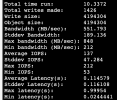

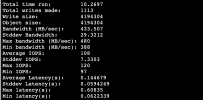

I've also attached some tests. You'll see there's not a massive difference between the SSD and the HDD.
