Hi, I'm trying to get memory ballooning to work on Proxmox 8.4.14 with a Windows Server 2022 guest and VirtIO drivers 1.266 (it also fails on Windows Server 2012).

I set the minimum to 2 GiB and the maximum to 6 GiB. Over time, RAM usage inside the guest climbs to 100%, although no process accounts for it.

The only way I've managed to free the RAM is by disabling the VirtIO Balloon Driver in Device Manager. (If I re-enable it, RAM usage slowly grows again.)

This happens when I start copying files or running MSSQL.

vm.conf:

agent: 1

balloon: 2048

bios: ovmf

boot: order=ide0;virtio1

cores: 5

cpu: host

efidisk0: zpool_4T:vm-104-disk-2,efitype=4m,pre-enrolled-keys=1,size=1M

ide0: none,media=cdrom

machine: pc-q35-8.1

memory: 6144

meta: creation-qemu=8.1.5,ctime=1735219775

name: DESA

net0: virtio=BC:24:11:3C:70:CA,bridge=vmbr0,firewall=1

numa: 0

onboot: 1

ostype: win11

scsihw: virtio-scsi-pci

serial0: socket

smbios1: uuid=4bc1976b-1a98-4b51-91cc-16bde73dd072

sockets: 2

vga: std

virtio1: zpool_4T:vm-104-disk-1,aio=threads,size=83G

virtio2: zpool_4T:vm-104-disk-0,iothread=1,size=2T

vmgenid: 0f086493-dddd-480a-b0af-fe8fa244a42d

vmstatestorage: zpool_4T
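To make the intended range explicit, here is a minimal sketch that reads the two relevant keys from the config above. It assumes `memory` and `balloon` are plain integers in MiB, as they are here; `parse_vm_conf` and `balloon_range_mib` are hypothetical helper names, not part of any Proxmox tooling.

```python
def parse_vm_conf(text):
    """Parse 'key: value' lines of a VM config into a dict (illustrative only)."""
    conf = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, sep, value = line.partition(":")
        if sep:
            conf[key.strip()] = value.strip()
    return conf


def balloon_range_mib(conf):
    """Return (minimum, maximum) guest memory in MiB.

    Assumption: 'memory' is the maximum and 'balloon' the minimum,
    both plain integers; if 'balloon' is absent, minimum == maximum.
    """
    maximum = int(conf["memory"])
    minimum = int(conf.get("balloon", maximum))
    return minimum, maximum


sample = """
balloon: 2048
memory: 6144
"""
low, high = balloon_range_mib(parse_vm_conf(sample))
print(f"balloon range: {low} MiB to {high} MiB")
```

So the guest should be allowed to move anywhere between 2048 MiB and 6144 MiB, which matches the 2G/6G limits I described.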

Some images

1Ram_ini.PNG:

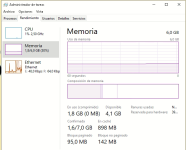

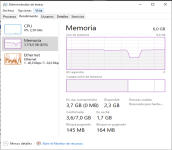

RAM status at OS startup

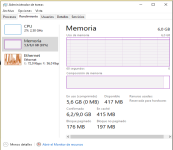

2Ram_Fin-Full.PNG

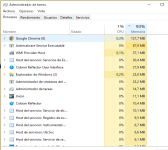

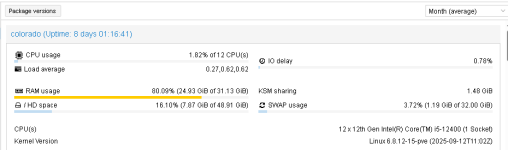

2Ram_Fin-Full-processes.PNG

RAM usage is at 93% here. If I leave it for another 24 hours, it reaches 100% (I couldn't capture a screenshot of that).

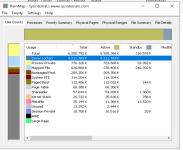

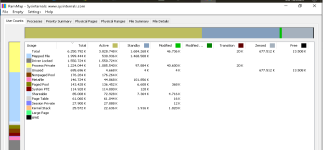

3Ram_Fin-Full-RamMap.PNG

RAMMap shows that the memory is held as driver-locked pages, which appear to belong to the balloon driver.

I've tried disabling KSM, different versions of the VirtIO drivers, and other AI-suggested fixes.

But I can't find a way to fix it.

Should ballooning work in this setup, or is this a bug?

Thanks for reading this far.
