Hello! (Sorry in advance, I'm pretty new here and to Proxmox in general.)

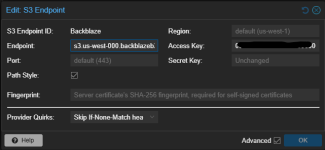

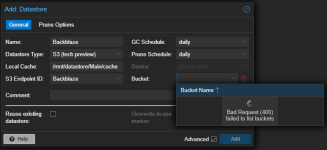

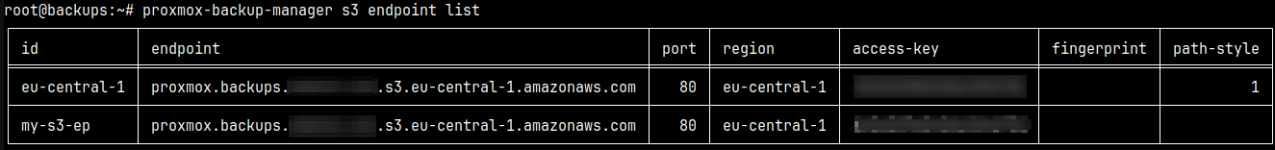

I have been trying to set up an S3 endpoint to test the new functionality since the beta, but I have never been able to make it work. The access token I am using has full control over AWS S3, but when I try to create a datastore using the S3 endpoint I created, I just get the error from the title.

None of these work. I have even tried an admin account...
