Hello there. I have Proxmox 6.3.2, the latest version, fully updated today.

I have a QNAP NAS on a 1 Gigabit network:

My Proxmox host also has a 1 Gb network card:

On Proxmox I don't know how to find the speed of my network card, even from the command line :/

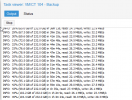
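For reference, here is a minimal sketch of one way to read the negotiated link speed on a Linux host such as Proxmox. It assumes the kernel exposes the value in `/sys/class/net/<interface>/speed` (in Mbit/s), which is the case for most physical NICs. The interface name `eno1` and the helper name `link_speed_mbps` are only placeholders; bridges such as `vmbr0` may not report a meaningful value, so the physical port is the one to check.

```python
from pathlib import Path


def link_speed_mbps(iface, sysfs_root="/sys/class/net"):
    """Return the negotiated link speed in Mbit/s, or None if it is unknown.

    `iface` is a hypothetical interface name such as "eno1".
    """
    speed_file = Path(sysfs_root) / iface / "speed"
    try:
        value = int(speed_file.read_text().strip())
    except (OSError, ValueError):
        # Missing interface, link down, or a driver that does not report speed.
        return None
    # The kernel reports -1 when the speed is unknown.
    return value if value > 0 else None
```

Calling `link_speed_mbps("eno1")` on a port that negotiated 1 Gigabit should return `1000`. If `ethtool` is installed, `ethtool eno1` shows the same information on its `Speed:` line.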

And when I back up my VM, look at the speed of the backup:

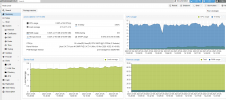

On the graphs I get this:

Look at the speed: I get a maximum of 75 Mb??

I have a 1 Gb link, so what is wrong, please?
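A quick unit check may help when comparing these numbers. Link speeds are quoted in bits per second, while backup and graph figures are often in bytes per second. The sketch below assumes the 75 figure is in megabytes per second, which the post does not confirm. The function names are only illustrative, and protocol overhead is ignored.

```python
def mbit_to_mbyte_per_s(mbit_per_s):
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbit_per_s / 8


def mbyte_to_mbit_per_s(mbyte_per_s):
    """Convert megabytes per second to megabits per second."""
    return mbyte_per_s * 8


LINK_MBIT = 1000     # nominal 1 Gigabit link
OBSERVED_MBYTE = 75  # ASSUMPTION: the graph value is in megabytes per second

print(mbit_to_mbyte_per_s(LINK_MBIT))                   # 125.0 MB/s theoretical ceiling
print(mbyte_to_mbit_per_s(OBSERVED_MBYTE))              # 600 Mbit/s on the wire
print(mbyte_to_mbit_per_s(OBSERVED_MBYTE) / LINK_MBIT)  # 0.6 of the nominal link rate
```

Under that assumption, 75 MB/s is about 60% of what a 1 Gigabit link can carry at best. If the figure is really in megabits per second, it would be only 7.5% of the link.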

Do you know how to speed up my network with Proxmox on my local 1 Gigabit LAN, please?

Thanks
