6-node cluster, all nodes running the latest PVE with all updates applied. The underlying VM/LXC storage is Ceph (RBD). Backups go to CephFS.

During the backup job, syncfs fails, and then the following happens:

• The node and container icons in the GUI show a grey question mark, although the UI itself otherwise keeps working
• The backup job can neither complete nor be aborted
• The VMs cannot be migrated
• The node cannot be restarted cleanly and must be hard reset
• pct commands fail (shutdown, migrate, enter)

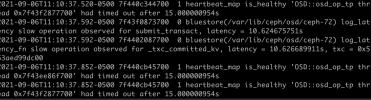

This behavior is new in Proxmox 7.0, and it is happening repeatedly on multiple nodes. There does not always appear to be a clear pattern in which containers are affected, but I will investigate this more thoroughly.
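The backup fails at syncfs with an Input/output error (EIO). One way to check whether that failure can be reproduced outside of vzdump is to call syncfs(2) directly on the filesystem in question. The following is a minimal Python sketch using ctypes, assuming a Linux host with glibc; `syncfs_path` is a hypothetical helper name, and which path to probe (for example, a running container's mounted root) is left to the caller. Note that syncfs waits for outstanding writeback, so on a hung filesystem the call may block instead of returning an error.

```python
import ctypes
import ctypes.util
import os
import sys


def syncfs_path(path: str) -> None:
    """Call syncfs(2) on the filesystem containing `path` (hypothetical helper).

    Raises OSError carrying the kernel's errno (e.g. EIO, reported as
    'Input/output error') if syncfs returns -1.
    """
    # Load libc; use_errno=True lets us read errno right after the call.
    # If find_library returns None, CDLL(None) still exposes libc symbols on Linux.
    libc = ctypes.CDLL(ctypes.util.find_library("c"), use_errno=True)
    # syncfs needs an fd on the target filesystem; a read-only fd on a directory works.
    fd = os.open(path, os.O_RDONLY)
    try:
        if libc.syncfs(fd) != 0:
            err = ctypes.get_errno()
            raise OSError(err, os.strerror(err), path)
    finally:
        os.close(fd)


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print(f"usage: {sys.argv[0]} PATH")
    else:
        target = sys.argv[1]
        try:
            syncfs_path(target)
            print(f"syncfs {target!r} ok")
        except OSError as exc:
            # Mirrors the wording of the vzdump error for easy comparison.
            print(f"syncfs {target!r} failed - {exc.strerror}")
            sys.exit(1)
```

Running it against the same path on a healthy node and on an affected node would show whether the EIO comes from the filesystem itself rather than from vzdump.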

Backup job log:

```
INFO: starting new backup job: vzdump --quiet 1 --pool backup --compress zstd --mailnotification failure --mailto jeremy@idealoft.net --mode snapshot --storage cephfs
INFO: filesystem type on dumpdir is 'ceph' -using /var/tmp/vzdumptmp1254910_102 for temporary files
INFO: Starting Backup of VM 102 (lxc)
INFO: Backup started at 2021-07-30 00:00:02
INFO: status = running
INFO: CT Name: xxxx
INFO: including mount point rootfs ('/') in backup
INFO: excluding bind mount point mp0 ('/mnt/xxxx') from backup (not a volume)
INFO: found old vzdump snapshot (force removal)
rbd error: error setting snapshot context: (2) No such file or directory
INFO: backup mode: snapshot
INFO: ionice priority: 7
INFO: create storage snapshot 'vzdump'
Creating snap: 10% complete...
Creating snap: 100% complete...done.
/dev/rbd4
INFO: creating vzdump archive '/mnt/pve/cephfs/dump/vzdump-lxc-102-2021_07_30-00_00_02.tar.zst'
INFO: Total bytes written: 2504898560 (2.4GiB, 6.4MiB/s)
INFO: archive file size: 686MB
INFO: prune older backups with retention: keep-daily=7, keep-last=22, keep-monthly=1, keep-weekly=4, keep-yearly=1
INFO: pruned 0 backup(s)
INFO: cleanup temporary 'vzdump' snapshot
Removing snap: 100% complete...done.
INFO: Finished Backup of VM 102 (00:06:24)
INFO: Backup finished at 2021-07-30 00:06:26
INFO: filesystem type on dumpdir is 'ceph' -using /var/tmp/vzdumptmp1254910_109 for temporary files
INFO: Starting Backup of VM 109 (lxc)
INFO: Backup started at 2021-07-30 00:06:26
INFO: status = running
INFO: CT Name: xxxxx
INFO: including mount point rootfs ('/') in backup
INFO: excluding bind mount point mp0 ('/mnt/xxxxx') from backup (not a volume)
INFO: excluding bind mount point mp2 ('/mnt/xxxxx') from backup (not a volume)
INFO: found old vzdump snapshot (force removal)
rbd error: error setting snapshot context: (2) No such file or directory
INFO: backup mode: snapshot
INFO: ionice priority: 7
INFO: create storage snapshot 'vzdump'
Creating snap: 10% complete...
Creating snap: 100% complete...done.
/dev/rbd4
INFO: creating vzdump archive '/mnt/pve/cephfs/dump/vzdump-lxc-109-2021_07_30-00_06_26.tar.zst'
INFO: Total bytes written: 3282534400 (3.1GiB, 2.5MiB/s)
INFO: archive file size: 1.16GB
INFO: prune older backups with retention: keep-daily=7, keep-last=22, keep-monthly=1, keep-weekly=4, keep-yearly=1
INFO: pruned 0 backup(s)
INFO: cleanup temporary 'vzdump' snapshot
Removing snap: 100% complete...done.
INFO: Finished Backup of VM 109 (00:22:55)
INFO: Backup finished at 2021-07-30 00:29:21
INFO: filesystem type on dumpdir is 'ceph' -using /var/tmp/vzdumptmp1254910_113 for temporary files
INFO: Starting Backup of VM 113 (lxc)
INFO: Backup started at 2021-07-30 00:29:21
INFO: status = running
INFO: CT Name: xxxxx
INFO: including mount point rootfs ('/') in backup
INFO: excluding bind mount point mp0 ('/mnt/xxxxx') from backup (not a volume)
INFO: excluding bind mount point mp1 ('/mnt/xxxxx') from backup (not a volume)
INFO: including mount point mp2 ('/db') in backup
INFO: backup mode: snapshot
INFO: ionice priority: 7
INFO: suspend vm to make snapshot
INFO: create storage snapshot 'vzdump'
```

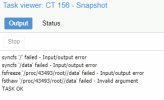

syncfs '/' failed - Input/output error
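To look for a pattern in which containers are affected across runs and nodes, the task logs can be summarized per guest. The following is a minimal Python sketch that assumes only the vzdump line formats visible in the log in this post; `parse_vzdump_log` and `GuestRun` are hypothetical names. It records, for each guest, whether a "Finished Backup" line appeared, whether an "old vzdump snapshot" was found (which may indicate an earlier run did not clean up its snapshot), and any non-INFO lines mentioning an error or failure.

```python
import re
import sys
from dataclasses import dataclass, field
from typing import Iterable, List, Optional

# Patterns based on the vzdump lines shown in the log above (assumption:
# other PVE versions may word these lines differently).
START_RE = re.compile(r"^INFO: Starting Backup of VM (\d+) ")
STARTED_AT_RE = re.compile(r"^INFO: Backup started at (.+)$")
FINISHED_RE = re.compile(r"^INFO: Finished Backup of VM (\d+) ")


@dataclass
class GuestRun:
    vmid: int
    started: Optional[str] = None
    finished: bool = False
    stale_snapshot: bool = False  # "found old vzdump snapshot" was logged
    errors: List[str] = field(default_factory=list)


def parse_vzdump_log(lines: Iterable[str]) -> List[GuestRun]:
    """Group vzdump task-log lines into one record per guest."""
    runs: List[GuestRun] = []
    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # skip blank lines (the pasted log is double-spaced)
        m = START_RE.match(line)
        if m:
            runs.append(GuestRun(vmid=int(m.group(1))))
            continue
        if not runs:
            continue  # job-level lines before the first guest
        current = runs[-1]
        m = STARTED_AT_RE.match(line)
        if m:
            current.started = m.group(1)
        elif FINISHED_RE.match(line):
            current.finished = True
        elif line.startswith("INFO: found old vzdump snapshot"):
            current.stale_snapshot = True
        elif not line.startswith("INFO:") and (
            "error" in line.lower() or "failed" in line
        ):
            current.errors.append(line)
    return runs


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print(f"usage: {sys.argv[0]} TASK_LOG_FILE")
    else:
        with open(sys.argv[1], encoding="utf-8") as fh:
            for run in parse_vzdump_log(fh):
                status = "finished" if run.finished else "DID NOT FINISH"
                stale = " (old vzdump snapshot found)" if run.stale_snapshot else ""
                print(f"VM {run.vmid} started {run.started}: {status}{stale}")
                for err in run.errors:
                    print(f"    {err}")
```

Collecting this summary from each failed job on each node would make it easier to see whether the same VMIDs, or only guests with certain mount points, are the ones that never finish.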